Over the past several weeks, a number of Embedded Vision Alliance contacts have forwarded me writeups on this topic, beginning with my boss and most recently including TI's Brian Carlson, so I'm apparently supposed to write about it 😉 And after doing the research, I've decided that New York University's Interactive Telecommunications Program (NYU ITP) has got to be about the coolest college curriculum in the Universe…this isn't the first time that I've written about something that's come out of it.

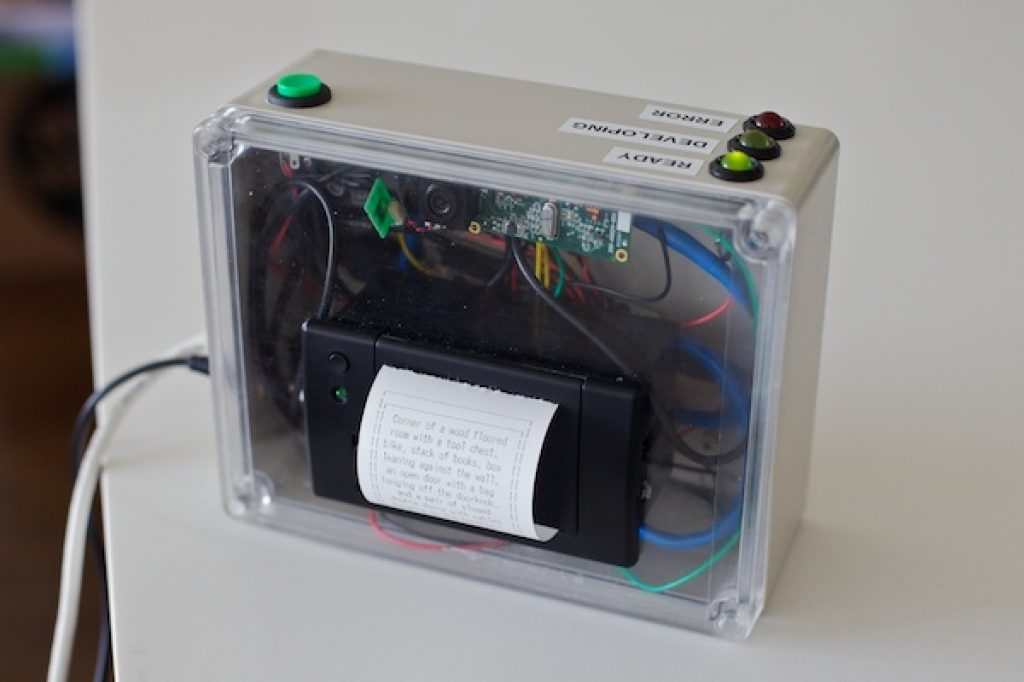

The above image shows the Descriptive Camera, built on a BeagleBone board based on TI's Sitara AM335x processor (thereby explaining Texas Instruments' interest) and developed by Matt Richardson for the Spring 2012 Computational Cameras class at NYU ITP. Here's the overview description:

The Descriptive Camera works a lot like a regular camera: point it at a subject and press the shutter button to capture the scene. However, instead of producing an image, this prototype outputs a text description of the scene. Modern digital cameras capture gobs of parsable metadata about photos such as the camera's settings, the location of the photo, the date, and time, but they don't output any information about the content of the photo. The Descriptive Camera only outputs the metadata about the content.

As we amass an incredible amount of photos, it becomes increasingly difficult to manage our collections. Imagine if descriptive metadata about each photo could be appended to the image on the fly—information about who is in each photo, what they're doing, and their environment could become incredibly useful in being able to search, filter, and cross-reference our photo collections.

At this point, you might be thinking that Richardson somehow accomplished the objective that DARPA's Jim Donlon spoke about two months ago at the Embedded Vision Alliance Summit. Well…not quite. To wit:

The technology at the core of the Descriptive Camera is Amazon's Mechanical Turk API. It allows a developer to submit Human Intelligence Tasks (HITs) for workers on the internet to complete. The developer sets the guidelines for each task and designs the interface for the worker to submit their results. The developer also sets the price they're willing to pay for the successful completion of each task. An approval and reputation system ensures that workers are incented to deliver acceptable results. For faster and cheaper results, the camera can also be put into "accomplice mode," where it will send an instant message to any other person. That IM will contain a link to the picture and a form where they can input the description of the image…

… After the shutter button is pressed, the photo is sent to Mechanical Turk for processing and the camera waits for the results. A yellow LED indicates that the results are still "developing" in a nod to film-based photo technology. With a HIT price of $1.25, results are typically returned within 6 minutes and sometimes as fast as 3 minutes. The thermal printer outputs the resulting text in the style of a Polaroid print.
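To make the capture-to-print flow described above concrete, here's a minimal Python sketch of what the camera's control loop might look like. It is not Richardson's code and does not call the real Mechanical Turk API: `submit`, `poll`, `set_led`, and `print_text` are hypothetical hooks standing in for the HIT (or "accomplice mode" IM) request, the yellow LED, and the thermal printer. The 15-second polling interval and 15-minute timeout are assumptions; only the $1.25 default reward comes from the description above.

```python
import time
from typing import Callable, Optional

# Hypothetical hooks; none of these names come from the original project.
SubmitFn = Callable[[str, float], str]   # (image_path, reward_usd) -> task id
PollFn = Callable[[str], Optional[str]]  # task id -> description, or None if still pending
LedFn = Callable[[bool], None]           # turn the yellow "developing" LED on/off
PrintFn = Callable[[str], None]          # send text to the thermal printer


def develop_photo(
    image_path: str,
    submit: SubmitFn,
    poll: PollFn,
    set_led: LedFn,
    print_text: PrintFn,
    reward_usd: float = 1.25,
    poll_interval_s: float = 15.0,
    timeout_s: float = 15 * 60,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Submit a photo for a human-written description, wait, then print it.

    Returns the description, or None if nothing arrived before the timeout.
    """
    task_id = submit(image_path, reward_usd)
    set_led(True)  # the photo is now "developing"
    waited = 0.0
    try:
        while waited < timeout_s:
            description = poll(task_id)
            if description is not None:
                print_text(description)
                return description
            sleep(poll_interval_s)
            waited += poll_interval_s
        return None
    finally:
        set_led(False)  # always turn the LED off, even on timeout or error


# Demo with a fake service: the "worker" answers on the third poll.
answers = iter([None, None, "A cat asleep on a windowsill."])
led_log = []
result = develop_photo(
    "shot.jpg",
    submit=lambda path, reward: "task-1",
    poll=lambda task_id: next(answers),
    set_led=led_log.append,
    print_text=print,          # prints: A cat asleep on a windowsill.
    sleep=lambda s: None,      # skip real waiting in this demo
)
```

Injecting the `sleep` function lets the demo run instantly, and the `finally` clause ensures the "developing" LED goes dark even when no description arrives before the timeout.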

So it's not exactly computer vision (or, for that matter, embedded vision). But it's still pretty cool, and a precursor to the human-free embedded vision version to follow. For more, check out the following video:

along with the following coverage: