This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

The Virtual Reality (VR) industry is in the midst of a new hardware cycle – higher resolution headsets and better optics being the key focus points for the device manufacturers. Similarly on the software front, there has been a wave of content-rich applications and an emphasis on flawless VR experiences for the end user.

With the introduction of the revolutionary Turing architecture, mainstream GPUs can now deliver the performance required for VR experiences at native resolutions.

There is an opportunity to tap the unused GPU cycles and harness that power to deliver better image quality. Image quality and minimal latency are still the key factors driving the immersive experience for VR users.

Variable Rate Supersampling (VRSS) expands on Turing’s Variable Rate Shading (VRS) feature to deliver image quality improvements by performing selective supersampling. This can also be selectively engaged only if idle GPU cycles are available. VRSS is completely handled from within the NVIDIA display driver without application developer integration.

Background

Supersampling

MSAA (multi-sample antialiasing) is an antialiasing technique used to mitigate aliasing along the edges of geometry. It relies on specialized multi-sampled buffers (render targets). The available buffer configurations are 2x, 4x, and 8x (nX, where ‘n’ denotes the number of samples allocated per pixel). MSAA takes place in the rasterization stage of the pipeline: the triangle is tested for coverage at each of the ‘n’ sample points, building a 16-bit coverage mask that identifies the portion of the pixel covered by the triangle. The pixel shader is then executed once and its value is shared across the samples identified by the coverage mask. The multi-sampled buffer is then resolved into a final frame buffer, which addresses edge aliasing.

SSAA (supersampling antialiasing) operates on the same principle, except that it executes the pixel shader for every covered sample. Each sample location therefore gets its own accurately computed color value. On resolve, this yields higher visual quality than MSAA, at a higher performance cost. MSAA operates along geometric edges, whereas SSAA also operates inside the geometry.

Though SSAA seems to be advantageous for visual quality, it has its own limitations:

- SSAA is performance intensive – The pixel load scales linearly with the number of samples used

- No finer control on shading rate – There is no way to perform 2x supersampling on a 4x MSAA buffer nor can we selectively shade objects based on the rendering region or any other criterion

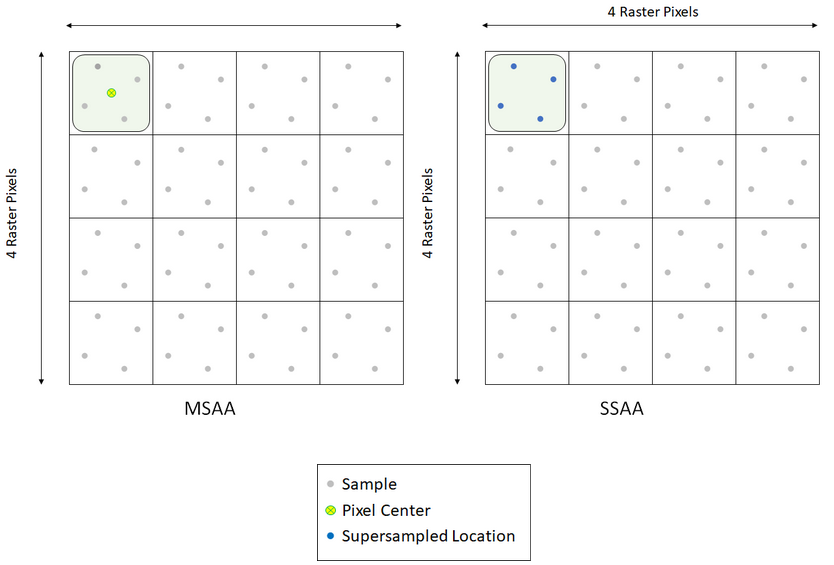

Figure 1: Image representation of pixel shader execution for MSAA and SSAA. MSAA performs a single pixel shader execution and shares the value among the samples covered, whereas SSAA performs a pixel shader execution for all samples

Example of MSAA and SSAA

Figure 2 below shows each pixel as a square and dots indicate sample locations within a pixel.

Figure 2: MSAA and SSAA

Before running Pixel Shaders, hardware generates a “coverage mask”. This stores which samples are covered by the current triangle and which are not.

In Pixel1, all 4 samples are covered.

In Pixel2, only 3 samples are covered.

| MSAA | Supersampling |
| --- | --- |
| Pixel shader executes once per pixel at the center, generates only one shade and replicates this to the “covered” samples. | Pixel shader executes once per sample at sample locations, generates unique shades for each covered sample. |
| Pixel1: All 4 samples contain the exact same shade, so averaging them yields same shade: No Quality Improvement | Pixel1: All 4 samples contain unique shades, so averaging them yields more correct shade: Gives Quality Improvement + internal anti-aliasing |
| Pixel2: 3 samples contain the exact same shade, 4th sample contains background color, so averaging them yields anti-aliased output: No Quality Improvement, but edge anti-aliased | Pixel2: 3 samples contain unique shades, 4th sample contains background color, so averaging them yields more correct and anti-aliased output: Gives Quality Improvement + edge anti-aliasing |
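The Pixel1 and Pixel2 cases above can be mimicked with a toy model. The following is only an illustrative sketch, not GPU or driver code: the `shade` function (a hard-edged stripe standing in for high-frequency content), the sample positions, and the background value are all assumptions made for the example.

```python
# Toy single-pixel model of MSAA vs. SSAA shading and resolve.
# Everything here (shader, sample pattern, colors) is hypothetical.

# Four sample positions inside a unit pixel (an assumed pattern).
SAMPLES = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]
BACKGROUND = 0.0


def shade(x, y):
    """Hypothetical pixel shader: a stripe edge running through the pixel."""
    return 1.0 if x >= 0.5 else 0.0


def msaa_pixel(coverage):
    """Shade once at the pixel center and replicate to the covered samples."""
    center = shade(0.5, 0.5)
    return [center if covered else BACKGROUND for covered in coverage]


def ssaa_pixel(coverage):
    """Shade every covered sample at its own location."""
    return [shade(x, y) if covered else BACKGROUND
            for (x, y), covered in zip(SAMPLES, coverage)]


def resolve(samples):
    """Box-filter resolve: average the per-sample values."""
    return sum(samples) / len(samples)


pixel1 = [True, True, True, True]    # all 4 samples covered
pixel2 = [True, True, True, False]   # 4th sample shows the background

msaa1, ssaa1 = msaa_pixel(pixel1), ssaa_pixel(pixel1)
msaa2, ssaa2 = msaa_pixel(pixel2), ssaa_pixel(pixel2)

print("Pixel1 MSAA:", resolve(msaa1), "SSAA:", resolve(ssaa1))
print("Pixel2 MSAA:", resolve(msaa2), "SSAA:", resolve(ssaa2))
```

In this model, MSAA only changes the result for Pixel2, where the background sample is blended in, while SSAA also smooths the stripe inside the fully covered Pixel1.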

Super Resolution

Applications can offer an in-game dynamic scaling feature that scales the rendering resolution by multiplying the render target size by a factor. However, such scaling is carried out across the entire frame. Even the periphery, where it is not really required for VR, gets rendered at the higher resolution. A better but more complex solution would be to render only the central region at a higher resolution and downsize it for better quality.
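To get a feel for the difference, the pixel counts can be compared with some simple arithmetic. This is a rough sketch under assumed numbers: the 2000x2000 per-eye render target, the 1.5x per-axis scale factor, and a central region spanning half of each axis are all hypothetical values chosen for illustration.

```python
def pixels_full_scale(width, height, scale):
    """Pixels shaded when the whole render target is scaled per axis by `scale`."""
    return int(width * scale) * int(height * scale)


def pixels_central_scale(width, height, scale, central_fraction):
    """Pixels shaded when only a centered rectangle is scaled.

    `central_fraction` is the share of each axis covered by the central region.
    """
    central_w = int(width * central_fraction)
    central_h = int(height * central_fraction)
    periphery = width * height - central_w * central_h
    return periphery + int(central_w * scale) * int(central_h * scale)


W, H = 2000, 2000  # hypothetical per-eye render target
native = W * H
full = pixels_full_scale(W, H, 1.5)
central = pixels_central_scale(W, H, 1.5, 0.5)

print(f"native: {native}, full-frame scaling: {full}, central-only: {central}")
```

With these assumed values, scaling the full frame more than doubles the pixel count, while scaling only the central region adds roughly a third.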

Region of Interest

In VR, the rendering regions can be categorized as the foveated central region and the periphery. Viewers tend to focus their attention mostly on the central region of the screen. Lens distortion significantly squashes the periphery of the image, so detail there isn’t perceived by the user. Any enhancement carried out on just the central region would therefore improve the overall experience for end users.

Turing Variable Rate Shading (VRS)

Turing’s VRS has the ability to selectively control the shading rates across the rendering surface. Instead of executing the pixel shader once per pixel, VRS can dynamically change the shading rate during actual pixel shading in one of two ways:

- Coarse Shading: Execution of the pixel shader once per multiple raster pixels and copying the same shade to those pixels

- Supersampling: Execution of the pixel shader more than once per single raster pixel

Such VRS capabilities allow us to selectively improve visual quality with supersampling applied just to the foveated central region.
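The two shading modes can be thought of as different numbers of pixel shader invocations per raster pixel. The sketch below is purely illustrative; the rate names and the `shader_invocations` helper are hypothetical and are not part of any VRS API.

```python
from fractions import Fraction

# Hypothetical rate names mapped to pixel shader invocations per raster pixel.
SHADING_RATES = {
    "coarse_2x2": Fraction(1, 4),      # one invocation shared by a 2x2 pixel block
    "1x": Fraction(1, 1),              # default: one invocation per pixel
    "supersample_4x": Fraction(4, 1),  # four invocations per pixel
}


def shader_invocations(pixel_count, rate):
    """Number of pixel shader invocations for `pixel_count` pixels at `rate`."""
    return int(pixel_count * SHADING_RATES[rate])


for rate in SHADING_RATES:
    print(rate, shader_invocations(1024, rate))
```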

Variable Rate Supersampling (VRSS)

Introduction

VRSS operates on the principle of selectively supersampling the central region of a frame – fixed foveated supersampling. This is possible using Turing’s VRS capability to apply different shading rates across a render target. While the central region is supersampled, the peripheral region is untouched. VRSS can also be selectively engaged only if idle GPU cycles are available.

Figure 3: Conceptual representation of VRSS. [Image of Boneworks courtesy of Stress Level Zero]

The central region can be supersampled up to 8x – this is based on the MSAA level selected in the application. All this is completely handled from within the NVIDIA display driver without the need of any application integration.
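Conceptually, fixed foveated supersampling amounts to a per-region shading rate map in which the center is shaded more often than the periphery. The following is a minimal sketch of that idea only. The tile grid, the rectangular shape of the central region, and the `build_foveated_mask` helper are assumptions for illustration and do not reflect how the driver actually lays out its mask.

```python
def build_foveated_mask(tiles_x, tiles_y, central_fraction, supersample_rate):
    """Return a 2D list of per-tile shading rates (invocations per pixel).

    Tiles inside a centered rectangle get `supersample_rate`;
    peripheral tiles stay at the default rate of 1.
    """
    x0 = round(tiles_x * (1 - central_fraction) / 2)
    y0 = round(tiles_y * (1 - central_fraction) / 2)
    x1, y1 = tiles_x - x0, tiles_y - y0
    return [[supersample_rate if (x0 <= x < x1 and y0 <= y < y1) else 1
             for x in range(tiles_x)]
            for y in range(tiles_y)]


# Hypothetical 8x8 tile grid, central region covering half of each axis,
# supersampled 4x (as would correspond to a 4x MSAA buffer).
mask = build_foveated_mask(8, 8, 0.5, 4)
for row in mask:
    print(" ".join(str(rate) for rate in row))
```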

VRSS

Users can enable VRSS from the NVIDIA Control Panel -> ‘Manage 3D Settings’ page -> ‘Program Settings’ tab. There are two options to choose from when turning VRSS ‘On’:

- Adaptive – Applies supersampling to the central region of a frame. Size of the central region varies based on the GPU headroom available

- Always On – Applies supersampling to the fixed size central region of a frame. This mode does not consider the GPU headroom availability and might result in frame drops

This feature is available only for applications that meet the criteria below and are profiled by NVIDIA:

- DirectX 11 VR applications

- Forward Rendered with MSAA

Under the hood

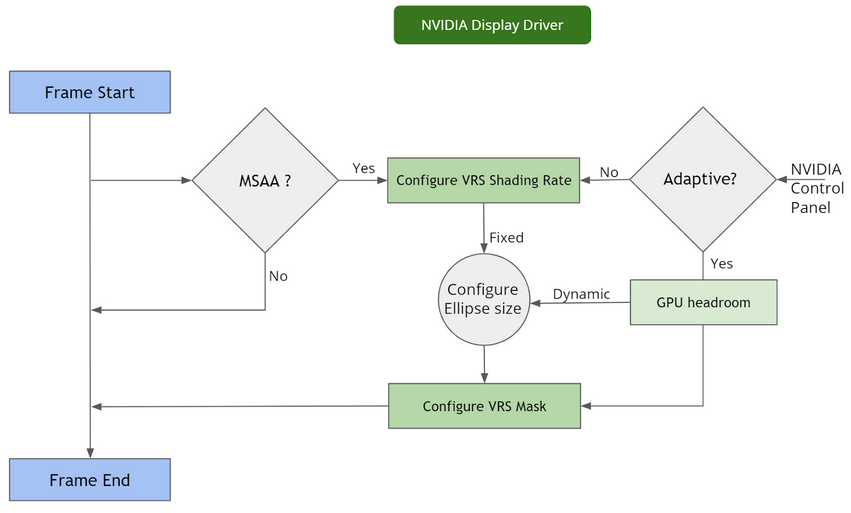

VRSS does not require any developer integration, and the entire feature is implemented and handled inside the NVIDIA display driver, provided the application is compatible and profiled by NVIDIA.

The NVIDIA display driver handles several pieces of functionality internally, including:

- Frame Resources tracking – The driver keeps track of the resources encountered per frame and builds a heuristic model to flag scenarios where VRS could be invoked. It notes the MSAA level to configure the VRS shading rate, that is, the supersampling factor to be used at the center of the image. It also provides the render target parameters for configuring the VRS mask.

- Frame Render monitoring – This involves measuring the rendering load across frames, the current frame rate of the application, target frame rates based on the HMD refresh rate, etc. These measurements in turn yield the rendering stats required for the Adaptive mode of VRSS.

- Variable Rate Supersampling enablement – As mentioned previously, VRS gives us the ability to configure the shading rate across the render surface. This is done via a shading rate mask and a shading rate lookup table. The technical details for these are available in Turing Variable Rate Shading in VRWorks. The VRS infrastructure setup handles configuration of the VRS mask and the VRS shading rate lookup table. The VRS framework is configured based on the performance stats as follows:

- VRS Mask – Size of the central region mask is configured proportionally to the headroom availability

- VRS Shading rates are configured based on the MSAA buffer’s sample count detected

Figure 4: A flow chart depicting the per-frame cycle for VRS
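The frame render monitoring step described above boils down to comparing the measured GPU load against the frame budget implied by the HMD refresh rate. The driver's actual heuristics are not described here, so the following is only a sketch of one plausible way to express GPU headroom. The function names, the averaging window, and the sample timings are all assumptions.

```python
def target_frame_time_ms(hmd_refresh_hz):
    """Frame budget in milliseconds for a given HMD refresh rate."""
    return 1000.0 / hmd_refresh_hz


def gpu_headroom(gpu_frame_times_ms, hmd_refresh_hz):
    """Fraction of the frame budget left unused, averaged over recent frames.

    Returns a value clamped to [0, 1]; 0 means no spare GPU time.
    """
    budget = target_frame_time_ms(hmd_refresh_hz)
    average = sum(gpu_frame_times_ms) / len(gpu_frame_times_ms)
    return max(0.0, min(1.0, 1.0 - average / budget))


# Hypothetical GPU times (ms) for the last few frames on a 90 Hz HMD.
recent = [7.8, 8.1, 8.4, 7.9]
print(f"budget: {target_frame_time_ms(90):.2f} ms, "
      f"headroom: {gpu_headroom(recent, 90):.2f}")
```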

VRSS modes

Adaptive

The Adaptive mode takes performance limits into consideration: it tries to maximize the supersampled region without hindering the VR experience. Here, the size of the central region grows and shrinks in proportion to the available GPU headroom.

Figure 5: Depicting the dynamic sizing of the central region for Adaptive mode. Supersampled region is depicted with a green mask. Supersampled region shrinks and grows based on the GPU load (scene complexity).
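The grow-and-shrink behavior of Adaptive mode can be sketched as a simple feedback step. This is not the driver's algorithm; the `update_central_fraction` helper, the size limits, and the smoothing `gain` are hypothetical choices that merely illustrate a region size that tracks GPU headroom.

```python
def update_central_fraction(current, headroom,
                            min_fraction=0.0, max_fraction=0.6, gain=0.5):
    """Move the central-region size toward a target proportional to headroom.

    `current` and the return value are the share of each axis that is
    supersampled; `headroom` is the unused share of the frame budget (0..1).
    `gain` damps the change so the region does not jump between frames.
    """
    target = min_fraction + (max_fraction - min_fraction) * headroom
    updated = current + gain * (target - current)
    return max(min_fraction, min(max_fraction, updated))


# Hypothetical headroom trace: a light scene followed by a heavy one.
fraction = 0.3
for headroom in [0.8, 0.8, 0.8, 0.1, 0.1, 0.0]:
    fraction = update_central_fraction(fraction, headroom)
    print(f"headroom {headroom:.1f} -> central fraction {fraction:.3f}")
```

Damping the update is a design choice in this sketch: it trades a slower response to load changes for fewer visible jumps in the size of the supersampled region.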

Always On

In this mode, a fixed foveated central region is always supersampled – providing maximum image quality improvements. The size of the central region is adequate to cover the user’s field of view at the center.

This mode helps users perceive the maximum IQ gains possible for a given title using VRSS. However, it might result in frame drops for applications that are performance intensive.

Developer Guidance

With VRSS, we have provided a simple demonstration of the underlying VRS tech. This feature is available for applications profiled by NVIDIA. Developers could submit their games and applications to NVIDIA for consideration.

The advantage of enlisting an application for VRSS would be as follows:

- No integration: No explicit VRS API integration is required. Completely encapsulated in the NVIDIA display driver

- Ease of use: Simple switch (using the NVIDIA Control Panel) to turn on/off supersampling – enhanced image quality for the end user

- Quality improvement: Supersampling provides the highest possible quality of rendering – also mitigates aliasing wherever possible

- Performance mode: Adaptive mode tries to provide image quality improvement without suffering performance losses

- Maintenance: Since the entire tech is at the driver level, no maintenance is required from the developer side

Game and Application Compatibility

The criteria for profiling an application for VRSS are as follows:

- DirectX 11 VR applications

- Forward Rendered with MSAA – Supersampling requires an MSAA buffer, so applications using MSAA are compatible. The supersampling factor applied is based on the number of samples in the underlying MSAA buffer: the central region is supersampled 2x for MSAA-2x, 4x for MSAA-4x, and so on. The maximum shading rate applied for supersampling is 8x. The higher the MSAA level, the greater the supersampling effect.
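The relationship between the MSAA level and the supersampling factor described above can be written down as a small mapping. This is an illustrative sketch only; the `supersampling_factor` helper and its error handling are hypothetical and do not correspond to a driver interface.

```python
MAX_SUPERSAMPLING = 8  # maximum shading rate applied for supersampling


def supersampling_factor(msaa_samples):
    """Supersampling factor for the central region given the MSAA sample count.

    The factor follows the MSAA level (2x -> 2x, 4x -> 4x, ...) and is capped
    at 8x. A buffer without MSAA is treated as incompatible in this sketch.
    """
    if msaa_samples < 2:
        raise ValueError("VRSS needs an MSAA buffer (2 or more samples)")
    return min(msaa_samples, MAX_SUPERSAMPLING)


for samples in (2, 4, 8):
    print(f"MSAA-{samples}x -> {supersampling_factor(samples)}x supersampling")
```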

Enabling MSAA in Unreal and Unity

MSAA is a popular anti-aliasing technique associated with forward rendering, and it is well-suited to VR. Major game engines like Unreal and Unity have support for forward rendering in VR. If the game is already forward rendered, adding MSAA support is relatively easy.

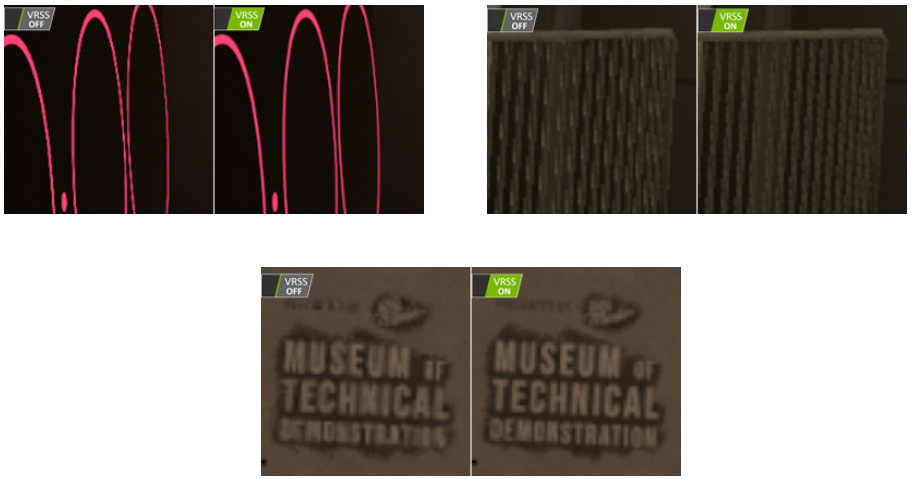

Content Suitability

Content that benefits from supersampling will benefit from VRSS as well. Supersampling not only mitigates aliasing but also brings out details in an image. The degree of quality improvement will vary across content though.

Figure 6: Examples of VRSS improving the content. [Image of Boneworks courtesy of Stress Level Zero]

Supersampling shines when it encounters the following types of content:

- High resolution textures

- High frequency content

- Textures with alpha channels – fence, foliage, menu icons, text, etc.

However, it does not exhibit much IQ improvement for:

- Flat shaded geometry

- Texture with low level of detail

Come Onboard!

With VRSS, we have provided a simple demonstration of the underlying VRS technology for selective supersampling, one that does not need any application integration.

VRS technology is already available as explicit programming APIs, with fine-grained control for application integration, through VRWorks – Variable Rate Shading (VRS). Developers can also leverage VRS on their own for lens matched shading, content adaptive shading, gaze tracked shading, etc.

VRSS (Variable Rate Supersampling) is directly available out of the box without requiring any application integration for DirectX 11 MSAA based applications profiled by NVIDIA. Come onboard and submit your games and applications to NVIDIA for VRSS consideration.

The following 24 titles already support VRSS at launch with NVIDIA driver 441.87:

- Battlewake

- Boneworks

- Eternity Warriors™ VR

- Hot Dogs, Horseshoes and Hand Grenades

- In Death

- Job Simulator

- Killing Floor: Incursion

- L.A. Noire: The VR Case Files

- Lone Echo

- Mercenary 2: Silicon Rising

- Pavlov VR

- Raw Data

- Rec Room

- Rick and Morty: Virtual Rick-ality

- Robo Recall

- SairentoVR

- Serious Sam VR: The Last Hope

- Skeet: VR Target Shooting

- Space Pirate Trainer

- Special Force VR: Infinity War

- Spiderman: Far from Home

- Spiderman: Homecoming – Virtual Reality Experience

- Talos Principle VR

- The Soulkeeper VR

DeepChand Palswamy

Senior Software Engineer, VRWorks Graphics Team, NVIDIA

Swaroop Bhonde

Senior System Software Engineer, Pune Design Center (India), NVIDIA