This blog post was originally published at Synaptics’ website. It is reprinted here with the permission of Synaptics.

The momentum behind ultra-high definition (UHD), or 4K, displays makes them one of the more interesting growth areas in all of electronics. Market forecasters estimated the market to be worth about $48 billion in 2019 and projected growth at a CAGR of 23% from 2020 to 2025. The potential for significantly enhanced viewing and gaming experiences has consumers excited about displays with four times as many pixels as the previous generation (UHD is 3840 × 2160, against 1920 × 1080 for Full HD; the “4K” name refers to the roughly 4,000-pixel horizontal resolution).
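The pixel arithmetic behind the "four times" figure is easy to check. The short sketch below assumes the standard consumer resolutions of 1920 × 1080 for Full HD and 3840 × 2160 for UHD; the names `FULL_HD`, `UHD_4K` and `pixel_count` are illustrative only.

```python
# Standard consumer resolutions (width, height); assumed, not taken from the post.
FULL_HD = (1920, 1080)
UHD_4K = (3840, 2160)


def pixel_count(resolution):
    """Return the total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


ratio = pixel_count(UHD_4K) / pixel_count(FULL_HD)

print(pixel_count(FULL_HD))  # 2073600
print(pixel_count(UHD_4K))   # 8294400
print(ratio)                 # 4.0
```

Doubling both width and height is what produces the factor of four in pixel count.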

The problem is that content providers have been slow to make true 4K content available. Very little 4K content is broadcast over the air today. Some broadcasters offer 4K channels, but programming is limited, typically to special events. The majority of linear TV content still comes through at 720p or 1080i resolution. Most true 4K content comes from streaming providers like Netflix, Disney+ and Amazon Video, and even their 4K catalogs are significantly smaller than their non-4K libraries.

One of the primary issues is bandwidth. Television broadcasters and IPTV service providers simply don’t have the bandwidth to deliver large numbers of high-quality 4K streams at once, so they have been encoding Full HD (FHD) content rather than Ultra HD. The compressed Full HD stream (encoded with HEVC, H.264, AV1 or VPx) meets their goal of a reasonable bitrate, and it can produce a reasonable viewing experience when a traditional video up-scaler on the consumer’s UHD TV enlarges it. But even the best decoder and the best up-scaler cannot recover the finer details and textures that were in the original source, because they were lost during downscaling to FHD.
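A toy example can make the loss concrete. The sketch below is an illustration under simplifying assumptions, not how any production scaler works: it uses a 2x2 box filter for downscaling and nearest-neighbour repetition for upscaling, on a tiny grayscale image stored as nested lists. Real scalers use better filters, but detail finer than the lower resolution can represent is discarded in the same way.

```python
def downscale_2x(image):
    """Average each 2x2 block into one pixel (a simple box filter)."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1]
             + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def upscale_2x(image):
    """Nearest-neighbour upscaling: repeat each pixel into a 2x2 block."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


# A 4x4 checkerboard: the finest texture this grid can hold.
source = [[255 if (x + y) % 2 == 0 else 0 for x in range(4)] for y in range(4)]

restored = upscale_2x(downscale_2x(source))

print(source[0])    # [255, 0, 255, 0]
print(restored[0])  # [127.5, 127.5, 127.5, 127.5]
```

The restored image has the original dimensions, but the checkerboard texture has been averaged into uniform gray, and no conventional filter applied afterwards can tell what pattern was there.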

AI offers a solution

A machine-learning model can classify portions of the image, infer the original appearance, and generate appropriate additional pixels that have the desired image enhancement effect.

But the most important near-term impact of AI for providers of video content may lie in another direction entirely. AI-based Super Resolution, an emerging technique that uses deep-learning inference to enhance the perceived resolution of an image beyond the resolution of the input data, can give viewers a compelling 4K Ultra High Definition (UHD) experience on their 4K TVs from an FHD-resolution source.

This rather non-intuitive result translates into users delighted by the range of 4K content suddenly available to them, and operators delighted by significantly reduced storage, remote caching, and bandwidth needs (and consequent energy savings across their systems) compared with what they would have incurred by streaming or broadcasting native 4K content.

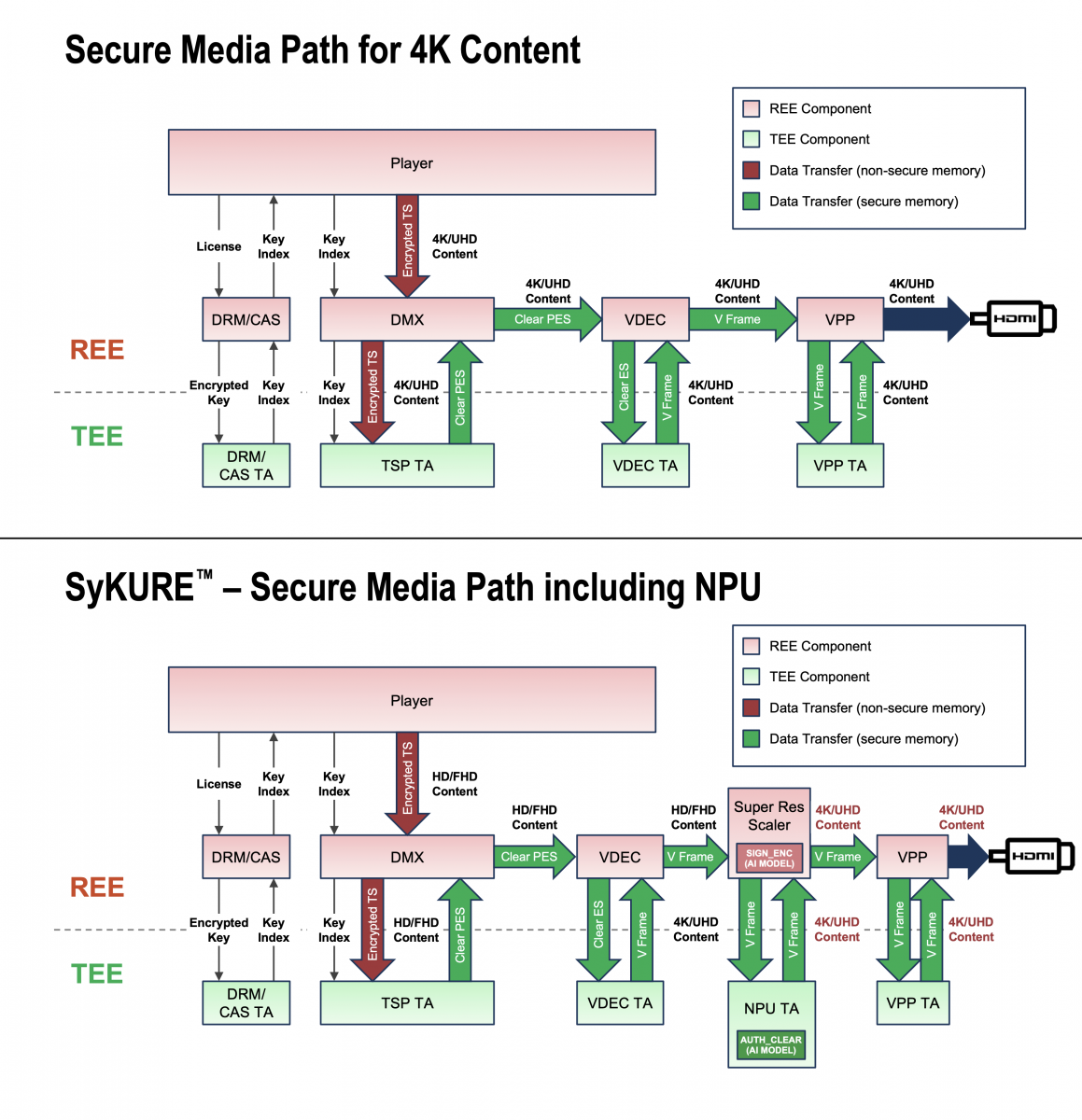

This might seem a rather academic point: to be most effective, the receiving side of Super Resolution must be executed at the extreme network edge (the user premises and device), and the receiver’s deep-learning inference task can be highly compute-intensive, especially under the real-time constraints of streaming video. At Synaptics, however, we have been able to demonstrate that the Neural Processing Unit (NPU), a compact deep-learning inference accelerator integrated into our recent set-top SoC, can in fact perform Super Resolution image expansion in real time, to the satisfaction of critical viewers. On top of that, Synaptics technology ensures that premium video content stays out of the hands of video pirates even when it is processed by an ML model running on the NPU.
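To get a feel for the real-time constraint, the per-frame time budget and output pixel rate can be worked out for a few frame rates. This is a back-of-the-envelope sketch: the frame rates below are common video rates chosen for illustration, not figures from Synaptics, and the function names are hypothetical.

```python
def frame_budget_ms(frames_per_second):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / frames_per_second


def output_pixels_per_second(width, height, frames_per_second):
    """Number of output pixels the upscaler must produce each second."""
    return width * height * frames_per_second


# Illustrative frame rates (assumed); output resolution is UHD, 3840 x 2160.
for fps in (24, 30, 60):
    budget = frame_budget_ms(fps)
    rate = output_pixels_per_second(3840, 2160, fps)
    print(f"{fps} fps: {budget:.2f} ms per frame, {rate / 1e6:.1f} Mpixel/s")

# 24 fps: 41.67 ms per frame, 199.1 Mpixel/s
# 30 fps: 33.33 ms per frame, 248.8 Mpixel/s
# 60 fps: 16.67 ms per frame, 497.7 Mpixel/s
```

Whatever the model, inference for every frame has to finish inside that budget, which is why dedicated accelerator hardware matters on the receiving device.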

Synaptics offers such a solution for deploying AI-based Super Resolution with its VS600 series Multimedia SoC platforms. With it, operators can limit their use of native 4K content files, allowing them to offer a far broader range of 4K-quality content while saving storage, cache, and bandwidth. But to do so, they must specify receiving-end devices with neural-network inference accelerator hardware that is fast enough for guaranteed real-time upscaling of each frame and has hardware security that will withstand the intense scrutiny of copyright owners.

Read more about the details of such a solution in this article in Streaming Media magazine.

Gaurav Arora

VP, Systems Design/Architecture, Synaptics