Zuoguan Wang, Senior Algorithm Manager at Black Sesame Technologies, presents the “Joint Regularization of Activations and Weights for Efficient Neural Network Pruning” tutorial at the September 2020 Embedded Vision Summit.

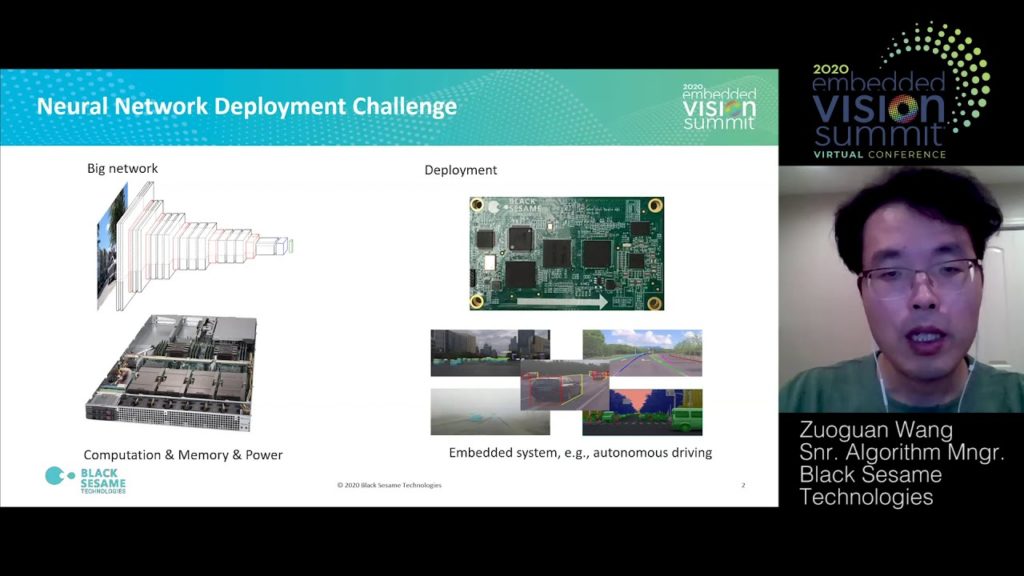

With the rapid growth in the size of deep neural networks (DNNs), there has been extensive research on model compression to improve deployment efficiency. In this presentation, Wang describes his company's work to extend compression beyond weights to neuron activations. He proposes a joint regularization technique that simultaneously regularizes the distributions of weights and activations. By identifying and exploiting the significant differences among neuron responses and among connections during training, the resulting jointly pruned networks (JPnet) optimize the sparsity of both activations and weights.
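The talk summary does not give the exact formulation, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes L1 penalties on both the weights and the post-ReLU activations of a single fully connected layer, weighted by hypothetical coefficients `lambda_w` and `lambda_a`, followed by simple magnitude-threshold pruning. All function names are hypothetical, and this is not JPnet's actual implementation.

```python
# Minimal sketch of joint weight/activation regularization for one fully
# connected ReLU layer, y = ReLU(W x). ASSUMPTIONS: L1 penalties on both W and
# y, hypothetical coefficients lambda_w / lambda_a, and a plain magnitude
# threshold for pruning. This is not JPnet's exact formulation.

def forward(W, x):
    """Return pre-activations z = W x and activations a = ReLU(z)."""
    z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    a = [max(0.0, z_i) for z_i in z]
    return z, a


def joint_penalty(W, a, lambda_w, lambda_a):
    """lambda_w * ||W||_1 + lambda_a * ||a||_1, added to the task loss."""
    l1_w = sum(abs(w) for row in W for w in row)
    l1_a = sum(abs(a_i) for a_i in a)
    return lambda_w * l1_w + lambda_a * l1_a


def penalty_subgradient(W, x, z, lambda_w, lambda_a):
    """Subgradient of joint_penalty with respect to W.

    The weight term contributes lambda_w * sign(W_ij). Because a_i = ReLU(z_i)
    is non-negative, the activation term contributes lambda_a * x_j only for
    active neurons (z_i > 0), nudging their incoming weights toward smaller
    responses.
    """
    def sign(v):
        return (v > 0) - (v < 0)

    return [
        [lambda_w * sign(w_ij) + (lambda_a * x_j if z_i > 0 else 0.0)
         for w_ij, x_j in zip(row, x)]
        for row, z_i in zip(W, z)
    ]


def prune(values, threshold):
    """Zero out entries whose magnitude falls below the threshold."""
    return [v if abs(v) >= threshold else 0.0 for v in values]


def sparsity(values):
    """Fraction of entries that are exactly zero."""
    return sum(1 for v in values if v == 0.0) / len(values)


# Usage on a toy 2x2 layer (hypothetical numbers).
W = [[0.5, -0.01], [0.02, -0.8]]
x = [1.0, 2.0]
z, a = forward(W, x)
penalty = joint_penalty(W, a, lambda_w=0.1, lambda_a=0.01)
grad = penalty_subgradient(W, x, z, lambda_w=0.1, lambda_a=0.01)
W_pruned = [prune(row, threshold=0.05) for row in W]
a_pruned = prune(a, threshold=0.05)
print(penalty, sparsity([w for row in W_pruned for w in row]), sparsity(a_pruned))
```

In a real training loop, the penalty would be added to the task loss and differentiated by an autodiff framework; the hand-written subgradient is shown only to make visible how the activation term also shapes the weights.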

The resulting deep sparsification opens up further optimization opportunities for existing DNN accelerators that exploit sparse matrix operations. Wang evaluates the effectiveness of joint regularization on a variety of network models with different activation functions and on different datasets. With inference accuracy degradation constrained to 0.4%, JPnet saves 72% to 99% of computation cost, with up to 5.2x and 12.3x reductions in the numbers of activations and weights, respectively.
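Computation savings of this kind come from skipping multiply-accumulate (MAC) operations in which either operand is zero. The sketch below uses a simplified cost model, not necessarily how the talk measured cost: it counts the MACs a zero-skipping matrix-vector product still needs and compares that with an estimate assuming weight and activation zeros are independent, so the surviving fraction is roughly the product of the two densities. A reduction factor of r in the number of nonzero values corresponds to a density of about 1/r. Function names and values are hypothetical.

```python
# Simplified cost model for a sparse matrix-vector product y = W x.
# ASSUMPTION: cost is proportional to the number of MACs whose weight and
# input activation are both nonzero; real accelerators add indexing overhead.

def density(values):
    """Fraction of nonzero entries."""
    return sum(1 for v in values if v != 0.0) / len(values)


def effective_macs(W, x):
    """Count MACs that a zero-skipping kernel must still perform."""
    return sum(
        1
        for row in W
        for w_ij, x_j in zip(row, x)
        if w_ij != 0.0 and x_j != 0.0
    )


def estimated_savings(weight_density, act_density):
    """Fraction of MACs saved, assuming zeros in W and x are independent."""
    return 1.0 - weight_density * act_density


# Usage on a toy pruned layer (hypothetical values).
W = [[0.5, 0.0], [0.0, -0.8]]
x = [0.48, 0.0]
dense_macs = len(W) * len(x)
measured = 1.0 - effective_macs(W, x) / dense_macs
flat_w = [w for row in W for w in row]
estimate = estimated_savings(density(flat_w), density(x))
print(measured, estimate)
```

The independence assumption is only a first approximation; in real networks the weight and activation sparsity patterns may be correlated, so the measured count is the more reliable figure.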
