This is a reprint of an Altera white paper, which is also available as a PDF.

Cameras and other equipment used in surveillance and machine vision perform a variety of tasks, ranging from image signal processing (ISP), video transport and video format conversion to video compression and analytics.

Video Processing – Today versus Tomorrow

The video processing chain, starting at a CMOS or CCD image sensor and ending at a display, storage or video transport medium, consists of several stages: sensor interfacing, image signal processing, image compression, video format conversion (transcoding), transport and storage. Traditional video processing still largely relies on discrete devices, such as Digital Signal Processors (DSPs), for each of these functions. Application Specific Standard Products (ASSPs) that combine two or more of these functions are available; however, once an ASSP has been fabricated it cannot be altered, which creates a challenge for video system designers. The challenge has several causes: rapid changes in sensor technology that require new ways of interfacing with sensors; the introduction of High Dynamic Range (HDR) requirements into image signal processing pipelines, so that cameras can see equally well in the light and dark areas of a mixed scene; improvements in the efficiency of compression algorithms; and the emerging need to support Video Content Analytics (VCA).

Programmable devices like the FPGA give system designers the flexibility to respond to these challenges by letting them change the behavior of the hardware that implements these functions. Because FPGAs are reprogrammable, they can be used to implement multiple behaviors within the same device. Entire cameras, surveillance systems and machine vision systems can be implemented in a single device, which reduces cost, power consumption and board space, and improves system reliability because there are fewer components. In addition, because of their parallel architecture, FPGAs tend to outperform DSPs for many of the functions required in video surveillance and machine vision.

FPGAs Make it Easy to Support New Image Sensor Interfaces

CMOS and CCD camera image sensors convert light (visible, infrared, etc.) into electrical signals. Sensor manufacturers commonly use different electrical interfaces to output pixel information, and these interfaces can vary greatly between devices, even devices from the same manufacturer. This often poses a problem for camera manufacturers who implement ISP pipelines in ASSPs, which can usually interface with only one or two sensor interface schemes. FPGAs, being fully programmable, have no such constraint and can easily be reprogrammed to accommodate different, even totally new, camera sensor interfaces. For example, many sensor manufacturers have recently moved from parallel to serial image sensor interfaces to accommodate the higher output data rates of high-megapixel sensors. Implementing the sensor interface in an FPGA lets camera manufacturers upgrade to newer, improved sensors as they become available, without having to redesign the camera board around a different ASSP.

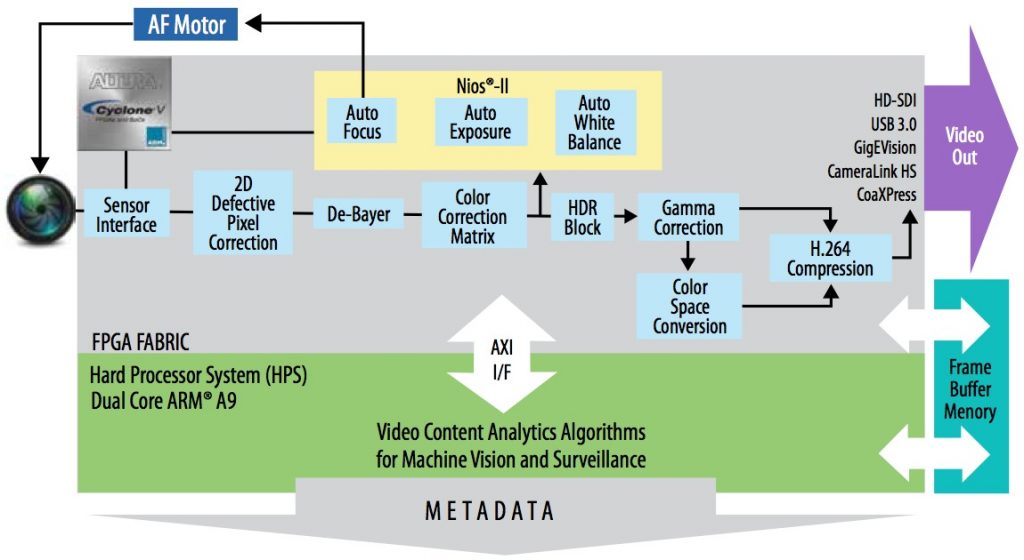

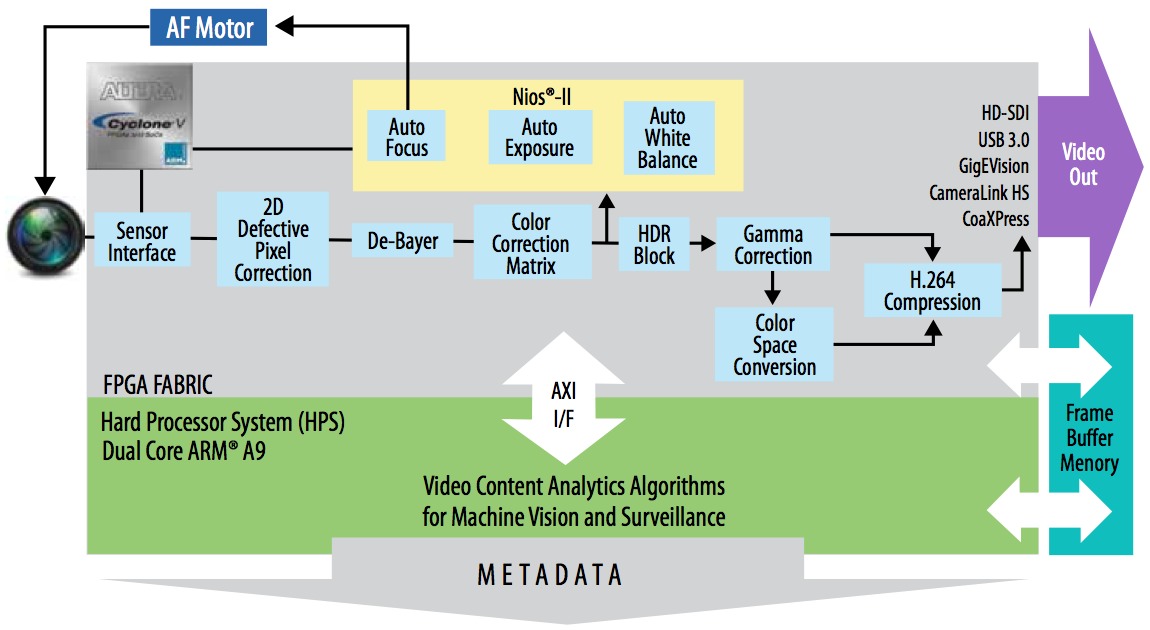

Altera Cyclone® V SoC showing Implementation of ISP Pipeline and Video Analytics

FPGAs Deliver High-performance Image Signal Processing at Significantly Reduced Cost

Image sensor resolutions have been steadily increasing and are trending from 1 megapixel to 5 megapixels and beyond. Data out of the sensors may have a bit depth of 20 bits per pixel or more. Full HD (1920 x 1080) display resolution, refreshed at 60 frames per second, is becoming standard. High (or Wide) Dynamic Range (HDR/WDR) is required for many surveillance applications, especially those that feed video analytics. Sensor manufacturers often implement HDR/WDR with schemes that combine multiple exposures in the sensor to create one output frame (e.g. a particular Aptina HDR sensor internally uses 3 exposures per frame). In such a case, to deliver a display frame rate of 60 fps, the image signal processing pipeline actually needs to process 60 x 3 = 180 frames per second. This combination produces a very large quantity of data that must be processed in near-real-time to avoid latency (delay) between the physical scene and the processed image. For example, at 20 bits per pixel we have:

(1920 x 1080) pixels x 20 bits/pixel x 180 frames/sec ≈ 7.46 Gbits/sec of data, i.e. more than 7 Gbits/sec
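The arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the calculation in the text; the function name `raw_data_rate_bps` and its parameter names are illustrative and do not come from any Altera tool or library.

```python
def raw_data_rate_bps(width, height, bits_per_pixel, output_fps, exposures_per_frame=1):
    """Raw bits per second that the ISP pipeline has to ingest from the sensor."""
    # Each output frame is built from several sensor exposures in HDR mode.
    frames_per_second = output_fps * exposures_per_frame
    return width * height * bits_per_pixel * frames_per_second


# Figures from the text: Full HD, 20 bits/pixel, 60 fps output, 3 exposures per frame.
rate = raw_data_rate_bps(1920, 1080, 20, 60, exposures_per_frame=3)
print(f"{rate / 1e9:.2f} Gbit/s")  # prints "7.46 Gbit/s"
```

Setting `exposures_per_frame=1` gives the non-HDR case, which is one third of this figure.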

Processing 1080p60 HDR with a traditional Digital Signal Processor requires a very high-performance, and correspondingly expensive, DSP. The same image signal processing performance can be achieved in an FPGA, such as an Altera MAX® 10 or Cyclone® IV, at a fraction of the cost. Depending on the implementation, a 1080p60 HDR pipeline in an FPGA can cost less than two-thirds, and in some cases less than half, of an equivalent DSP implementation for the same image quality.

The programmability of the FPGA fabric offers further flexibility: camera manufacturers can change the ISP blocks to add or remove functionality and to tune quality and performance, which lets them implement a wide product line on a relatively small set of boards.

FPGAs Enable Flexible High Efficiency Video Compression

With the trend towards IP (Internet Protocol) cameras, Ethernet is being adopted as the interface of choice for video transport, creating substantial savings over coaxial cabling. Video over Ethernet does require H.264/H.265-type compression, which in the past required large FPGAs, on the order of 70K logic elements (LEs). However, newer high-efficiency compression algorithms can be implemented in half that LE count. This, combined with a lower total integrated cost compared to DSPs, makes FPGAs a solid choice for video compression. Furthermore, it is a simple matter to implement one or more Ethernet Media Access Controllers (MACs) within the FPGA, so that video over Ethernet can be driven straight out of the FPGA with the addition of only an external PHY.

The programmability of the FPGA offers additional flexibility: camera manufacturers can customize the compression block to implement only the compression profile required for a particular application, or reprogram the FPGA with a different compression profile when needed. Unlike ASSPs, which may offer a choice of only a few compression profiles, an FPGA can be programmed with any compression profile that fits within the size of the chosen device.

FPGAs Provide the Performance and Flexibility for Real-Time Transcoding

Video transport protocol translation is often required between the source and destination equipment. A very large amount of video data must be reformatted in near-real-time when converting (transcoding) from one transport protocol to another, such as between Camera Link, GigE Vision, DVI, SDI, HD-SDI, 3G-SDI, USB 3.0 and CoaXPress. This is more cost-effective in a parallel processing device like an FPGA, and it can often be integrated within the camera on the same FPGA that is used for ISP. The programmability of the FPGA also lets camera manufacturers reprogram different transport interfaces onto the same board as needed, reducing the total number of boards required to support a broad product line.

High-speed serial video transport protocols like HD-SDI (1.485 Gb/s) are evolving to 3G-SDI (2.97 Gb/s) and CoaXPress (6.25 Gb/s). These protocols can use the transceivers already built into certain classes of FPGAs, allowing image signal processing, HDR and high-speed video transport to be integrated on a single chip.
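As a rough illustration of how the link rates quoted above compare with a video payload, the sketch below lists which of the three links has a nominal line rate at or above a given payload rate. It is a simplification under stated assumptions: it uses only the nominal rates quoted in the text, it ignores blanking intervals and any line-coding or protocol overhead, and the 16 bits-per-pixel payload is a hypothetical figure chosen for the example. The names `LINK_RATES_GBPS` and `links_with_headroom` are likewise illustrative.

```python
# Nominal line rates quoted in the text, in Gbit/s.
LINK_RATES_GBPS = {
    "HD-SDI": 1.485,
    "3G-SDI": 2.97,
    "CoaXPress": 6.25,
}


def links_with_headroom(payload_gbps):
    """Names of links whose nominal rate is at least payload_gbps, slowest first.

    Overhead (blanking, line coding, protocol framing) is NOT modeled here.
    """
    fits = [(rate, name) for name, rate in LINK_RATES_GBPS.items() if rate >= payload_gbps]
    return [name for rate, name in sorted(fits)]


# Hypothetical payload: 1920 x 1080 pixels, 60 fps, 16 bits per pixel after ISP.
payload = 1920 * 1080 * 60 * 16 / 1e9  # about 1.99 Gbit/s
print(links_with_headroom(payload))  # prints ['3G-SDI', 'CoaXPress']
```

A real design would size the link against the actual on-wire format of each standard rather than the raw payload.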

SoC FPGAs Provide the Processing Power to Implement Video Content Analysis

Video Content Analytics (VCA) is a compute-intensive application that involves analyzing real-time changes in macro image blocks such as people, objects, license plates and virtual trip wires in a video stream. The blocks must be identified, their behavior tracked, and decisions made about either their content (e.g. reading a number plate) or their intent as threatening or non-threatening (e.g. an object left behind in a crowded place, which may contain explosives). VCA is also used for applications like people counting, which may serve statistical purposes such as recording trade show attendance, or crowd control. For VCA to be effective in its ultimate goals of preserving safety and security, analysis must happen in real time. This requires both high computing power and low latency between the occurrence of events and their analysis.
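To make the virtual trip wire idea concrete, here is a minimal sketch of one way to count crossings once an object has been identified and tracked. It assumes an upstream tracker already supplies one centroid per frame as `(x, y)` pixel coordinates, and it treats the wire as an infinite line through two points. The function names are hypothetical and this is not the method of any particular VCA product.

```python
def side_of_line(point, line_start, line_end):
    """Sign of the 2-D cross product: positive on one side of the wire,
    negative on the other, zero exactly on it."""
    (px, py), (ax, ay), (bx, by) = point, line_start, line_end
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def count_crossings(track, line_start, line_end):
    """Count how often a tracked centroid moves from one side of the wire to the other."""
    crossings = 0
    previous = None
    for point in track:
        side = side_of_line(point, line_start, line_end)
        if side == 0:
            continue  # exactly on the wire: wait for the next frame to decide
        if previous is not None and (side > 0) != (previous > 0):
            crossings += 1
        previous = side
    return crossings


# Horizontal wire at y = 5; the object crosses it going down-frame, then comes back.
track = [(2, 1), (2, 3), (2, 6), (2, 8), (3, 4)]
print(count_crossings(track, (0, 5), (10, 5)))  # prints 2
```

People counting can be built on the same primitive by summing crossings over all tracked objects, with the sign change direction distinguishing entries from exits.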

VCA is an area where the combined power of FPGA fabric and a Hard Processor System (HPS), such as the dual-core ARM Cortex-A9 in Altera Cyclone V SoC FPGAs, offers performance and integration benefits not otherwise realizable in a single chip. VCA is a relatively new field in machine vision; up to now it has mostly been implemented as a two-chip solution, with the ISP portion in a DSP or FPGA and the VCA algorithms running on a separate processor. System-on-Chip (SoC) FPGAs, which incorporate one or more hard processor systems along with FPGA fabric on a single chip, now make it possible for a single device to run both ISP and VCA applications at the same time. In the Altera Cyclone V SoC FPGAs, ISP data from the FPGA fabric is available to the HPS through a very low-latency on-chip interconnect for processor-intensive video analytics computations, removing the need for separate ISP and VCA processing chips. The dual ARM Cortex-A9 processors not only provide enough computing power, but also offer a programmer-friendly and OS-friendly environment for rapid development and deployment of VCA applications.