Sreepada Hegade, Senior Manager for ML Software and Solutions at Lattice Semiconductor, presents the “Flexible Machine Learning Solutions with Lattice FPGAs” tutorial at the May 2021 Embedded Vision Summit.

The ability to perform neural network inference in resource-constrained devices is fueling the growth of machine learning at the edge. But application solutions require more than just inference: they also incorporate aggregation and pre-processing of input data, and post-processing of inference results. In addition, new neural network topologies are emerging rapidly. This diversity of functionality and the quick evolution of topologies mean that processing engines must have the flexibility to execute different types of workloads. I/O flexibility is also key, because it lets system developers choose the best sensor and connectivity options for their applications.

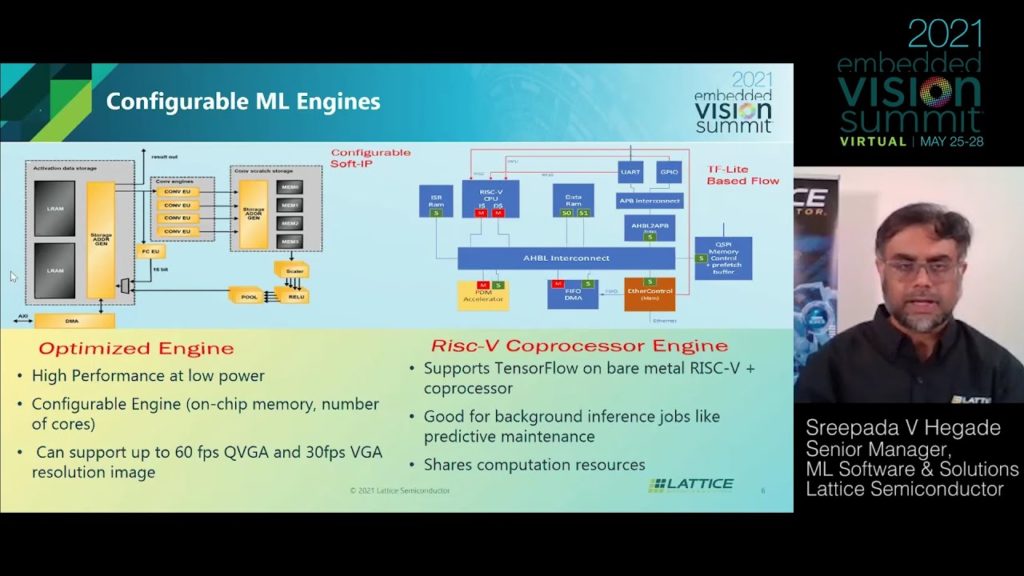

In this talk, Hegade explores how the configurable nature of Lattice FPGAs and the soft cores implemented on them allow for quick adoption of emerging neural network topologies, efficient execution of pre- and post-processing functions, and flexible I/O interfacing. He also shows how his company optimizes network topologies and its compiler to get the best out of FPGAs.

See here for a PDF of the slides.