Sakyasingha Dasgupta, the founder and CEO of EdgeCortix, presents the “Dynamic Neural Accelerator and MERA Compiler for Low-latency and Energy-efficient Inference at the Edge” tutorial at the May 2021 Embedded Vision Summit.

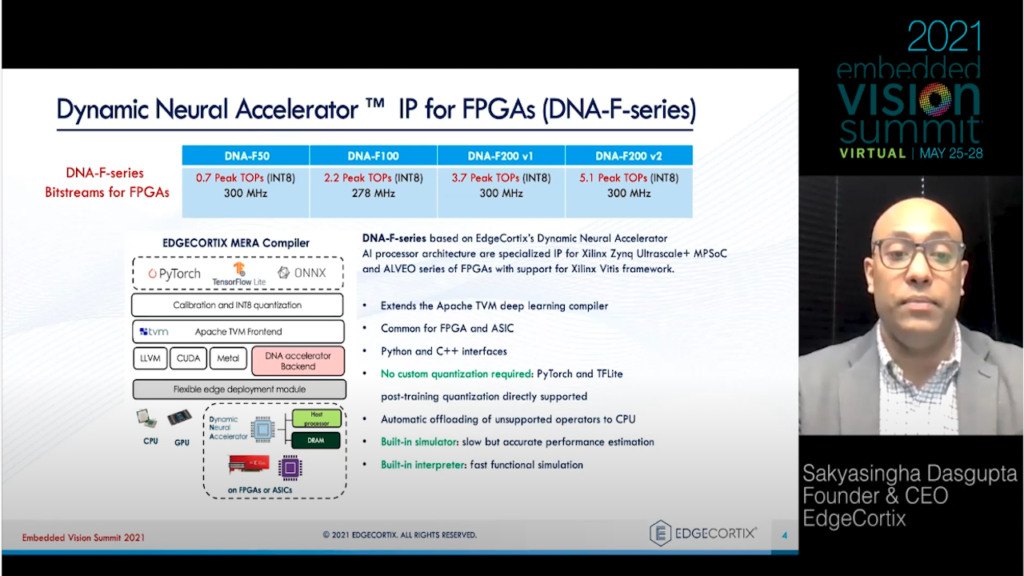

Achieving high performance and power efficiency for machine learning inference at the edge requires maintaining high chip utilization, even with a batch size of one, while processing high-resolution image data. In this tutorial session, Dasgupta shows how EdgeCortix’s reconfigurable Dynamic Neural Accelerator (DNA) AI processor architecture, coupled with the company’s MERA compiler and software stack, enables developers to seamlessly execute deep neural networks written in PyTorch and TensorFlow Lite. This combination maintains high chip utilization, power efficiency and low latency regardless of the type of convolutional neural network.

Dasgupta walks through examples of implementing deep neural networks for vision applications on the DNA architecture. He starts from standard machine learning frameworks and then benchmarks performance using both the built-in simulator and FPGA hardware.