The Internet of Things (IoT), the interconnection of uniquely identifiable embedded computing devices, will increasingly be a key vision processing opportunity.

By Brian Dipert

Editor-in-Chief, Embedded Vision Alliance

The "Internet of Things" (abbreviated IoT), one of the hottest topics in technology today, is widely anticipated to be a notable driver of both semiconductor and software demand in coming years. As Wikipedia notes:

The Internet of Things (IoT) is the interconnection of uniquely identifiable embedded computing devices within the existing Internet infrastructure. Typically, IoT is expected to offer advanced connectivity of devices, systems, and services that goes beyond machine-to-machine communications (M2M) and covers a variety of protocols, domains, and applications. The interconnection of these embedded devices (including smart objects) is expected to usher in automation in nearly all fields, while also enabling advanced applications like a Smart Grid.

Things, in the IoT, can refer to a wide variety of devices such as heart monitoring implants, biochip transponders on farm animals, automobiles with built-in sensors, or field operation devices that assist fire-fighters in search and rescue. Current market examples include smart thermostat systems and washer/dryers that utilize wifi for remote monitoring.

According to Gartner, there will be nearly 26 billion devices on the Internet of Things by 2020. ABI Research estimates that more than 30 billion devices will be wirelessly connected to the Internet of Things (Internet of Everything) by 2020. As per a recent survey and study done by Pew Research Internet Project, a large majority of the technology experts and engaged Internet users who responded—83 percent—agreed with the notion that the Internet/Cloud of Things, embedded and wearable computing (and the corresponding dynamic systems) will have widespread and beneficial effects by 2025. It is, as such, clear that the IoT will consist of a very large number of devices being connected to the Internet.

Key to an understanding of the IoT opportunity is its machine-to-machine aspect. Content generated at the source end of the IoT communication link is created by a computer or other machine in conjunction with various sensor technologies, not by a human. Similarly, while people may be on the IoT data distribution list, the information is primarily received, interpreted and acted on by other machines. One noteworthy example of a sensor technology is the image sensor (i.e., the camera), which, paired with a processor, is increasingly employed in a diverse range of applications.

Vision technology is enabling a wide range of products, both new and enhanced, that are more intelligent and responsive than before, and thus more valuable to users. The Embedded Vision Alliance uses the term “embedded vision” to refer to this growing use of practical computer vision technology in embedded systems, mobile devices, PCs, and the cloud. And as trendsetting product case studies suggest, the embedded vision application ecosystem will increasingly expand to include a variety of IoT opportunities.

Video Surveillance Gets Automated, Diversified

Hear the word "camera" and the word "surveillance" might quickly come to mind, followed by the word "security". Indeed, video security systems are becoming increasingly automated, harnessing the tireless analysis that computer vision processing can deliver as an antidote to fundamental human shortcomings such as distraction and fatigue. As automated security systems' silicon and software building blocks become increasingly performance-rich, power consumption-stingy and low-priced, they're broadening their market reach beyond military, airport, and other niches into mainstream business and consumer adoption.

For more perspective on this broadening trend, see the Embedded Vision Alliance interview with Cernium Corporation, a company that expanded beyond traditional business surveillance markets into the consumer space with its Archerfish product.

Where does the machine-to-machine IoT angle to automated surveillance come in? Ideally, a computer vision system would be able to issue alerts by itself, including directly to other machines, when it sensed the need to do so. Until recently, though, the "false positive" rate of such systems was too high to let them contact law enforcement authorities directly. A human intermediary was historically needed to screen the automated warnings and decide whether the captured image showed an intruder or, say, an animal, a tree swaying in the wind, or a gust-propelled cluster of leaves.
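The screening problem can be made concrete with a minimal alert-gate sketch. It assumes a per-frame object detector that emits labeled detections with confidence scores. The `Detection` type, the label strings and every threshold below are hypothetical and are not taken from any product mentioned here. Plausible but unconfirmed detections go to a human screener. Only a confident "person" detection that persists across several frames is escalated directly to other machines.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # hypothetical class label, e.g. "person", "animal", "foliage"
    confidence: float  # detector score in [0, 1]


class AlertGate:
    """Hypothetical policy: escalate automatically only when a person is
    detected with high confidence in `min_frames` consecutive frames."""

    def __init__(self, alert_conf=0.8, review_conf=0.5, min_frames=5):
        self.alert_conf = alert_conf
        self.review_conf = review_conf
        self.min_frames = min_frames
        self.history = deque(maxlen=min_frames)  # per-frame "confident person" flags

    def update(self, detections):
        """Return 'alert', 'review' or 'ignore' for the current frame."""
        best = max((d.confidence for d in detections if d.label == "person"),
                   default=0.0)
        self.history.append(best >= self.alert_conf)
        if len(self.history) == self.min_frames and all(self.history):
            return "alert"   # confident and persistent: notify other machines directly
        if best >= self.review_conf:
            return "review"  # plausible but unconfirmed: route to a human screener
        return "ignore"      # no person-like detection worth reporting
```

Requiring several consecutive confident frames adds a little latency. In exchange, a single misclassified frame of swaying branches or blowing leaves cannot trigger an automated alert.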

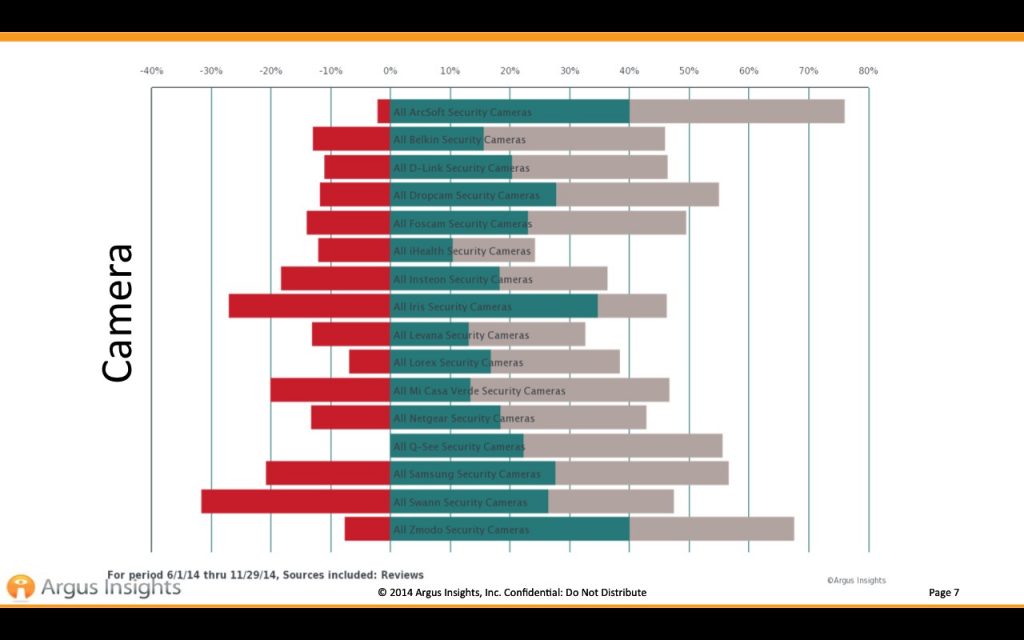

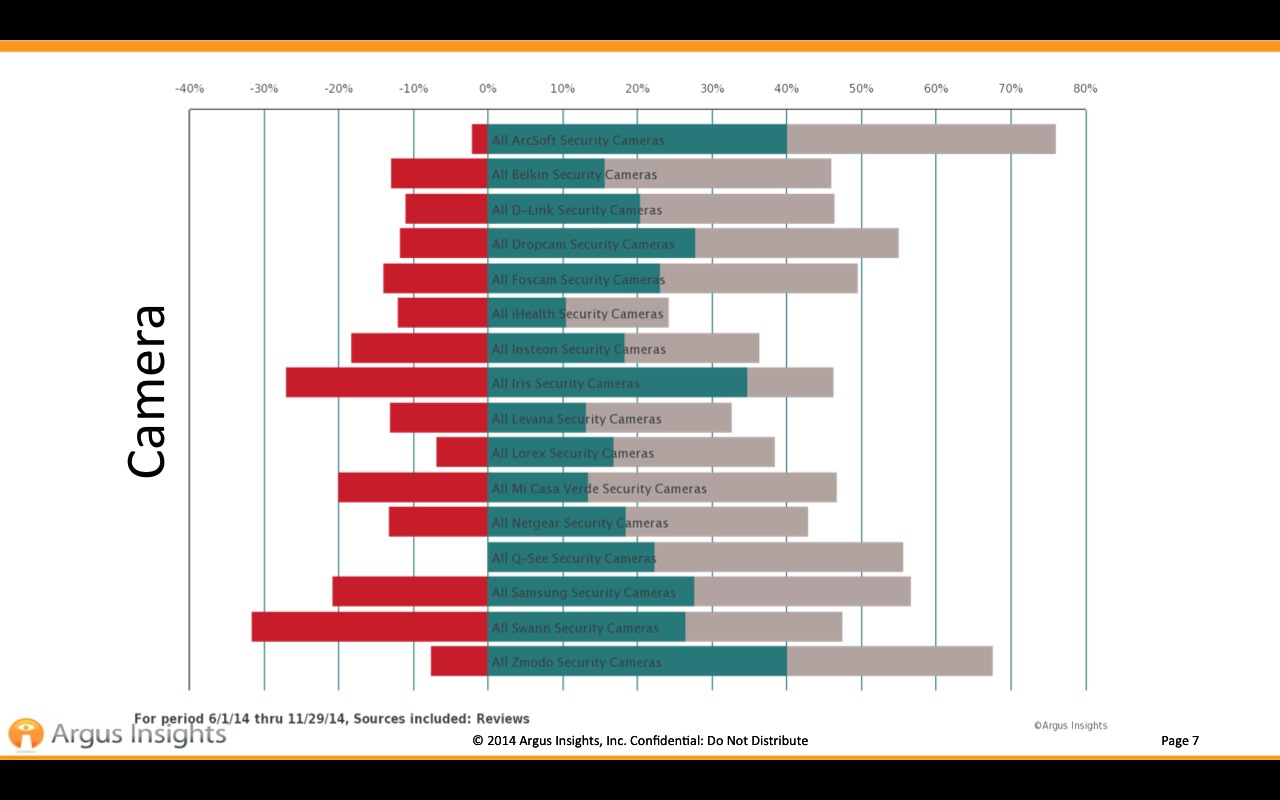

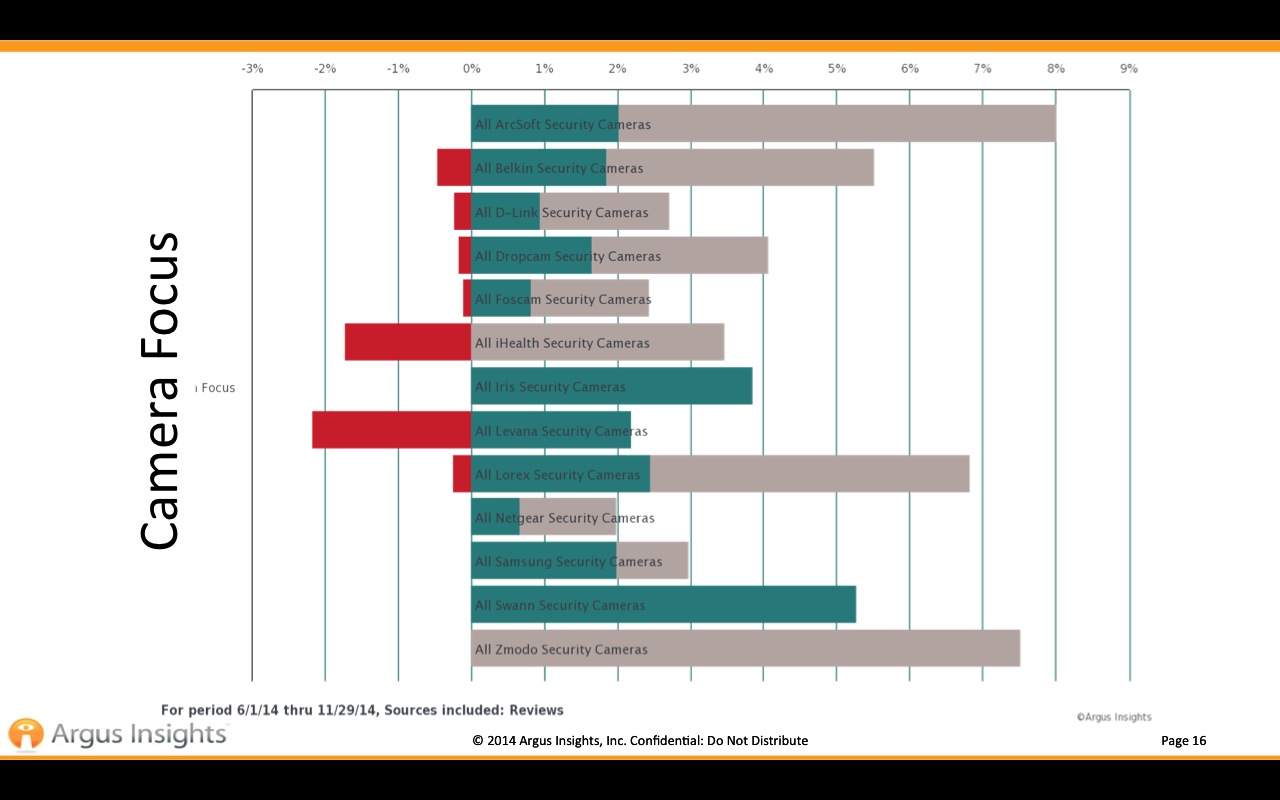

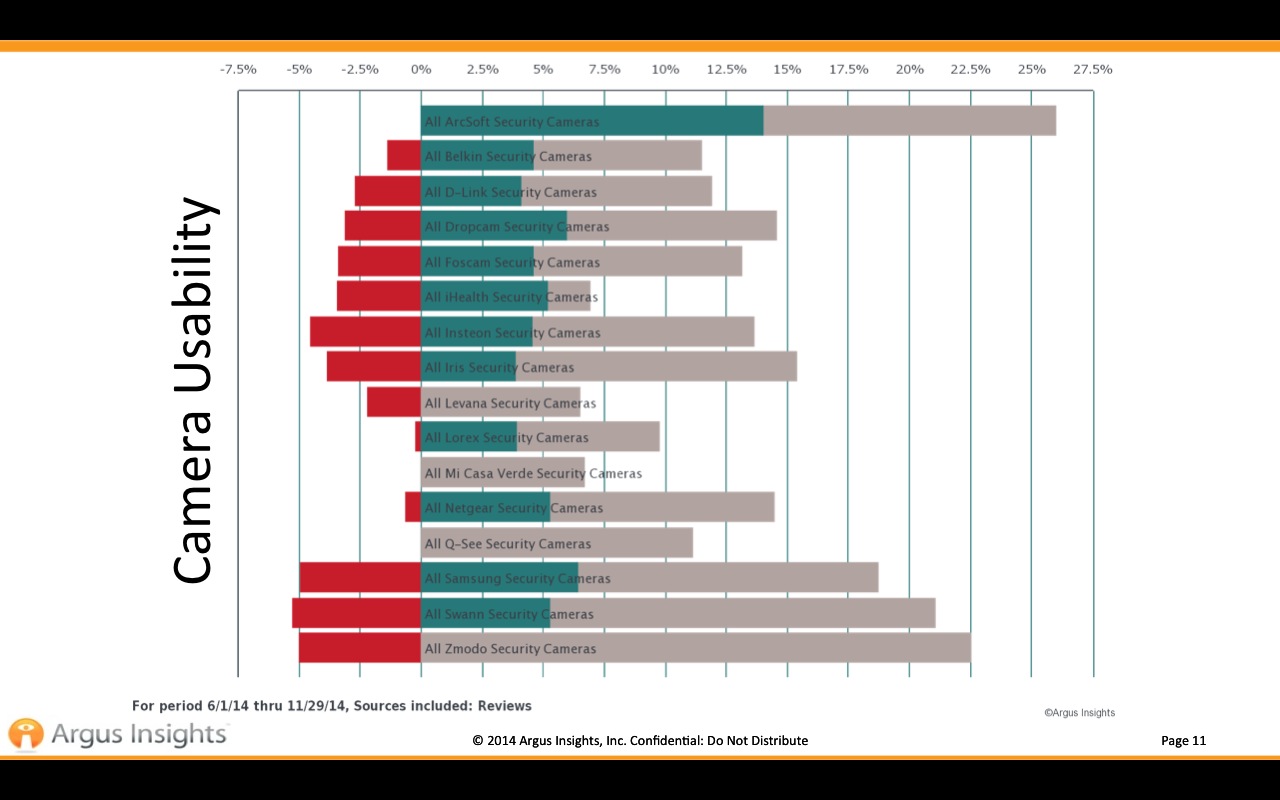

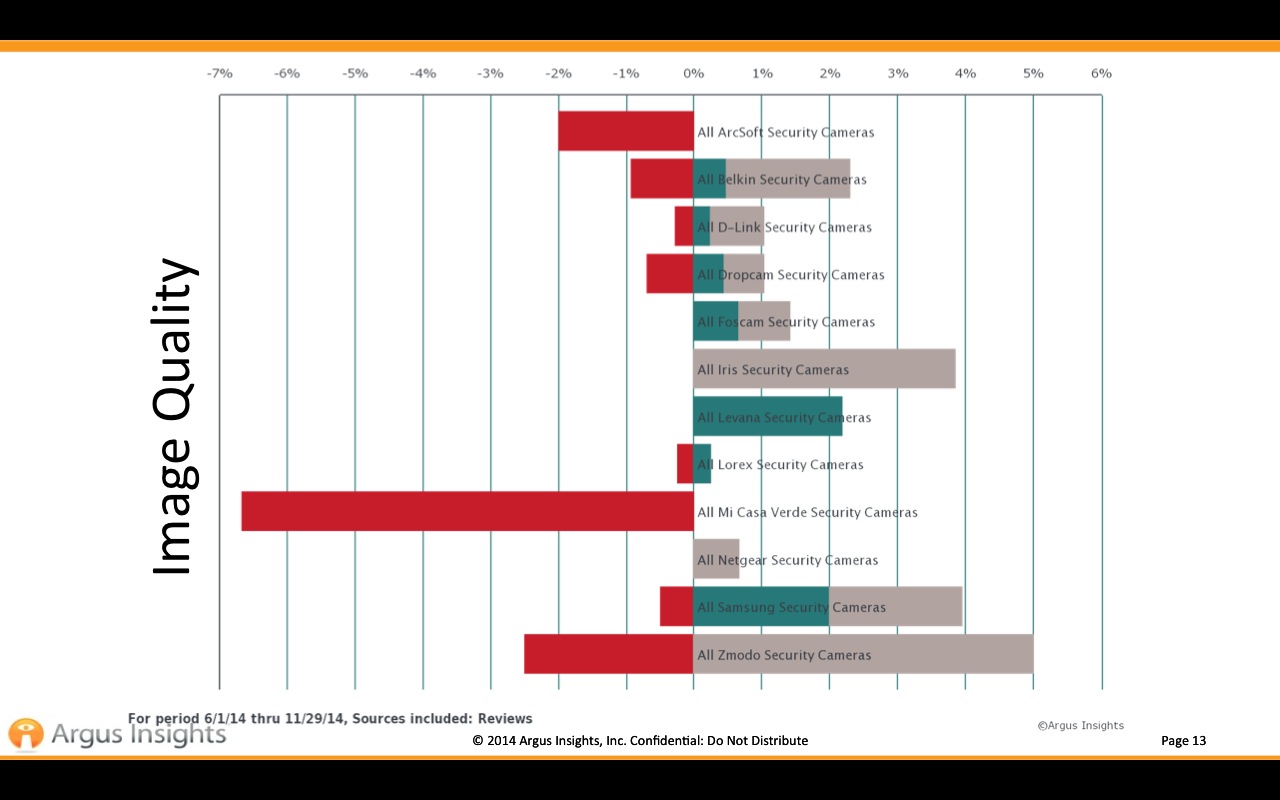

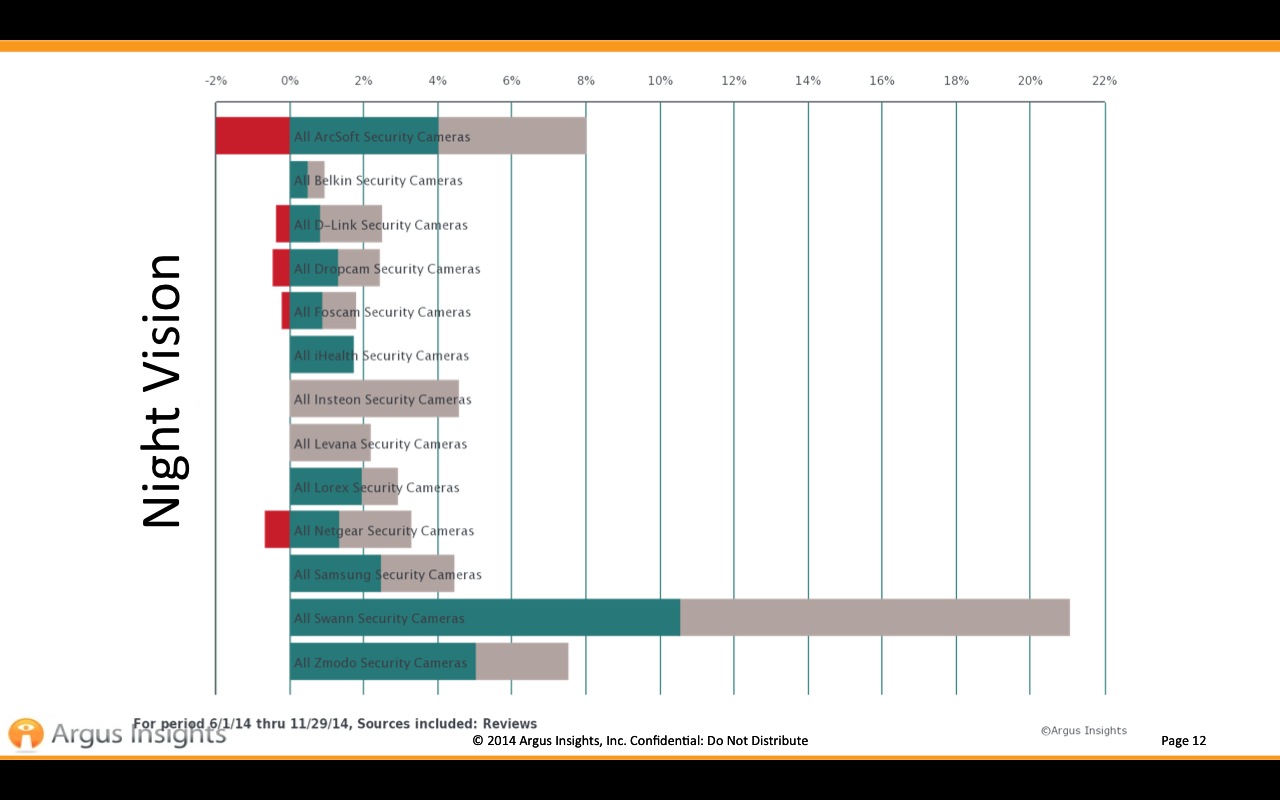

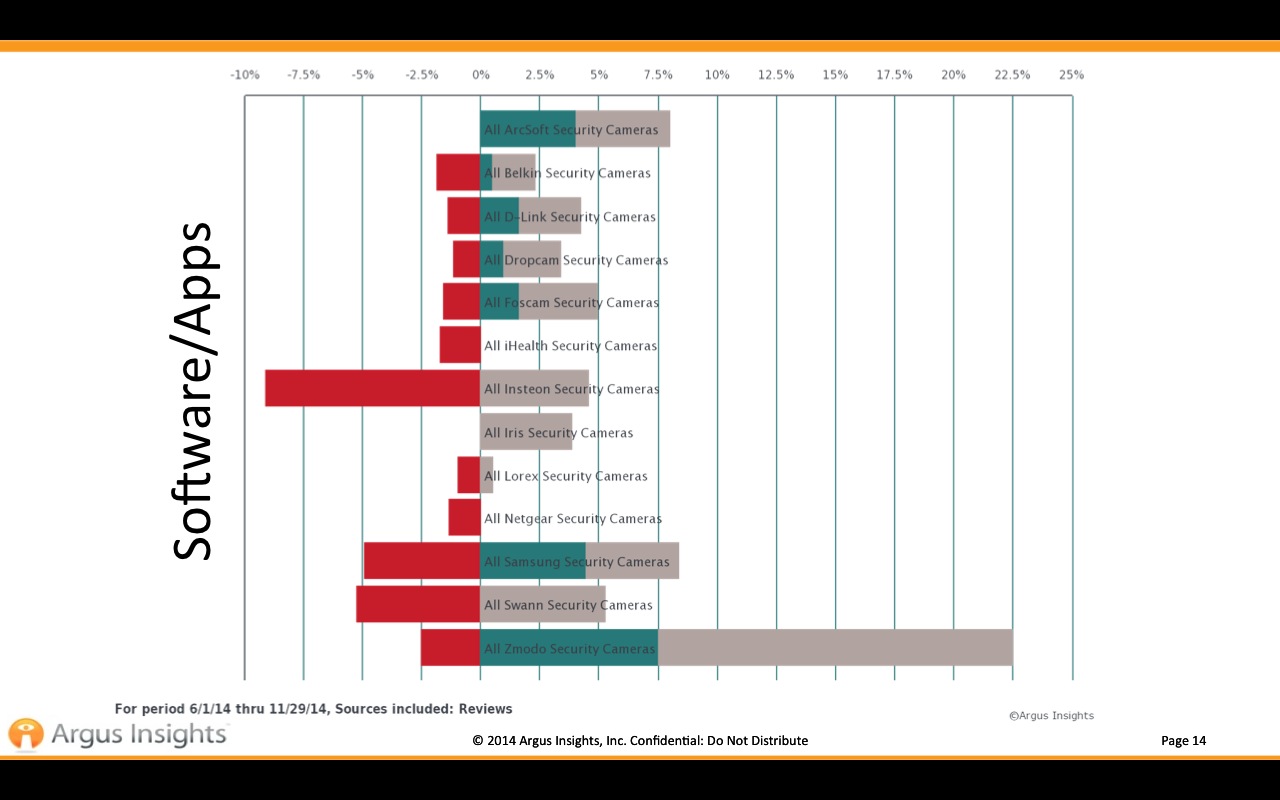

However, as analytics algorithms grow more robust, in the process more effectively accounting for non-ideal lighting and other environmental conditions, they're increasingly able to discern between disconcerting and of-no-concern movement, and (via face detection) between humans and other objects. And increasingly, they'll even be able to tell whether the human entering your premises is a child you didn't expect to be there at that particular time, versus a crook. Image quality and various analysis attributes are already becoming key differentiators among consumer surveillance manufacturers and products, as late-2014 social media feedback analysis from Argus Insights indicates (Figure 1).

Figure 1. Social media feedback from consumers indicates clear differentiation between various surveillance systems intended for the home, with respect to image quality, automated analysis feature set robustness and accuracy, and other vision processing-related metrics.

For more information on this survey data, see the presentation "Consumer Adoption of Embedded Vision in the Internet of Things: What Works and What Frustrates" given by Argus Insights founder and CEO John Feland.

Consider, too, products such as the DoorBot, a crowdfunded device launched two years ago and more recently renamed the Ring Video Doorbell. As its name implies, it enables you (via your home's Wi-Fi network) to see and interact with someone at the door. Recent feature additions deliver enhanced dim-light performance (via infrared support). They also turn DoorBot into a more general-purpose consumer surveillance camera, via motion detection that doesn't require a doorbell press as an alert trigger. As with many first-generation products, reviews suggest that DoorBot undershoots its potential, but further refinement and evolution will likely improve on the initial concept. And it's not a stretch to imagine that in the near future, face recognition capabilities will enable such video systems to proactively tell you which family member or friend is at the door, before you even look at your computer, tablet or smartphone.
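Motion-triggered alerts like this one can be approximated with simple frame differencing. The sketch below is not DoorBot's algorithm, which isn't documented here. It assumes grayscale frames delivered as equal-sized lists of rows of 0-255 integers. Both thresholds are hypothetical tuning values.

```python
def motion_detected(prev, curr, pixel_thresh=25, area_frac=0.01):
    """Return True if enough pixels changed between two grayscale frames.

    prev, curr: equal-sized lists of rows of 0-255 intensity values.
    pixel_thresh: minimum absolute intensity change for a pixel to count.
    area_frac: fraction of all pixels that must change to report motion.
    """
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have identical dimensions")
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_thresh:
                changed += 1
    return total > 0 and changed / total >= area_frac


# Usage: a 10x10 static scene in which a 2x2 bright object appears.
background = [[10] * 10 for _ in range(10)]
frame = [row[:] for row in background]
for y in (4, 5):
    for x in (4, 5):
        frame[y][x] = 200
print(motion_detected(background, frame))  # True: 4 of 100 pixels changed
```

The two thresholds do different jobs:

* The per-pixel threshold suppresses sensor noise.
* The area fraction keeps a handful of flickering pixels from triggering an alert.

A real product would also have to cope with global lighting changes, such as the switch to infrared at dusk.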

Safety Enhancements

Surveillance means more than just improved security, of course; it also can deliver enhanced safety. Consider, for example, an Alzheimer's patient. Both comfort and cost argue for keeping such a person at home as long as possible. However, the possibility that such a person might wander out the front door at any time is very real. Consider, too, the visitors: arriving and departing friends and family members, healthcare assistants, etc. They may forget to disable a rudimentary open-door sensor-based system (if they even know it's present, that is). The resulting "false positive" alarms are more than just annoying; they're expensive.

A better approach is to use facial analysis algorithms to determine whether the person leaving the house is the elderly patient who shouldn't be doing so. And on the opposite end of the spectrum, automation is equally useful in monitoring the youth in your household. So-called "nanny cams" are increasingly popular. They reduce the need for a parent to be in the child's room, but they still require the parent to regularly watch the monitor at the other end of the video chain. Motion detection, boundary-crossing trip detection and other now-commonplace video analytics techniques can instead automate the child-monitoring process. They can alert an adult to a baby climbing out of its crib, or to some other pending calamity.
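Boundary-crossing ("tripwire") detection reduces to a small piece of geometry once an object tracker supplies a position for each frame. This sketch assumes such a tracker exists. It also assumes the crib rail has been marked as a line segment in image coordinates. The function names are hypothetical. The sketch reports the first frame in which the tracked point's movement crosses that segment.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def side(a: Point, b: Point, p: Point) -> float:
    """Cross product: >0 if p is left of the line a->b, <0 if right, 0 if on it."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crossed(a: Point, b: Point, prev: Point, curr: Point) -> bool:
    """True if the straight move prev->curr strictly crosses segment a-b."""
    return (side(a, b, prev) * side(a, b, curr) < 0 and
            side(prev, curr, a) * side(prev, curr, b) < 0)


def first_crossing(a: Point, b: Point, track: List[Point]) -> Optional[int]:
    """Index of the first frame whose movement crosses a-b, or None."""
    last_i = None  # index of the last point strictly off the wire's line
    for i, p in enumerate(track):
        if side(a, b, p) == 0:
            continue  # exactly on the line: wait until the point commits to a side
        if last_i is not None and crossed(a, b, track[last_i], p):
            return i
        last_i = i
    return None


# Usage: the crib rail runs along y = 5 from x = 0 to x = 10.
rail = ((0.0, 5.0), (10.0, 5.0))
baby = [(5.0, 2.0), (5.0, 4.0), (5.0, 6.0)]
print(first_crossing(*rail, baby))  # 2
```

Points lying exactly on the rail's line are skipped. As a result, a track that pauses on the rail and then continues is still caught, while one that merely touches the rail and returns is not.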

This next safety example spans all ages. Computer vision-enabled automated swimming pool monitoring systems, such as the ones developed by Poseidon Technologies, use machine-generated audible and visual alarms. These alarms alert lifeguards and fellow pool occupants alike to a swimmer who's in danger of drowning (Figure 2). It's a common misconception that those struggling in the water are able to splash, shout and otherwise alert others to their plight; in fact, most victims slip under the water's surface with little to no detectable struggle. But as they sink to the bottom of the pool, video cameras in combination with computer vision algorithms can discern their presence and trigger an appropriate automated response.
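Poseidon's detection method isn't described here, so what follows is only a hypothetical sketch of the kind of rule the paragraph implies. It assumes an upstream tracker that reports, for each swimmer and each frame:

* a timestamp,
* a position in meters, and
* whether the swimmer appears submerged.

The rule raises an alarm when a swimmer stays submerged and nearly motionless for too long. The class name and the 10-second and 0.3-meter defaults are placeholders, not safety-validated values.

```python
import math


class DrowningMonitor:
    """Hypothetical rule: alarm when a tracked swimmer stays submerged and
    within `still_radius_m` of one spot for at least `max_still_s` seconds."""

    def __init__(self, max_still_s=10.0, still_radius_m=0.3):
        self.max_still_s = max_still_s
        self.still_radius_m = still_radius_m
        self.anchor = {}  # swimmer_id -> (start_time, (x, y)) of current still period

    def update(self, swimmer_id, t, pos, submerged):
        """Return True if this swimmer should trigger an alarm at time t."""
        if not submerged:
            self.anchor.pop(swimmer_id, None)  # at the surface: reset the timer
            return False
        start = self.anchor.get(swimmer_id)
        if start is None or math.dist(start[1], pos) > self.still_radius_m:
            self.anchor[swimmer_id] = (t, pos)  # newly submerged or moved: restart
            return False
        return t - start[0] >= self.max_still_s
```

Stillness is measured against the position where the current timer started. A swimmer who moves more than the radius from that spot restarts the clock, and surfacing clears it entirely.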

Figure 2. Automated vision processing enables the detection of a swimmer in peril, even when overlooked by lifeguards and other swimming pool occupants.

Other Opportunities, and Developer Resources

Automated scene analysis can also be more pedestrian in its purpose. Who among you hasn't been annoyed when, while sitting in a room, the lights automatically turn off because motion wasn't detected? Raise your hands if, like me, you've needed to periodically raise (and wave) your hands to tell the motion-sensing system to turn the lights back on. Isn't there a better way? There is, in fact: use one or more cameras, in combination with face detection algorithms, to discern that people are present, even if they're still, and keep them from being "in the dark."
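The occupancy logic is simple once a face detector is available. Either motion or a detected face counts as evidence of presence, and the lights switch off only after neither has been seen for a timeout. This sketch assumes that each sample provides a motion flag and a face count from some detector (not shown). The class name and timeout value are hypothetical.

```python
class OccupancyLight:
    """Keep lights on while motion or at least one face was seen within `timeout_s`."""

    def __init__(self, timeout_s=600.0):
        self.timeout_s = timeout_s
        self.last_presence = None  # time (seconds) of the most recent motion or face

    def update(self, t, motion, faces_detected):
        """Return True if the lights should be on at time t (seconds)."""
        if motion or faces_detected > 0:
            self.last_presence = t
        return self.last_presence is not None and t - self.last_presence < self.timeout_s


# Usage: a reader sits still after t = 0; only the face detector notices them at t = 70.
light = OccupancyLight(timeout_s=60.0)
for t, motion, faces in [(0, True, 0), (50, False, 0), (70, False, 1), (125, False, 0), (131, False, 0)]:
    print(t, light.update(t, motion, faces))
```

With motion sensing alone, the lights in this trace would go off at t = 60. The face seen at t = 70 keeps them on for another full timeout period.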

Vision processing doesn't necessarily need to involve humans as the subjects, of course. Any of you who've put out the trash for periodic pickup know that sometimes the bins are nearly empty, while other times they're overflowing. Businesses have the same feast-or-famine issues with the waste they create. Wouldn't it be better if the trash bin could automatically alert the sanitation company to its near-full status, whenever that occurred, for an only-as-needed truck roll? That's what a company called Compology thought, and that's what its intelligent monitoring system, which "retrofits to any dumpster, can or tank" (quoting the manufacturer website) accomplishes.
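Compology's implementation isn't described here, but the only-as-needed behavior itself can be sketched. Assume some vision pipeline (not shown) estimates the container's fill fraction from each image. The sketch requests a pickup once, when that estimate crosses a high threshold. It re-arms only after the container has been emptied. The class name and both thresholds are hypothetical.

```python
class PickupRequester:
    """Request one pickup per fill cycle, using two thresholds (hysteresis)."""

    def __init__(self, request_at=0.85, rearm_below=0.2):
        self.request_at = request_at    # fill fraction that triggers a request
        self.rearm_below = rearm_below  # fill fraction that signals "emptied"
        self.requested = False

    def update(self, fill_fraction):
        """Return True exactly when a new pickup request should be sent."""
        if self.requested:
            if fill_fraction < self.rearm_below:
                self.requested = False  # container was emptied; re-arm
            return False
        if fill_fraction >= self.request_at:
            self.requested = True
            return True
        return False


# Usage: readings over two fill cycles.
bin_monitor = PickupRequester()
print([bin_monitor.update(f) for f in [0.3, 0.6, 0.86, 0.9, 0.95, 0.1, 0.5, 0.88]])
```

The gap between the two thresholds keeps a noisy fill estimate near 85% from generating repeated requests (and repeated truck rolls) for the same load.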

Farmers can also harness practical computer vision to, for example, automatically alert them to the presence of sick poultry and cattle, by monitoring factors like movement (or lack thereof), body position and gait. And those same factors, along with others such as size, can also tell the farmer when his herd is ready for slaughter. More generally, as IoT devices become increasingly networked to each other and to the greater ecosystem, the data supplied by their image sensors and analyzed by their vision processors will be used in ways that we can't even yet imagine.
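The sketch below illustrates only the movement factor; gait and body-position analysis would need pose estimation, which is beyond a short sketch. It assumes an upstream tracker that supplies each animal's positions over the same time window. It flags animals that moved far less than the herd's median. The function names and the 25% cutoff are assumptions, not an established veterinary criterion.

```python
import math
from statistics import median


def path_length(track):
    """Total distance traveled along a list of (x, y) positions."""
    return sum(math.dist(p, q) for p, q in zip(track, track[1:]))


def flag_inactive(tracks, frac=0.25):
    """Return sorted ids of animals that moved less than `frac` of the herd median.

    tracks: dict mapping animal id -> list of (x, y) positions sampled over
    the same time window.
    """
    lengths = {animal: path_length(track) for animal, track in tracks.items()}
    if not lengths:
        return []
    typical = median(lengths.values())
    return sorted(animal for animal, d in lengths.items() if d < frac * typical)


# Usage: one animal has barely moved compared with the rest of the herd.
herd = {
    "cow1": [(0, 0), (3, 4), (6, 8)],  # path length 10
    "cow2": [(0, 0), (0, 10)],         # path length 10
    "cow3": [(0, 0), (1, 0)],          # path length 1
    "cow4": [(0, 0), (8, 6)],          # path length 10
}
print(flag_inactive(herd))  # ['cow3']
```

The sketch compares each animal with the herd median rather than with a fixed distance. That way, a period when the whole herd is resting doesn't flag every animal at once.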

The opportunity for vision technology to expand the capabilities of IoT devices is part of a much larger trend. Vision processing can add valuable capabilities to existing products, as well as create brand new classes of products. And it can provide significant new markets for hardware, software and semiconductor suppliers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower product creators to transform this potential into reality. The Alliance website at http://www.Embedded-Vision.com has more information on how to incorporate visual intelligence into your system and software products. Resources there include:

* tutorial articles, videos and code downloads;
* a discussion forum staffed by technology experts;
* information about Embedded Vision Summit technical educational conferences.