This blog post was originally published on AImotive’s website. It is reprinted here with the permission of AImotive.

An end-to-end simulation environment that could one day replace real-world validation requires refined, high-fidelity models of every part of the world. aiSim’s open APIs and SDK make the integration of these many elements seamless and performance-efficient, supporting use cases on both sides of the V-model.

Predetermined or spontaneous testing

There are two distinct approaches to testing road scenarios in a simulator.

In most cases, we want to test our automated driving software, which controls the Ego-vehicle, in a very specific, well-defined situation. We set the starting position and speed of each participant, then define the trajectories they should follow, or the actions they should execute when a specific condition is met or a preset time has passed. These scenarios always execute the same way, with nothing left unspecified, so we always know exactly what we are testing. We can then specify strict conditions to evaluate the actions and reactions of the Ego-vehicle, and finally conclude the scenario with a pass or fail result.
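To make the idea of a fully specified scenario concrete, here is a minimal, hypothetical sketch: fixed starting states, a time-based trigger, a stand-in controller in place of the real automated driving software, and a strict pass/fail criterion checked at every step. All names (`Actor`, `Trigger`, `run_scenario`, `lead_stop_scenario`) are illustrative and are not aiSim’s API. The single-lane kinematics, the 8 m/s² braking and the 2 m minimum gap are simplifying assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Actor:
    name: str
    position: float  # longitudinal position along a single lane, in metres
    speed: float     # metres per second


World = Dict[str, Actor]


@dataclass
class Trigger:
    """Runs `action` once, the first time `condition(t, world)` becomes true."""
    condition: Callable[[float, World], bool]
    action: Callable[[World], None]
    fired: bool = False


def run_scenario(actors: List[Actor], triggers: List[Trigger],
                 controllers: Dict[str, Callable[[World, float], None]],
                 criterion: Callable[[World], bool],
                 duration: float = 10.0, dt: float = 0.1) -> str:
    """Step a fully specified scenario with a fixed time step and return a verdict."""
    world: World = {a.name: a for a in actors}
    for step in range(int(round(duration / dt))):
        t = step * dt
        for trig in triggers:                 # scripted, time- or condition-based events
            if not trig.fired and trig.condition(t, world):
                trig.action(world)
                trig.fired = True
        for control in controllers.values():  # the system under test reacts
            control(world, dt)
        for actor in world.values():          # simple kinematic update
            actor.position += actor.speed * dt
        if not criterion(world):              # strict pass/fail condition, every step
            return "fail"
    return "pass"


def gap(world: World) -> float:
    return world["lead"].position - world["ego"].position


def lead_stop_scenario(brake_below_gap: float) -> str:
    """Lead vehicle stops dead at t = 2 s; a stand-in Ego controller brakes at 8 m/s^2."""

    def ego_controller(world: World, dt: float) -> None:
        ego = world["ego"]
        if gap(world) < brake_below_gap:
            ego.speed = max(0.0, ego.speed - 8.0 * dt)

    def stop_lead(world: World) -> None:
        world["lead"].speed = 0.0

    return run_scenario(
        actors=[Actor("ego", 0.0, 20.0), Actor("lead", 40.0, 20.0)],
        triggers=[Trigger(lambda t, w: t >= 2.0, stop_lead)],
        controllers={"ego": ego_controller},
        criterion=lambda w: gap(w) > 2.0,  # keep at least 2 m to the lead vehicle
    )


print(lead_stop_scenario(brake_below_gap=30.0))  # pass
print(lead_stop_scenario(brake_below_gap=10.0))  # fail
```

Because every input is fixed, rerunning either call always yields the same verdict. This repeatability is what makes such scenarios suitable for compliance checks.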

This approach is particularly important for checking compliance with standards such as NCAP, ISO 11270, ISO 15622, ISO 17361 and ISO 17387, as well as upcoming regulations such as GSR and ALKS. aiSim provides an advanced Scenario Editor for easily designing accident-avoidance scenarios, and deterministic simulation execution to ensure correlation with real-world testing.

This approach to scenario testing is widely adopted, and there is also a relatively new standard covering it. Now that OpenSCENARIO 1.0 is publicly available, any automotive simulator developer can choose to support it. If they do, a scenario built with any compliant toolchain can be shared with others and executed in any other simulator that supports the same standard.

Most of the time, especially when a new feature is being developed in automated driving or driver assistance software, we want to be very specific about what we are testing and rely on the method described above. However, once a feature has been well tested in specific scenarios, we scale up testing and aim to prove that the system not only works well in constrained environments, but is also robust enough to handle situations that are not artificially created and are closer to real-world cases. This approach can also be split into two different methods.

Creating scenarios from real-world recordings

If the aim is to create scenarios that are as close to real-world use cases as possible, one option is to hit the road with a vehicle equipped with dedicated sensors that continuously capture the environment and the actions of all participants. When an interesting situation occurs, we can use the recordings and sensor logs to replicate exactly the same scenario in a simulator, recreating whatever happened in the real world. This method is, however, very costly: we need cutting-edge sensors to capture the smallest details of the road, and our teams are forced to drive around for extended periods with the possibility that nothing useful will happen. Even when we manage to collect interesting situations, a large amount of work remains to convert these recordings into virtual scenarios, recreate the locations as they appeared in the recordings, and so on. The process is complex; patent US10482003, “Method and system for modifying a control unit of an autonomous car”, describes an example of such a system in detail.
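One small step of that conversion can be sketched as follows: logged object detections are grouped into time-ordered trajectories per tracked participant, which a scenario could then replay. This is a hypothetical illustration, far simpler than a real pipeline or the patented system cited above. The `Detection` record layout, the map-frame coordinates and the `min_points` noise filter are all assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Detection(NamedTuple):
    timestamp: float  # seconds, on the recording vehicle's clock
    track_id: int     # object ID assigned by the perception/tracking stack
    x: float          # metres, in a fixed map frame
    y: float


Waypoint = Tuple[float, float, float]  # (seconds since scenario start, x, y)


def tracks_to_trajectories(detections: Iterable[Detection],
                           min_points: int = 3) -> Dict[int, List[Waypoint]]:
    """Group logged detections into time-ordered, per-actor trajectories for replay."""
    dets = list(detections)
    if not dets:
        return {}
    t0 = min(d.timestamp for d in dets)  # scenario time starts at the first detection
    by_track: Dict[int, List[Waypoint]] = defaultdict(list)
    for d in dets:
        by_track[d.track_id].append((d.timestamp - t0, d.x, d.y))
    trajectories: Dict[int, List[Waypoint]] = {}
    for track_id, points in by_track.items():
        if len(points) < min_points:  # drop short-lived tracks, likely detection noise
            continue
        trajectories[track_id] = sorted(points)  # log entries may arrive out of order
    return trajectories


log = [
    Detection(100.2, 7, 12.0, 3.5),
    Detection(100.0, 7, 10.0, 3.5),
    Detection(100.1, 7, 11.0, 3.5),
    Detection(100.1, 9, 50.0, 0.0),  # seen only once, so it is filtered out
]
trajectories = tracks_to_trajectories(log)
print({tid: [round(t, 2) for t, _, _ in pts] for tid, pts in trajectories.items()})
# {7: [0.0, 0.1, 0.2]}
```

Recreating the road geometry and the sensor appearance of the location, which the text mentions as the costly part, is outside the scope of this sketch.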

Procedural traffic generation is also an option

The other approach is to use locations already available in the simulator and procedurally generate traffic based on various requirements. The ultimate goal of such systems is usually to model the real-world behavior of drivers and traffic flow as closely as possible, but to date, every available system has its own limitations.

Naturally, when we started developing our simulator, we hand-crafted each required subsystem so that we could deliver features for testing quickly. Keeping our interfaces open and building our systems in a modular way is very important to us, but when experimenting with new verification requirements, it is often much more efficient to build a working prototype first, independent of the intended final implementation, simply to check whether the solution is indeed what was requested. We therefore created our own traffic system, which could quickly populate any road segment by procedurally generating vehicles around the Ego-vehicle. The center of the simulation, however, always remained the Ego-vehicle, so every parameter set was defined from the Ego’s perspective. We could specify how many vehicles we wanted to see in the Ego-vehicle’s lane or in the lanes to its left and right. We could also define specific maneuvers in a high-level descriptor, and then observe cars overtaking each other, changing lanes, merging into traffic, or exiting the highway. This system proved very valuable for a while, especially since we mostly tested highway scenarios, where only a very limited subset of traffic rules applies.

As long as the vehicles could follow each other at a safe distance and perform the necessary lane changes, we could generate a wide variety of situations.
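An Ego-centric generator of this kind might be sketched as below. The caller asks for a number of vehicles per lane offset relative to the Ego-vehicle, and the vehicles are spaced with a constant time-gap rule so that they start at a safe following distance. This is a hypothetical illustration, not AImotive’s former traffic system. The 2 s time gap, 5 m standstill distance, 5 m vehicle length and the alternating ahead/behind placement are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SpawnedVehicle:
    lane_offset: int  # relative to the Ego lane: -1 = left, 0 = Ego lane, +1 = right
    s: float          # longitudinal offset from the Ego-vehicle in metres (+ = ahead)
    speed: float      # initial speed, m/s


def safe_gap(speed: float, time_gap: float = 2.0, standstill: float = 5.0) -> float:
    """Constant time-gap spacing: standstill distance plus distance covered in time_gap."""
    return standstill + speed * time_gap


def populate_around_ego(ego_speed: float, vehicles_per_lane: Dict[int, int],
                        vehicle_length: float = 5.0) -> List[SpawnedVehicle]:
    """Spawn the requested vehicles per lane, alternating ahead of and behind the Ego."""
    spacing = safe_gap(ego_speed) + vehicle_length
    spawned: List[SpawnedVehicle] = []
    for lane_offset, count in sorted(vehicles_per_lane.items()):
        for i in range(count):
            k = i // 2 + 1                  # 1, 1, 2, 2, 3, ... slots away from the Ego
            sign = 1 if i % 2 == 0 else -1  # even index: ahead, odd index: behind
            spawned.append(SpawnedVehicle(lane_offset, sign * k * spacing, ego_speed))
    return spawned


# Two vehicles in the left lane, one in the Ego lane, three in the right lane.
for v in populate_around_ego(25.0, {-1: 2, 0: 1, 1: 3}):
    print(v.lane_offset, v.s)
```

Every vehicle starts at the Ego’s speed, so the initial gaps stay safe until maneuvers from a higher-level descriptor change them. On highways, this spacing plus lane-change logic covers most of the rules that matter.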

Recently, we started shifting our focus towards urban scenarios and saw that, although our traffic generator works quite well, it lacks several features needed for more complex traffic scenarios. One such case was vehicles arriving at an intersection without traffic signs, where they needed to give way to one another. The procedurally generated vehicles could follow simple traffic rules, but negotiations like this showed that more development would be needed to handle the complex traffic situations that can arise in a city environment.
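The difficulty can be illustrated with a simplified "priority to the right" rule for a four-way intersection without signs in right-hand traffic. This is a hypothetical sketch. It ignores turning intentions and oncoming-traffic conflicts, and the earliest-arrival tie-break is an assumed stand-in for the informal negotiation human drivers use. The interesting part is that the rule alone deadlocks when all four arms are occupied.

```python
from typing import Dict, List, Set

# Right-hand traffic: for a vehicle arriving on a given arm, the arm on its right.
# A vehicle arriving from the south (heading north) has the east arm on its right.
RIGHT_OF = {"S": "E", "E": "N", "N": "W", "W": "S"}


def may_proceed(waiting: Set[str]) -> List[str]:
    """Arms whose front vehicle may enter an unsigned intersection (priority to the right).

    Turning manoeuvres and oncoming-traffic conflicts are ignored for brevity.
    """
    return sorted(arm for arm in waiting if RIGHT_OF[arm] not in waiting)


def next_to_enter(arrival_times: Dict[str, float]) -> List[str]:
    """Apply priority to the right; if every arm is occupied, break the deadlock."""
    allowed = may_proceed(set(arrival_times))
    if allowed or not arrival_times:
        return allowed
    # All four arms occupied: the rule alone gives no answer. Hypothetical tie-break:
    # the vehicle that arrived first goes, standing in for negotiation between drivers.
    return [min(arrival_times, key=arrival_times.__getitem__)]


print(next_to_enter({"S": 0.0, "E": 1.2}))                      # ['E']
print(next_to_enter({"N": 1.0, "E": 0.5, "S": 0.2, "W": 0.9}))  # ['S']
```

Rules that need an extra negotiation step like this are exactly what a simple highway-oriented generator lacks, and they multiply quickly once turning movements are added.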

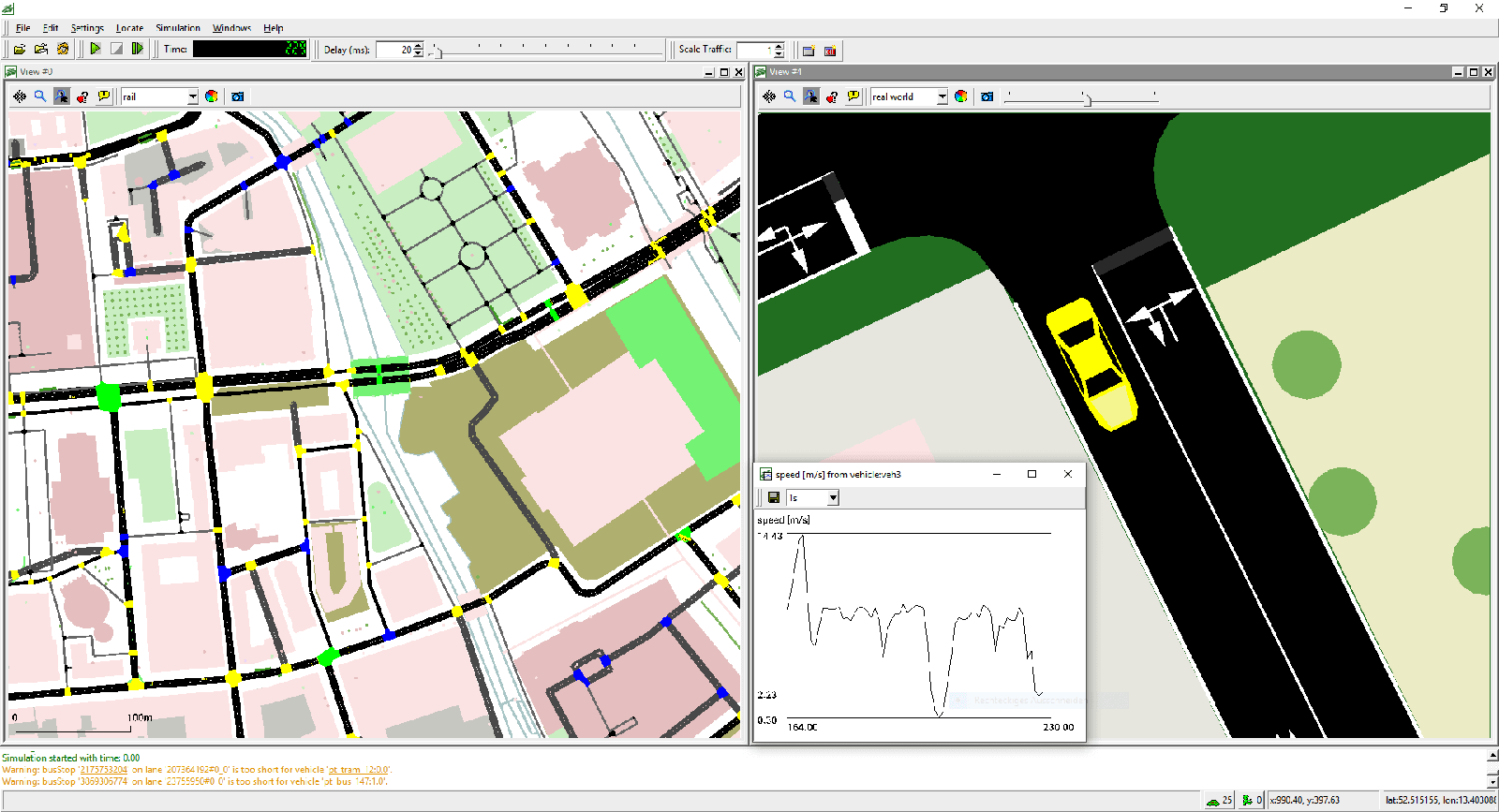

SUMO in aiSim

This was the point where we decided to look around the market for third-party solutions. We were aware of SUMO (Simulation of Urban MObility) but had never evaluated it for our internal needs. As a natural next step, we started researching whether and how we could integrate it into our simulator to replace our own traffic generation solution. Although SUMO is a very complex library, ample documentation is available online to support the first steps. After studying the documentation and familiarizing ourselves with the structure of the library, we were able to integrate it into aiSim easily thanks to our Traffic API. As a result, SUMO can now be used in place of our former procedural system to generate traffic on roads. At its current level, the integration lets us accurately model vehicle behavior in SUMO, and we are continuously working on supporting additional features.

With aiSim, we aim to deliver a modular package to our partners, and we kept this in mind when integrating SUMO into the simulator. We wanted to keep the integration minimal while still supporting all the features available in SUMO, so we decided to implement co-simulation. When a scenario that references a SUMO configuration file is loaded, an instance of the SUMO world is created alongside it. At each time step, we synchronize the two worlds: the SUMO-controlled vehicles are queried and positioned in aiSim, while the position of the Ego-vehicle is fed back to the SUMO world. This allows the generated vehicles to respect the actions of a vehicle controlled from outside SUMO. The integration code is implemented in a module that is open-sourced to our partners. If the current implementation is insufficient, or a library update or other modification is needed, partners can make the change easily without active involvement from our side. This makes joint iteration much faster, since waiting for AImotive to make a change is eliminated completely.
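The per-step synchronization described above can be sketched as a lock-step loop. This is a minimal, hypothetical illustration, not AImotive’s open-sourced module. `HostSim` stands in for aiSim’s Traffic API, which is not described here, and `TrafficSim` stands in for a SUMO wrapper. With SUMO’s Python TraCI client, such a wrapper could plausibly be built on `traci.simulationStep()`, `traci.vehicle.getIDList()`, `traci.vehicle.getPosition()`, `traci.vehicle.getAngle()` and `traci.vehicle.moveToXY()`. The toy `FakeTraffic` and `FakeHost` classes let the loop run without SUMO or a real driving simulator.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol, Tuple

Pose = Tuple[float, float, float]  # x, y in metres; heading in degrees


class TrafficSim(Protocol):
    """Hypothetical wrapper around a SUMO instance."""
    def step(self) -> None: ...
    def vehicle_poses(self) -> Dict[str, Pose]: ...
    def set_external_vehicle(self, veh_id: str, pose: Pose) -> None: ...


class HostSim(Protocol):
    """Hypothetical wrapper around the driving simulator's traffic interface."""
    def step(self, dt: float) -> None: ...
    def ego_pose(self) -> Pose: ...
    def sync_actors(self, poses: Dict[str, Pose]) -> None: ...


def cosimulate(host: HostSim, traffic: TrafficSim, steps: int, dt: float,
               ego_id: str = "ego") -> None:
    """Lock-step co-simulation: both worlds advance once per step and exchange state."""
    for _ in range(steps):
        host.step(dt)                                          # Ego-vehicle advances
        traffic.set_external_vehicle(ego_id, host.ego_pose())  # Ego pose -> SUMO
        traffic.step()                                         # SUMO moves its vehicles
        poses = traffic.vehicle_poses()
        poses.pop(ego_id, None)                                # host already owns the Ego
        host.sync_actors(poses)                                # SUMO vehicles -> host


# Toy stand-ins so the loop runs without SUMO or a real driving simulator.
@dataclass
class FakeTraffic:
    vehicles: Dict[str, Pose] = field(default_factory=dict)

    def step(self) -> None:
        # Every vehicle except the externally controlled Ego moves 1 m along x per step.
        for vid, (x, y, h) in list(self.vehicles.items()):
            if vid != "ego":
                self.vehicles[vid] = (x + 1.0, y, h)

    def vehicle_poses(self) -> Dict[str, Pose]:
        return dict(self.vehicles)

    def set_external_vehicle(self, veh_id: str, pose: Pose) -> None:
        self.vehicles[veh_id] = pose


@dataclass
class FakeHost:
    ego: Pose = (0.0, 0.0, 90.0)
    actors: Dict[str, Pose] = field(default_factory=dict)

    def step(self, dt: float) -> None:
        x, y, h = self.ego
        self.ego = (x + 15.0 * dt, y, h)  # Ego drives along x at 15 m/s

    def ego_pose(self) -> Pose:
        return self.ego

    def sync_actors(self, poses: Dict[str, Pose]) -> None:
        self.actors = poses


host = FakeHost()
traffic = FakeTraffic({"car1": (20.0, 0.0, 90.0)})
cosimulate(host, traffic, steps=10, dt=0.1)
print(host.actors)  # {'car1': (30.0, 0.0, 90.0)}
```

In this sketch the host steps first and the Ego pose is pushed before `traffic.step()`, so SUMO vehicles react to the Ego’s latest position within the same step. Reversing the order would add one step of latency. The ordering is a design choice made for the illustration, not something the text above specifies.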

This brings us back to standards and the exchangeability of scenarios. The main uncertainty with open standards such as OpenSCENARIO at the moment is that their results depend largely on each vendor’s implementation. If two simulator developers implement the standard in different ways, they will get different results, and consequently, the scenario will not be exchangeable. SUMO, however, provides a prebuilt library and a public interface to communicate with, so any SUMO configuration file will be executed identically in any simulator that supports it, providing true exchangeability for the users of this library.

András Bondor

Senior AI Engineer, AImotive