This article was originally published at videantis' website. It is reprinted here with the permission of videantis.

Advanced driver assistance systems (ADAS) automate, adapt, or enhance automotive vehicles to increase safety and improve the driving experience. These systems use sensors such as radar, ultrasound, and especially standard digital cameras to capture their surroundings. Video analytics on these images can provide the driver with extra information and warnings, or can even let the system autonomously adapt the speed and steer the car.

Market research firm ABI Research forecasts that the market for ADAS will grow from US$11.1 billion in 2014 to US$91.9 billion by 2020, passing the US$200 billion mark by 2024. ADAS packages have long been available as optional extras on luxury and executive vehicles, but in recent years the more popular systems have been making their way into affordable family cars.

The images captured by ADAS cameras require huge amounts of processing to extract meaningful data from them. A 2-megapixel camera running at 60 frames per second generates about half a gigabyte of data every second. This data must be analyzed very quickly so the system can respond in real time to other vehicles and to pedestrians who may cross, and can steer the car to follow the road. The amount of processing involved is staggering and requires supercomputer-class capabilities.
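
The half-gigabyte figure depends on how many bytes each pixel occupies, which the article does not state. The following Python sketch reproduces the arithmetic for a 1920x1080 (roughly 2-megapixel) sensor at 60 fps under three assumed pixel formats; the function name `raw_rate_bytes_per_s` is illustrative only.

```python
# Back-of-the-envelope raw data rate for one uncompressed camera stream.
# The bytes-per-pixel values are assumptions: the article does not say which
# pixel format its "half a gigabyte per second" estimate is based on.


def raw_rate_bytes_per_s(width: int, height: int, fps: int, bytes_per_pixel: float) -> float:
    """Uncompressed video data rate in bytes per second."""
    return width * height * fps * bytes_per_pixel


# 1920x1080 is roughly 2 megapixels.
W, H, FPS = 1920, 1080, 60

for name, bpp in [("YUV 4:2:0, 8-bit", 1.5), ("RGB, 24-bit", 3.0), ("RGBA, 32-bit", 4.0)]:
    mb_per_s = raw_rate_bytes_per_s(W, H, FPS, bpp) / 1e6
    print(f"{name:18s} {mb_per_s:6.0f} MB/s")
```

Under these assumptions only the 4-byte-per-pixel format lands at about half a gigabyte per second (roughly 498 MB/s); the more compact formats still produce roughly 190 to 375 MB/s per camera.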

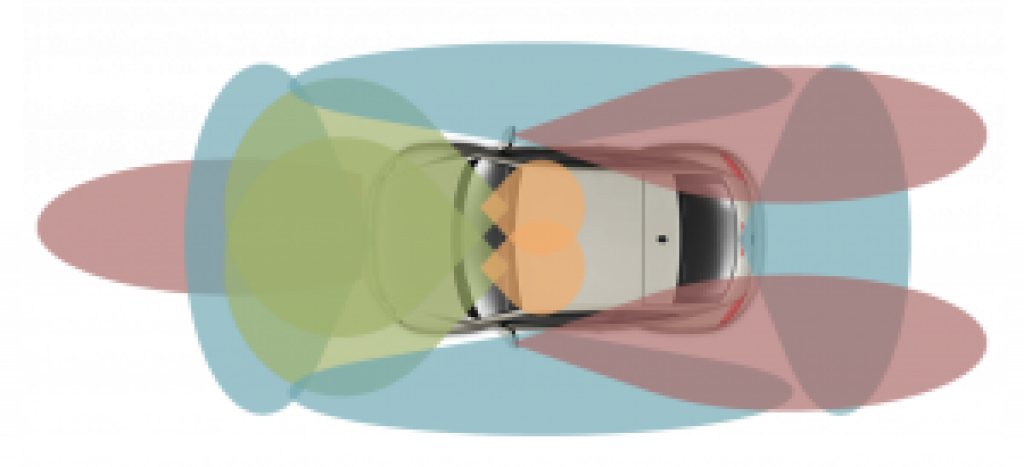

What further complicates matters is that there isn’t just a single camera, but many. There are already cars on the road with seven cameras. A common setup has multiple forward-looking cameras: long-range, shorter-range, and sometimes stereo cameras for controlling speed and steering. Another four cameras typically provide a surround view for the driver. Mirror-replacement cameras and interior cameras for driver monitoring or gesture recognition increase the camera count further.

Automotive cameras in the car

The question now becomes how do you connect all these cameras, where does the computation take place, and what does the system architecture look like?

Connecting the cameras

Most automotive cameras today are connected using LVDS. LVDS cables are expensive, though, because they need shielding, which increases the bill of materials and production cost. The trend is now toward Ethernet, which runs over unshielded twisted-pair cabling. Ethernet offers low cost with proven reliability and has already demonstrated its value in consumer electronics. Another advantage is that Ethernet can carry other data besides video, and network streams can be switched, so video data can be routed to different devices very flexibly. BMW was the first to adopt Ethernet in the car, and many other car manufacturers are now following suit and standardizing on Ethernet as the key in-vehicle communication network.
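
One way to see why cameras compress their video before putting it on the network is to compare a raw stream with the capacity of automotive Ethernet links. The Python sketch below assumes 8-bit YUV 4:2:0 video (12 bits per pixel), the 100 Mbit/s 100BASE-T1 and 1 Gbit/s 1000BASE-T1 link rates, and a hypothetical 80% of the link being usable for video; none of these parameters come from the article.

```python
# Minimum compression ratio needed to carry one camera stream over an
# automotive Ethernet link. Assumptions (not from the article): 8-bit YUV 4:2:0
# raw video and a hypothetical 80% of the link rate usable for video payload.

RAW_BITS_PER_S = 1920 * 1080 * 60 * 1.5 * 8  # ~2 MP at 60 fps, 12 bits/pixel

LINKS_MBIT = {
    "100BASE-T1": 100,    # IEEE 802.3bw
    "1000BASE-T1": 1000,  # IEEE 802.3bp
}

USABLE_FRACTION = 0.8  # hypothetical headroom for protocol overhead and other traffic


def min_compression_ratio(raw_bps: float, link_mbit: float,
                          usable: float = USABLE_FRACTION) -> float:
    """Smallest raw/encoded size ratio that fits the stream into the usable link rate."""
    return raw_bps / (link_mbit * 1e6 * usable)


for link, mbit in LINKS_MBIT.items():
    ratio = min_compression_ratio(RAW_BITS_PER_S, mbit)
    print(f"{link:12s} needs at least {ratio:5.1f}:1 compression")
```

With these assumptions a single raw 2-megapixel, 60 fps stream needs roughly 19:1 compression on a 100 Mbit/s link and still about 1.9:1 on a gigabit link.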

Smart cameras enable modularity and reduce cost

A new class of cameras is now emerging: cameras that not only produce a compressed video stream, but also analyze the images and transmit metadata along with the video. Such a smart camera can, for instance, detect pedestrians, calculate how objects are moving, and measure distances. This information can then be sent to the head unit.
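
A smart camera’s output can be pictured as an encoded frame bundled with a small metadata record. This Python sketch is hypothetical throughout: the `Detection` and `SmartCameraPacket` types, the fixed result from `detect_objects`, and the use of `zlib` as a stand-in for a real video encoder are all assumptions made for illustration, not a videantis or automotive interface. What it does show is the ordering: analysis runs on the raw frame, and only then is the frame encoded.

```python
import json
import zlib
from dataclasses import asdict, dataclass, field
from typing import List, Tuple


@dataclass
class Detection:
    """Hypothetical metadata record for one detected object."""
    label: str                       # e.g. "pedestrian"
    bbox: Tuple[int, int, int, int]  # (x, y, width, height) in pixels
    distance_m: float                # estimated distance to the object
    velocity_mps: float              # estimated speed relative to the camera


@dataclass
class SmartCameraPacket:
    """Hypothetical unit sent over Ethernet: encoded video plus metadata."""
    frame_id: int
    video_payload: bytes
    detections: List[Detection] = field(default_factory=list)


def detect_objects(raw_frame: bytes) -> List[Detection]:
    """Placeholder for in-camera vision (pedestrian detection, motion, distance)."""
    # A real implementation would analyze raw_frame; this returns a fixed example.
    return [Detection("pedestrian", (640, 300, 40, 110), 12.5, 1.4)]


def encode_frame(raw_frame: bytes) -> bytes:
    """Stand-in encoder. zlib is lossless; a real camera would use a lossy video codec."""
    return zlib.compress(raw_frame)


def process_frame(frame_id: int, raw_frame: bytes) -> SmartCameraPacket:
    # 1. Analyze the raw, uncompressed pixels, so no compression artifacts are seen.
    detections = detect_objects(raw_frame)
    # 2. Only afterwards encode the frame for transmission.
    payload = encode_frame(raw_frame)
    return SmartCameraPacket(frame_id, payload, detections)


def metadata_json(packet: SmartCameraPacket) -> str:
    """Serialize just the metadata, which is tiny compared with the video payload."""
    return json.dumps({
        "frame_id": packet.frame_id,
        "detections": [asdict(d) for d in packet.detections],
    })


if __name__ == "__main__":
    raw = bytes(1920 * 1080 * 3 // 2)  # one blank 8-bit YUV 4:2:0 frame
    packet = process_frame(0, raw)
    print(metadata_json(packet))
    print(len(packet.video_payload), "bytes of encoded video")
```

The ordering inside `process_frame` is the essential design choice: the vision step always sees uncompressed pixels, while the head unit receives both the compact metadata and the encoded video.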

There are at least three important advantages to this approach:

- The video analytics algorithms can run on the raw, uncompressed images before they’re encoded and transmitted over Ethernet. As a result, the ADAS vision algorithms don’t suffer from any artifacts introduced during compression and retain their accuracy.

- When processing is moved into the camera module, entry-level car models can ship with a lower-cost head unit. When a customer selects an option such as driver monitoring or automatic parking, the corresponding camera modules can simply be plugged in, without requiring the car manufacturer to also upgrade the head unit to a higher-performance, higher-cost model. This significantly reduces the car manufacturer’s total cost of materials and operations.

- The system becomes highly scalable: each camera added to the car also adds computer vision and compute capability. Without processing inside the camera, performance bottlenecks will invariably arise on the head unit, especially as more cameras are added. Placing significant visual processing capabilities inside the camera removes this bottleneck.
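
The scalability argument comes down to simple arithmetic. In this toy Python model every capacity and cost figure is a hypothetical placeholder; the point is only that centralized analytics add a large load step per camera, while smart cameras leave the head unit with just the (assumed small) cost of consuming their metadata.

```python
# Toy model: head-unit load with centralized vs in-camera ("smart") analytics.
# Every number here is a hypothetical placeholder, not a measured figure.

HEAD_UNIT_CAPACITY = 100.0       # compute units available on the head unit
ANALYTICS_PER_CAMERA = 30.0      # units to run vision on one camera's video centrally
METADATA_PER_SMART_CAMERA = 2.0  # units to consume one smart camera's metadata


def per_camera_cost(smart_cameras: bool) -> float:
    """Head-unit cost contributed by each camera."""
    return METADATA_PER_SMART_CAMERA if smart_cameras else ANALYTICS_PER_CAMERA


def head_unit_load(num_cameras: int, smart_cameras: bool) -> float:
    """Total compute units the head unit needs for num_cameras cameras."""
    return num_cameras * per_camera_cost(smart_cameras)


def max_cameras(smart_cameras: bool) -> int:
    """Largest camera count the head unit can serve without overload."""
    return int(HEAD_UNIT_CAPACITY // per_camera_cost(smart_cameras))


for n in (1, 4, 7):
    central = head_unit_load(n, smart_cameras=False)
    smart = head_unit_load(n, smart_cameras=True)
    status = "overloaded" if central > HEAD_UNIT_CAPACITY else "ok"
    print(f"{n} cameras: centralized {central:5.0f} ({status}), smart {smart:4.0f}")

print("max cameras, centralized:", max_cameras(False))
print("max cameras, smart:", max_cameras(True))
```

With these made-up numbers, centralized analysis tops out at three cameras, whereas the head unit could consume metadata from fifty smart cameras.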

Whether the cameras are used for rear view, surround view, mirror replacement, or driver monitoring, moving video processing into the camera is a clear trend.

Low power is a must

Adding computer vision and image processing engines to the camera module consumes power, however. You might think there’s plenty of power available in a car, and that’s true. Power itself isn’t the issue; heat is. Automotive camera modules are small, rugged, and waterproof, and they don’t dissipate heat very well. Any heat that builds up is transferred to the image sensor, and when sensors get warm they generate more image noise, reducing picture quality. Any video processing engine inside the camera must therefore consume minimal power, which makes low-power operation crucial.
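
The link between heat and noise can be sketched with a common rule of thumb for silicon image sensors: dark current roughly doubles for every 6 to 8 °C of temperature rise. The Python model below assumes a doubling interval of 7 °C (real sensors vary) and that dark shot noise scales with the square root of dark current; it is an illustration, not a characterization of any particular sensor.

```python
import math

# Rough model of how sensor heating raises noise. Assumption: dark current
# roughly doubles for every DOUBLING_C degrees of temperature rise, a common
# rule of thumb for silicon sensors; the exact value depends on the sensor.
DOUBLING_C = 7.0


def dark_current_factor(delta_t_c: float, doubling_c: float = DOUBLING_C) -> float:
    """Multiplier on dark current after a temperature rise of delta_t_c degrees C."""
    return 2.0 ** (delta_t_c / doubling_c)


def dark_noise_factor(delta_t_c: float, doubling_c: float = DOUBLING_C) -> float:
    """Dark shot noise grows with the square root of dark current."""
    return math.sqrt(dark_current_factor(delta_t_c, doubling_c))


for rise in (0, 7, 14, 21):
    print(f"+{rise:2d} C: dark current x{dark_current_factor(rise):4.1f}, "
          f"dark shot noise x{dark_noise_factor(rise):4.2f}")
```

Under this assumed model, a 14 °C rise from in-module processing would quadruple dark current and double dark shot noise, which is why every watt the processing engine avoids dissipating matters.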

videantis unified video/vision architecture

The videantis v-MP4000HDX architecture is an ideal fit for automotive cameras. It combines high performance, low power consumption, low silicon cost, and flexibility in a single unified architecture. The programmable architecture has been meticulously optimized, trimmed, and improved for well over a decade and a half, resulting in much higher performance per watt than more recently introduced competing visual computing architectures. Another advantage is flexibility: the unified architecture runs both video compression and a wide variety of computer vision algorithms very efficiently. This allows flexible trade-offs and tight integration of the video compression and computer vision algorithms. Using a single development environment and a single supplier for these functions further reduces total cost of ownership.
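
Running encoding and vision on the same programmable processor means they share one per-frame cycle budget, so effort saved on one can be spent on the other. This Python sketch uses a hypothetical 600 MHz clock and made-up per-frame encoder costs for three presets; the figures do not describe the v-MP4000HDX and only illustrate the kind of trade-off a unified architecture allows.

```python
# Hypothetical per-frame cycle budget on one processor shared by the video
# encoder and the vision algorithms. Figures are placeholders, not v-MP4000HDX data.

CLOCK_HZ = 600e6  # hypothetical processor clock
FPS = 60

# Hypothetical encoder cost per frame, in millions of cycles, for three presets.
ENCODER_MCYCLES = {"high quality": 6.0, "balanced": 4.0, "fast": 2.5}


def frame_budget_mcycles(clock_hz: float = CLOCK_HZ, fps: int = FPS) -> float:
    """Millions of cycles available per frame."""
    return clock_hz / fps / 1e6


def vision_budget_mcycles(preset: str) -> float:
    """Millions of cycles per frame left for vision after encoding with `preset`."""
    remaining = frame_budget_mcycles() - ENCODER_MCYCLES[preset]
    if remaining < 0:
        raise ValueError(f"encoder preset {preset!r} alone exceeds the frame budget")
    return remaining


for preset in ENCODER_MCYCLES:
    print(f"{preset:12s}: {vision_budget_mcycles(preset):4.1f} M cycles/frame for vision")
```

With these placeholder numbers the per-frame budget is 10 million cycles, so switching the encoder from the high-quality to the fast preset frees 3.5 million additional cycles per frame for vision algorithms.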

The videantis processor architecture is licensed to leading semiconductor companies, and videantis works closely with automotive Tier 1 suppliers and OEMs to ensure the fastest time-to-market and smooth integration, resulting in a sustainable competitive advantage.

We look forward to continuing to work with our customers and bringing our leading performance-per-watt and performance-per-dollar video/vision processing architecture to consumers. You may not notice, but the small camera modules in your next car will have little supercomputers inside, analyzing every pixel many times per second to ensure you arrive at your destination safely and comfortably.