This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Qualcomm AI Research’s latest cutting-edge research in 3D perception

The world is 3D and, as humans, we perceive the world in 3D. 3D perception offers many advantages over 2D, allowing us to more accurately perceive and engage in the world around us – which is why we see the importance of enabling machines with this capability. For example, 3D perception facilitates reliable results in varying light conditions, provides confident cues for object and scene recognition, and allows accurate size, pose, and motion estimation.

Enabling and enhancing key use cases

3D perception empowers many applications across devices and industries to make our lives better, everything from XR and autonomous driving to IoT, camera, and mobile. For example, to achieve immersive XR, 3D perception is crucial for 6 degrees-of-freedom motion estimation, obstacle avoidance, object placement, photorealistic rendering, hand pose estimation, and interacting in virtual environments.

3D perception greatly facilitates immersive XR.

It also greatly facilitates autonomous driving, utilizing the 3D data streaming from cameras, LiDAR, and radar to help pave the way for safer travel. 3D perception is used for 3D map reconstruction, positioning the vehicle on the road, finding navigable road surfaces, avoiding obstacles, and estimating trajectories of vehicles, pedestrians, and other objects for path planning.

New challenges to overcome for 3D perception

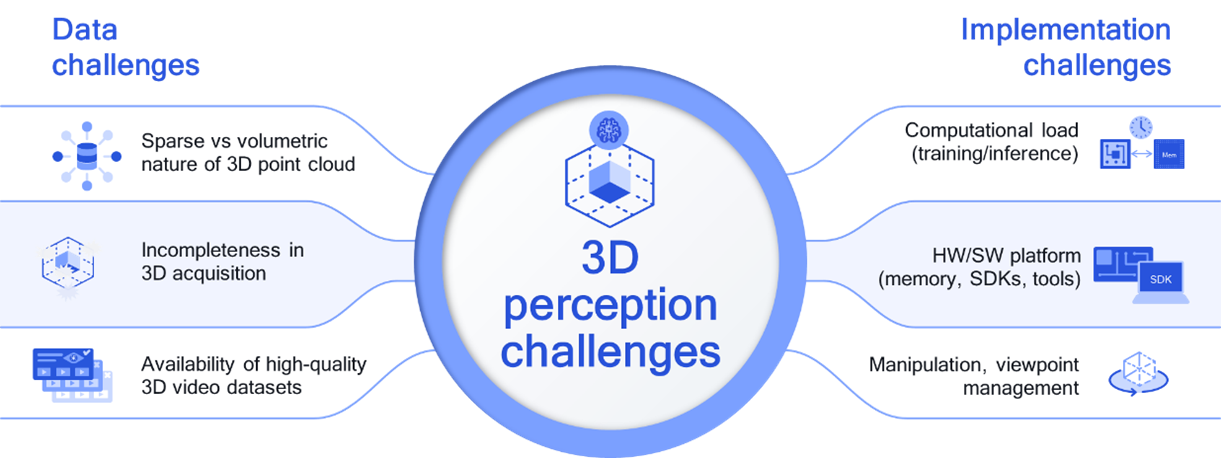

3D perception relies on several tasks to better understand the world, many of which are similar in concept to 2D perception. Making the state-of-the-art (SOTA) 3D perception AI models a reality, and at scale, for real-world deployment on edge devices constrained by power, thermal, and performance has been a challenge. Notably, two categories of challenges stand out – data and implementation challenges. Unlike 2D images where pixels are arranged on a uniform grid, a 3D point cloud is very sparse and not uniform, which can cause accessibility vs. memory trade-offs.

3D perception faces data and implementation challenges.

Making 3D perception at scale a reality

What makes Qualcomm AI Research’s leading 3D perception research unique is that we develop novel AI techniques, build real-world deployments through our full-stack AI research, and create energy-efficient platforms to make 3D perception ubiquitous. Our purposeful innovation has led to many 3D perception breakthroughs both in novel research and in proof-of-concept demonstrations on target devices, thanks to our full-stack optimizations using the Qualcomm AI Stack toolkits and SDKs. I’d like to highlight four key areas of our leading 3D perception research: depth estimation, object detection, post estimation, and scene understanding, as shown here:

Our leading 3D perception research.

Accurate depth estimation across different modalities

Depth estimation and 3D reconstruction is the perception task of creating 3D models of scenes and objects from 2D images. Our research leverages input configurations including a single image, stereo images, and 3D point clouds. We’ve developed SOTA supervised and self-supervised learning methods for monocular and stereo images with transformer models that are not only highly efficient but also very accurate. Beyond the model architecture, our full-stack optimization includes using neural architecture search with DONNA (Distilling Optimal Neural Networks Architectures) and quantization with the AI Model Efficiency Toolkit (AIMET). As a result, we demonstrated the world’s first real-time monocular depth estimation on a phone that can create 3D images from a single image. Watch my 3D perception webinar for more details.

Efficient and accurate 3D object detection

3D object detection is the perception task of finding positions and regions of individual objects. For example, the goal could be detecting the corresponding 3D bounding boxes of all vehicles and pedestrians on LiDAR data for autonomous driving. We are making possible efficient object detection in 3D point clouds. We’ve developed an efficient transformer-based 3D object detection architecture that utilizes 2D pseudo-image features extracted in the polar space. With a smaller, faster, and lower power model, we’ve achieved top accuracy scores in the detection of vehicles, pedestrians, and traffic signs on LiDAR 3D point clouds.

Low latency and accurate 3D pose estimation

3D pose estimation is the perception task of finding the orientation and key-points of objects. For XR applications, accurate and low-latency hand and body pose estimation are essential for intuitive interactions with virtual objects within a virtual environment. We’ve developed an efficient neural network architecture with dynamic refinements to reduce the model size and latency for hand pose estimation. Our models can interpret 3D human body pose and hand pose from 2D images, and our computationally scalable architecture iteratively improves key-point detection with less than 5mm error – achieving the best average 3D error.

3D scene understanding

3D scene understanding is the perception task of decomposing a scene into its 3D and physical components. We’ve developed the world’s first transformer-based inverse rendering for scene understanding. Our end-to-end trained pipeline estimates physically-based scene attributes from an indoor image, such as room layout, surface normal, albedo (surface diffuse reflectivity), material type, object class, and lighting estimation. Our AI model leads to better handling of global interactions between scene components, achieving better disambiguation of shape, material, and lighting. We achieved SOTA results on all 3D perception tasks and enable high-quality AR applications such as photorealistic virtual object insertion into real scenes.

Our method correctly estimates lighting to realistically insert objects, such as a bunny.

More 3D perception breakthroughs to come

Looking forward, our ongoing research in 3D perception is expected to produce additional breakthroughs in neural radiance fields (NeRF), 3D imitation learning, neuro-SLAM (Simultaneous Localization and Mapping), and 3D scene understanding in RF (Wi-Fi/5G). In addition, our perception research is much broader than 3D perception as we continue to drive high-impact machine learning research efforts and invent technology enablers in several areas. We are focused on enabling advanced use cases for important applications, including XR, camera, mobile, autonomous driving, IoT, and much more. The future is looking bright as more perceptive devices become available that enhance our everyday lives.

Download the 3D Perception Presentation

Our latest cutting-edge research in 3D perception, from a recent webinar.

Fatih Porikli

Senior Director of Technology, Qualcomm Technologies