This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group.

Co-packaged optics (CPO) has attracted considerable attention recently because of the power efficiency it promises for data center networking. To satisfy market demand and convince end users of CPO’s viability, vendors must demonstrate workable multi-vendor business models and considerable cost and power savings.

Recent news indicates that most of CPO’s main proponents targeting networking applications have suspended support for their CPO programs due to macroeconomic headwinds. Even end users have stopped looking at CPO. There are several reasons why CPO is losing its appeal. The first is the well-established industrial ecosystem around pluggables. In addition, new materials for integrated electro-optic modulators in pluggable form factors can help achieve the required low power consumption and can be introduced to the market without any change to existing network system designs.

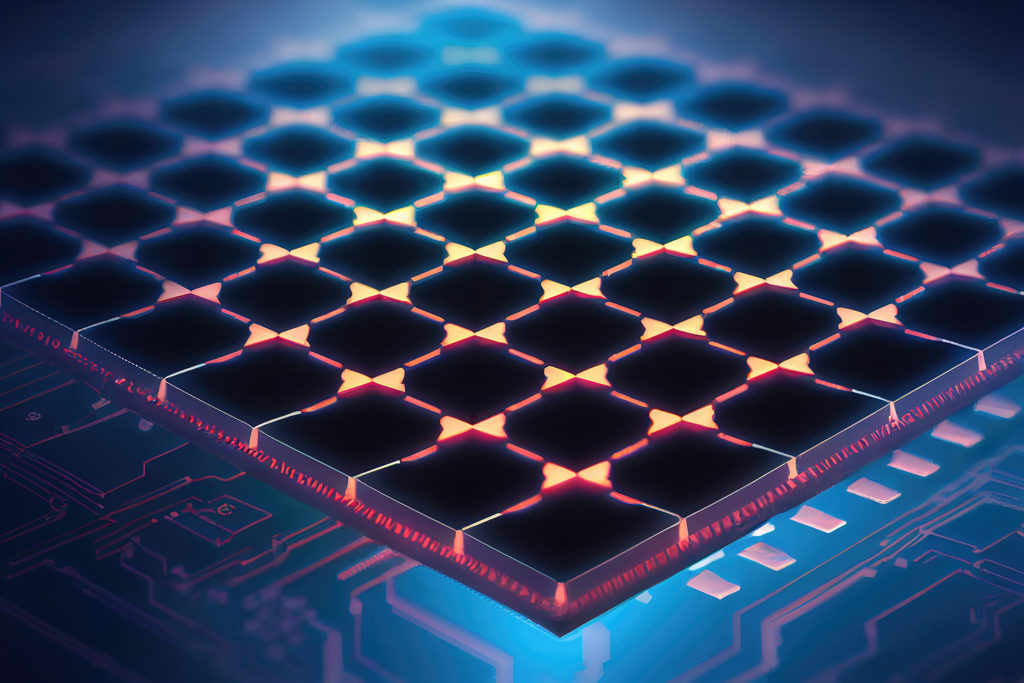

However, CPO remains attractive for AI/ML systems. AI models are growing in size at an unprecedented rate, and the limits of traditional architectures (copper-based electrical interconnects) for chip-to-chip or board-to-board links will become the main bottleneck for scaling ML. As a result, new, very-short-reach optical interconnects have emerged for high-performance computing (HPC) and its new disaggregated architecture. A disaggregated design separates the compute, memory, and storage components found in a server and pools each of them separately. Using optical interconnects between xPUs (CPUs, DPUs, GPUs, TPUs, FPGAs, and ASICs), memory, and storage, by means of advanced in-package optical I/O technology, can help achieve the necessary transmission speeds and bandwidths.

Moreover, the potential for billions of optical interconnects (chip-to-chip, board-to-board) in the future is driving big foundries to prepare for mass production. Since most photonics manufacturing IP is held by non-foundry firms (Ayar Labs, Ranovus, Cisco, Nvidia, Marvell, Lightmatter, and many others), big foundries such as Tower Semiconductor, GlobalFoundries, ASE Group, TSMC, and Samsung are preparing silicon photonics process flows to accept any PIC architecture from design houses. These foundries are also joining forces in the industry consortiums behind interconnect standards such as PCIe, CXL, and UCIe. A common chiplet interconnect specification enables the construction of large system-on-chip (SoC) packages that exceed the maximum reticle size. It allows components from different vendors to be mixed within the same package and improves manufacturing yields by using smaller dies. Each chiplet can use a different silicon manufacturing process suited to a specific device type or to a computing performance and power-draw requirement.
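
The yield argument for smaller dies can be illustrated with a rough sketch. It assumes the simple Poisson yield model (yield = exp(-area × defect density)), which is a common first-order approximation and not something stated in this report. The defect density, die area, and chiplet count below are hypothetical values chosen only for illustration.

```python
import math


def poisson_yield(die_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson defect model."""
    return math.exp(-die_area_cm2 * defect_density_per_cm2)


# Hypothetical inputs, not taken from the report.
defect_density = 0.1      # defects per cm^2 (assumed)
monolithic_area = 8.0     # cm^2, a die close to reticle size (assumed)
n_chiplets = 4            # split the same silicon area into 4 chiplets
chiplet_area = monolithic_area / n_chiplets

y_mono = poisson_yield(monolithic_area, defect_density)
y_chiplet = poisson_yield(chiplet_area, defect_density)

print(f"Monolithic die yield: {y_mono:.1%}")
print(f"Single chiplet yield: {y_chiplet:.1%}")
```

Under this model, the chance that four untested chiplets are all good equals the monolithic yield, so the gain comes from testing chiplets before assembly: a defect scraps one small die instead of the whole large one. The sketch ignores assembly yield and packaging overhead.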

Revenue generated by the CPO market reached around US$38 million in 2022 and is expected to reach US$2.6 billion in 2033, at a 46% CAGR for 2022 – 2033. Projections of rapidly growing training dataset sizes show that data will become the main bottleneck for scaling ML models, and as a result, a slowdown in AI progress might be observed. Accelerating data movement in AI/ML gear is the main driver for adopting optical interconnects in next-generation HPC systems, and using optical I/O in ML hardware can help cope with the explosive growth of data.
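
As a quick sanity check on the growth figure, the compound annual growth rate implied by the two revenue endpoints can be recomputed. This is a minimal sketch: the function name `cagr` is illustrative, and the inputs are the rounded figures quoted above, so the result may differ slightly from the report's own number.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures quoted in the text, in millions of US dollars.
revenue_2022 = 38.0
revenue_2033 = 2600.0
periods = 2033 - 2022  # 11 compounding years

rate = cagr(revenue_2022, revenue_2033, periods)
print(f"Implied CAGR 2022-2033: {rate:.1%}")
```

With these rounded endpoints the implied rate comes out just under 47%, which is in line with the quoted 46%.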

Deep photonics integration, driven by advances in silicon photonics, has already demonstrated viability in certain data center applications. The CPO architecture will continue to evolve, even beyond datacom. Stay tuned as we follow the story of CPO.

Acronyms

- AI: Artificial intelligence

- CXL: Compute express link

- HPC: High-performance computing

- CPO: Co-packaged optics

- CAGR: Compound annual growth rate

- I/O: Input/output

- ML: Machine learning

- PCIe: Peripheral component interconnect express

- PIC: Photonic integrated circuit

- xPU: Processing unit, a silicon chip that can have different architectures: CPU, DPU, GPU, TPU, FPGA, ASIC

- UCIe: Universal chiplet interconnect express