This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Qualcomm AI Research explores fundamental research to combine causality with AI

At its core, science is all about finding causal relations. For example, it is not enough to know that smoking and cancer are correlated; what is important is to know that if we start or stop smoking, then that will change our probability of getting cancer.

Machine learning (ML) as it exists today usually does not take causality into account, and instead is merely concerned with prediction based on statistical associations. This can lead to problems when using such models for decision making. For example, we may find a strong and statistically robust correlation between the chocolate consumption per capita, and the number of Nobel Prizes per capita but if we would use this model to inform policy (encouraging people to eat more chocolate), we would probably not obtain the desired result.

Over the last few decades, computer scientists and statisticians have developed a comprehensive framework for causal inference that makes it possible (under certain circumstances) to infer causal relations from observational and experimental data, and more generally to do formal reasoning about cause and effect.

These frameworks allow us to answer three kinds of questions.

- Associational: if I see X, what is the probability that I will see Y?

- Interventional: if I do X, what is the probability that I will see Y?

- Counterfactual: what would Y have been, had I done X?

As we will discuss below, being able to answer such questions is very important in many AI tasks.

Causal reasoning is also a key component of human intelligence. This is no surprise, because the main purpose of our brain is to decide how to act — a tree does not have a brain. Developmental psychologists have found that already at a young age, children develop the capacity to reason about cause and effect, and the causal models mentioned earlier give a fairly good description of human causal inferences.

Since causal reasoning is key both to our scientific understanding of the world, and our common-sense understanding of it, it stands to reason that causal reasoning is also critical in the quest to build AI. At Qualcomm AI Research we are exploring various ways in which causal models can be used to solve long-standing fundamental open problems in AI and ML.

How causality improves representation learning

In many learning problems, the input consists of low-level sensor data such as images from a camera. Learning how to act (e.g., to drive a car or control a robot) using only such low-level inputs is very challenging. The field of representation learning is concerned with training neural networks to turn such low-level inputs, consisting of millions of individually meaningless variables like pixels, into high-level semantically meaningful variables like the class and appearance of an object or its position.

A common finding is that with the right representation, the problem becomes much easier. However, how to train the neural network to learn useful representations is still poorly understood. Here, causality can help. In causal representation learning, the problem of representation learning is framed as finding the causal variables, as well as the causal relations between them.

The variables found using such methods tend to be much more interpretable. They also facilitate reasoning. For instance, an AI agent may reason that other cars on the road are driving slowly because they noticed that an ambulance is coming, even if we haven’t seen it yet. In our paper, Weakly supervised causal representation learning, we study under which conditions we can learn the “correct” high-level representations – meaning the ones associated with the data-generating process. We also provide a simple proof-of-concept example where we learn a representation that disentangles various buttons and lights from visual input, and then figures out which buttons a robot arm should press to cause different lights to turn on.

In addition to learning the causal variables and causal relations, we are working on methods that can learn how to intervene on the causal variables. For instance, for causal knowledge about buttons and lights to be useful, the robot needs to learn practical skills to actually press the button and link these skills to its reasoning capabilities.

How causality improves imitation learning

Another area where causal inference can help is imitation learning. This is a class of methods where the AI agent is trained to imitate the actions of an expert. For example, we could collect video and LIDAR data, as well as steering and braking commands from expert human drivers, and train an AI agent to predict the actions of the expert when given the same video and LIDAR input.

This approach is appealing because of its simplicity, but it can fail catastrophically if causality is not considered. One kind of problem has been dubbed causal confusion. Imagine that the visual input of our agent includes a small light on the dashboard, that turns on whenever the brake is pressed. The agent, lacking common sense, may discover that when the light is on, it is almost always the case that the action in the next time step (30fps) is to brake. Statistically, this is a correct inference, because most of the time when the expert is braking during one time step, they will do the same during the next time step. But causally speaking it is wrong: the expert was braking because of, for example, a dangerous situation or a stoplight, not because of a light on the dashboard. Clearly, if the imitator learns to keep braking whenever the brakes are pressed, and keep driving otherwise, then it will not do very well in the real world.

The agent learned to brake only when the indicator is on (causal confusion).

Another problem occurs when the imitator fails to exactly imitate the expert. For example, if the imitator manages to be 99.9 percent accurate, it will still drift away from the trajectory of the expert driver over time. As a result, the imitator will find itself in a (potentially dangerous) situation it has not seen during training. Unless it can answer a counterfactual question, “what would the expert have done had they been in this situation?”, the imitator will not know how to drive safely. In order to imitate properly, rather than “monkey see monkey do”-type imitation, the imitator needs to understand why the expert chose the action they chose. And why questions are causal questions.

A final problem occurs when the expert has access to more information than the imitator, which can lead to a problem known as confounding in the causality literature. For instance, imagine a robot learning to imitate an expert (who remotely controls the robot) at lifting coffee cups. If the expert knows the weight of the cup, they know how much force to apply to lift it. This can lead the imitator to infer that if the past actions applied a moderate amount of force, then the weight must be moderate, and hence at the next time step it is appropriate to apply a moderate force again. But of course, when it is not the expert but the agent performing the actions, the past actions do not contain information about the weight. Instead, in such circumstances, the imitator should employ exploratory behavior, for instance to first carefully gauge the weight of the cup. We can train such safe exploratory behaviors through a causality-aware imitation learning algorithm.

How causality improves planning and credit assignment

Two key problems in sequential decision-making problems are planning and credit assignment. In planning, the AI agent considers different sequences of actions and predicts their outcomes before deciding what to do next. To do this well, the agent needs to answer interventional questions: what would happen if I performed a certain action? Hence, the agent needs to have a causal model of its environment.

Another problem is credit assignment. In many problems, the agent takes a long sequence of small actions before some desirable or undesirable outcome is reached. At that point, it is critical to know which of those actions actually caused the outcome, so that we can learn from this experience by changing (or not) the action that led to the observed outcome. At present, reinforcement learning agents still have a lot of difficulty with this, because they do not take causality into account explicitly.

How causality improves robustness and distribution shift

Current ML models can be trained to predict extremely well in a controlled environment, but their performance can easily degrade when they are deployed in an environment that differs even a little bit from the training environment. A notorious example is a medical diagnosis model trained on medical images, where the data was obtained from two hospitals. In this case, hospital A had more serious cases of the disease in question, while hospital B had more mild cases. Furthermore, the two hospitals used different scanners to obtain the images, and one of them put a small logo in the corner of the image. This led the model to predict the severity based on the logo – a strategy that will clearly fail when applied in other hospitals with different scanners.

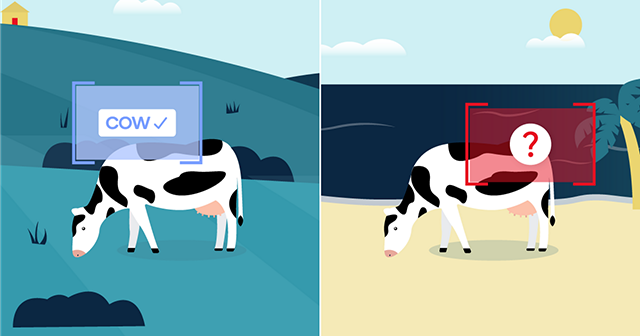

A similar example that is often discussed is an image classifier that can label cows in an image. In the training data, cows were often seen standing on grass, and so the system takes the presence of grass to be evidence that the image contains a cow. When the system sees a cow on the beach at test time, it may fail to recognize it.

The system fails to recognize a cow on the beach.

In both cases, the ML model erroneously took some feature of the image to be the cause of the observed label. In order to avoid such problems, the system needs to understand the true causes of the label; to know why the human labeler chose the label they did. Only then can we hope to generalize to new settings; to build AI that can operate in a changing world.

Conclusion

Causal inference is not a magic bullet, and at this point it is not completely clear how to fruitfully combine the tools of causal inference with highly successful methods of deep learning. But as we have argued in this post, many of the fundamental open problems in AI are related to the issue of causality. For this reason, the causality and interactive learning team at Qualcomm AI Research is investigating ways to leverage these tools to solve long-standing open problems in AI, and address limitations of real-world AI systems.

Taco Cohen

Principal Engineer, Qualcomm AI Research