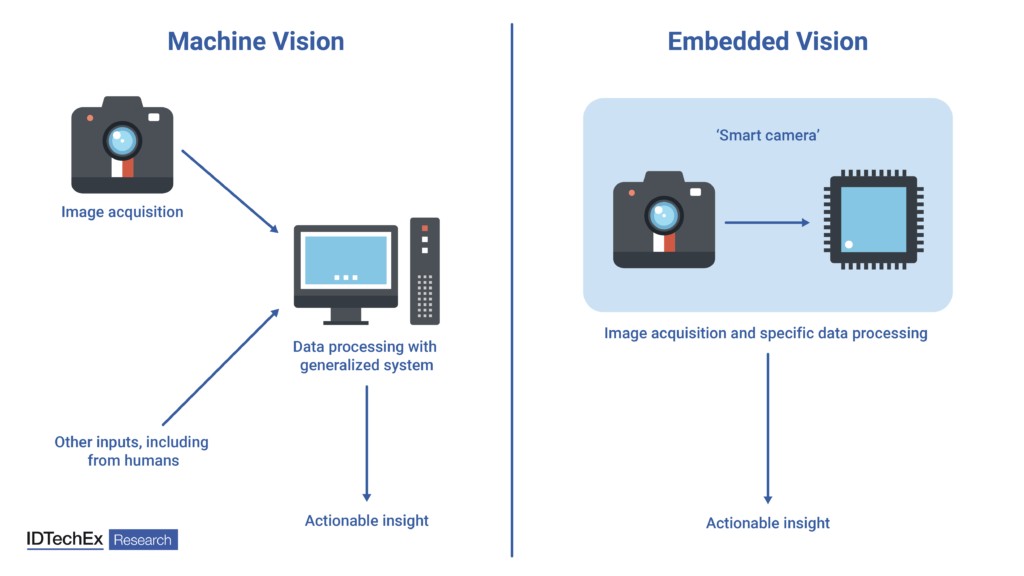

Anyone who has used a ‘smart lens’ app on their smartphone is familiar with machine vision. Rather than simply reproducing and storing a picture for later viewing, machine vision applies an image processing algorithm to extract additional information, such as the locations of edges or the identities of objects. While few would regard a ‘smart lens’ app as essential, machine vision is a critical component of many industrial processes, such as material sorting and quality control. It is also crucial for ADAS (advanced driver assistance systems) and, ultimately, autonomous vehicles.

Embedded vision brings these computational capabilities to the ‘edge’. Instead of sending images from a sensor to a central processor, embedded vision performs the initial analysis adjacent to the sensor on a dedicated, often application-specific, processor. This greatly reduces data transmission requirements: rather than all the acquired information (i.e., each pixel’s intensity over time), only the conclusions (e.g., object locations) are transmitted. The combination of reduced data transmission and application-specific processing also reduces latency, enabling quicker system responses.
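To give a feel for the size of the data reduction described above, the sketch below compares streaming every pixel with sending only object locations. All figures (a 1920 × 1080 monochrome sensor at 8 bits per pixel and 30 frames per second, ten detections per frame at 16 bytes each) are hypothetical assumptions chosen for illustration, not values from any particular system.

```python
# Hypothetical figures for illustration only; not taken from any specific system.
FRAME_WIDTH = 1920          # pixels
FRAME_HEIGHT = 1080         # pixels
BYTES_PER_PIXEL = 1         # 8-bit monochrome
FRAME_RATE = 30             # frames per second

DETECTIONS_PER_FRAME = 10   # objects reported per frame (assumed)
BYTES_PER_DETECTION = 16    # assumed: class ID, confidence, bounding-box coordinates


def raw_bandwidth() -> int:
    """Bytes per second needed to stream every pixel of every frame."""
    return FRAME_WIDTH * FRAME_HEIGHT * BYTES_PER_PIXEL * FRAME_RATE


def result_bandwidth() -> int:
    """Bytes per second needed to send only the detected object locations."""
    return DETECTIONS_PER_FRAME * BYTES_PER_DETECTION * FRAME_RATE


if __name__ == "__main__":
    raw = raw_bandwidth()
    results = result_bandwidth()
    print(f"raw pixels:   {raw:,} B/s")
    print(f"results only: {results:,} B/s")
    print(f"reduction:    {raw / results:,.0f}x")
```

Under these assumptions, raw streaming needs about 62 MB/s while the detection results need 4.8 kB/s, a reduction of roughly 13,000 times. The exact ratio depends entirely on the assumed resolution, frame rate, and output format.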

Minimizing Size, Weight, and Power (SWAP)

While maximizing performance metrics such as resolution and dynamic range is the priority in many image sensing applications, sensors for embedded vision are typically designed with other priorities in mind. The collected data needs to be good enough to meet the requirements of the image processing algorithm, but since the image will never be viewed by a person, maximizing image quality beyond that point is largely unnecessary. Instead, the aim is to make the system as light and compact as possible while minimizing power consumption. This SWAP reduction enables more sensors to be deployed in devices with size and weight constraints, such as drones, while also reducing associated costs. As such, small image sensors, often those originally developed for smartphones, are typically deployed. Over time, expect to see greater integration, such as stacking the sensing and processing functions.

Miniaturized Spectral Sensing

Embedded vision isn’t restricted to conventional image sensors that detect RGB pixels in the visible range. Monochrome sensing will likely suffice for simpler algorithms, such as edge detection for locating objects, and reduces sensor cost. Alternatively, using more sophisticated sensors with additional capabilities arguably supports the essence of embedded vision by minimizing subsequent processing requirements.
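As an illustration of the kind of simple algorithm that works on monochrome data, the sketch below marks edges in a grayscale image using the Sobel operator. Sobel is one common choice, not necessarily what any given embedded vision system uses, and the function name `sobel_edges` and its `threshold` parameter are hypothetical. It is written in plain Python for readability; a real embedded system would run an optimized equivalent on dedicated hardware.

```python
from typing import List

Image = List[List[int]]  # rows of 8-bit grayscale intensities

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_edges(img: Image, threshold: float) -> List[List[bool]]:
    """Mark interior pixels whose Sobel gradient magnitude exceeds threshold.

    Border pixels are left False because the 3x3 kernel does not fit there.
    """
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            # Correlate the 3x3 neighbourhood with both kernels.
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            edges[y][x] = (gx * gx + gy * gy) ** 0.5 > threshold
    return edges


if __name__ == "__main__":
    # Dark left half, bright right half: a single vertical edge.
    img = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
    for row in sobel_edges(img, threshold=100):
        print("".join("#" if e else "." for e in row))
```

For the test image, only the two columns on either side of the dark/bright boundary are marked, showing how a single intensity channel is enough to locate an object's outline.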

Adding spectral resolution to image sensors can expedite subsequent processing for applications such as material identification, since the additional spectral dimension generally means a smaller training data set is required. However, meeting the SWAP requirements of most embedded vision systems is challenging for many spectral sensors because of their bulky architectures, which house diffractive optics. Emerging approaches aiming to resolve this challenge include MEMS (microelectromechanical systems) spectrometers, photonic chips that increase the optical path length, and narrow-bandwidth spectral filters added to image sensors using conventional semiconductor manufacturing techniques.
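One simple way a per-pixel spectrum can support material identification is to compare it against a small library of reference spectra. The sketch below uses the spectral angle mapper, a technique from hyperspectral imaging that is swapped in here purely for illustration; it is not presented as the method used by any sensor mentioned above. The six-band sensor, the material names, and the reference values are all hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical 6-band reference spectra (relative response per filter band).
# Values are invented for illustration; real libraries are measured per material.
REFERENCE_SPECTRA: Dict[str, Tuple[float, ...]] = {
    "material_a": (0.10, 0.12, 0.15, 0.40, 0.55, 0.60),
    "material_b": (0.50, 0.48, 0.45, 0.30, 0.20, 0.15),
    "material_c": (0.30, 0.30, 0.30, 0.30, 0.30, 0.30),
}


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two spectra treated as vectors.

    Scaling a spectrum (e.g. brighter illumination) leaves the angle unchanged.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_theta = dot / (norm_a * norm_b)
    return math.acos(max(-1.0, min(1.0, cos_theta)))  # clamp rounding error


def classify(pixel: Sequence[float]) -> str:
    """Return the reference material whose spectrum is closest in angle to pixel."""
    return min(REFERENCE_SPECTRA,
               key=lambda name: spectral_angle(pixel, REFERENCE_SPECTRA[name]))


if __name__ == "__main__":
    # material_a seen under half the illumination: same shape, lower intensity.
    print(classify([0.05, 0.06, 0.075, 0.20, 0.275, 0.30]))
```

Because the comparison depends on spectral shape rather than brightness, a dimly lit sample still matches its reference, which hints at why the extra spectral dimension can simplify downstream processing.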

Event-Based Vision

Another sensing approach that aims to minimize subsequent processing requirements is event-based vision. Rather than acquiring images at a constant frame rate, in event-based vision each pixel independently reports a timestamped event whenever the intensity it detects changes by more than a set threshold. As such, these sensors combine high temporal resolution in rapidly changing regions with far less data from static background regions, reducing data transfer and subsequent processing requirements. Furthermore, because each pixel acts independently, the dynamic range is increased. Crucially, this alternative acquisition approach takes place within the sensor chip itself, not in post-processing algorithms, so the associated embedded vision system can be simpler, having less data to handle.
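The pixel behaviour described above can be approximated in software. The sketch below emulates an idealized event-based sensor from ordinary frames, using the commonly described model in which each pixel compares its log intensity with a stored reference and emits an event when the difference reaches a threshold. It is a simplification under stated assumptions: a real sensor does this asynchronously in pixel circuitry rather than at frame times, and may emit several events for one large change. The names `frames_to_events` and `Event` and the default threshold are hypothetical.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    x: int
    y: int
    t: float       # timestamp in seconds
    polarity: int  # +1 brighter, -1 darker


def frames_to_events(frames: Sequence[Sequence[Sequence[float]]],
                     timestamps: Sequence[float],
                     threshold: float = 0.2) -> List[Event]:
    """Emulate an idealized event-based sensor from a sequence of frames.

    Each pixel keeps its own reference log intensity and emits one event when
    the current log intensity differs from it by at least `threshold`, then
    resets its reference. Static pixels produce no events at all.
    """
    eps = 1e-6  # avoid log(0) for black pixels
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                level = math.log(v + eps)
                delta = level - ref[y][x]
                if abs(delta) >= threshold:
                    events.append(Event(x, y, t, 1 if delta > 0 else -1))
                    ref[y][x] = level  # this pixel's new reference
    return events


if __name__ == "__main__":
    frames = [
        [[0.5, 0.5], [0.5, 0.5]],
        [[0.5, 0.5], [0.5, 1.0]],  # one pixel brightens
        [[0.5, 0.5], [0.5, 1.0]],  # nothing changes
    ]
    print(frames_to_events(frames, [0.0, 0.01, 0.02]))
```

Of the twelve pixel readings after the first frame, only one produces any output, illustrating how static regions generate no data.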

Further Insights

The adoption of embedded vision systems will continue to grow as more devices gain autonomous capabilities, with robotics, industrial vision, and vehicles as the dominant markets. This represents an opportunity not just for compact image sensors, optics, and processing ICs, but also for emerging sensor types such as spectral imaging and event-based vision that can reduce processing requirements and thus support embedded vision’s value proposition of reducing size, weight, power, and latency.

IDTechEx’s report “Emerging Image Sensor Technologies 2023-2033: Applications and Markets” explores a diverse range of image sensing technologies offering resolution and wavelength detection capabilities far beyond those currently attainable. This includes the spectral and event-based sensing outlined here, but also InGaAs alternatives for short-wave infrared (SWIR) imaging, thin film photodetectors, perovskite photodetectors, wavefront imaging, and more. Many of these emerging technologies are expected to gain traction across sectors, including robotics, industrial imaging, healthcare, biometrics, autonomous driving, agriculture, chemical sensing, and food inspection.

To find out more about this report, including downloadable sample pages, please visit www.IDTechEx.com/imagesensors.

About IDTechEx

IDTechEx guides your strategic business decisions through its Research, Subscription and Consultancy products, helping you profit from emerging technologies. For more information, visit www.IDTechEx.com.

Dr Matthew Dyson

Senior Technology Analyst, IDTechEx