This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

On-device generative AI is critical for scale, privacy and performance: here’s how sub-10 billion parameter models enable its proliferation

Previous posts in this series established the prohibitive costs and privacy issues inherent in running generative artificial intelligence (AI) models solely in the cloud. As such, the only viable path to widespread AI adoption and explosive innovation is on-device generative AI.

Two converging vectors will enable on-device generative AI to proliferate.

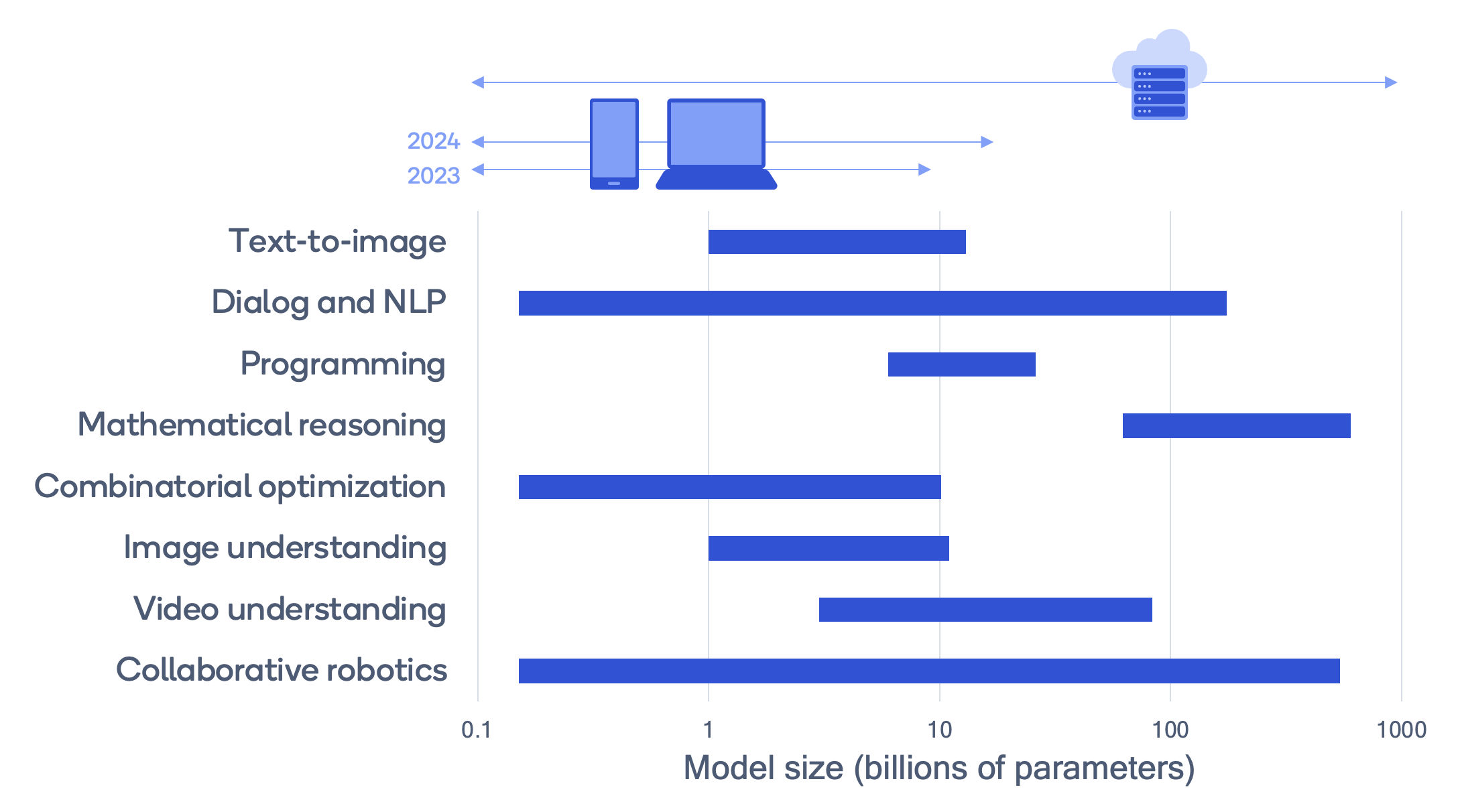

One vector is continued processing and memory performance advancements in chipsets designed for edge devices such as smartphones, extended reality headsets and personal computers (PCs). Models like Stable Diffusion, with over 1 billion parameters, are already running on phones and PCs, and many other generative AI models with 10 billion parameters or more are slated to run on device in the near future.

The other vector is the reduction in the number of parameters a generative AI model needs to provide accurate results. Smaller models in the range of 1 to 10 billion parameters are already exhibiting accurate results and continue to improve.

A significant number of capable generative AI models can run on the device, offloading the cloud (graph assumes INT4 parameters).
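To make the INT4 assumption in the caption above concrete, the sketch below estimates the memory needed just to hold a model's weights at different precisions. It is back-of-the-envelope arithmetic rather than a measurement: it ignores activations, caches and runtime overhead, and the function name `weight_footprint_gb` and the chosen model sizes are illustrative assumptions, not taken from any Qualcomm tool.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# Hypothetical model sizes chosen to bracket the sub-10 billion parameter range.
MODEL_SIZES = {"1B": 1e9, "7B": 7e9, "10B": 10e9}
PRECISIONS = {"FP16": 16, "INT8": 8, "INT4": 4}

for name, params in MODEL_SIZES.items():
    row = ", ".join(
        f"{label} {weight_footprint_gb(params, bits):.1f} GB"
        for label, bits in PRECISIONS.items()
    )
    print(f"{name} parameters: {row}")
```

Under these assumptions, the weights of a 7 billion parameter model shrink from roughly 14 GB at FP16 to roughly 3.5 GB at INT4, which is the kind of reduction that brings such models within reach of phone-class memory.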

How useful are sub-10 billion parameter models?

In a world where generative AI models continue to grow from tens of billions to hundreds of billions and even trillions of parameters, how useful can sub-10 billion parameter models be?

There is already a growing wave of sub-10 billion parameter large language models (LLMs), automatic speech recognition (ASR) models, programming models and language vision models (LVMs) that provide useful outputs covering a broad set of use cases. These smaller AI models are not exclusively proprietary or limited to a handful of leading AI companies. They are also being born out of open-source foundation models, which a global open-source community develops and then further optimizes, driving model innovation and customization at a breakneck pace.

Publicly available foundation LLMs are often available in several sizes, such as Meta’s Llama 2 with parameter sizes of 7B, 13B and 70B. The bigger models are often more accurate and can be used for knowledge distillation, a machine learning technique that involves transferring knowledge from a large model to a smaller one, or for creating high-quality training examples.
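As a rough illustration of what "transferring knowledge" can mean in practice, the sketch below computes one common distillation objective: the student is penalized for diverging from the teacher's temperature-softened output distribution. This is a minimal sketch under stated assumptions. The post does not say which distillation formulation any particular model uses, and the logits, temperature and function names here are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


# Hypothetical logits over three classes for a single training example.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.0, 2.0, 3.0]

print("loss, student close to teacher:", distillation_loss(close_student, teacher))
print("loss, student far from teacher:", distillation_loss(far_student, teacher))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pulls the smaller model toward the larger model's behavior. In real training this term is usually combined with the ordinary loss on ground-truth labels.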

Considerable attention is going to improving imitation learning and knowledge distillation so that models can shed parameters while maintaining accuracy and reasoning capability. Recently, Microsoft introduced Orca, a 13 billion parameter model that aims to do exactly that by “imitating the logic and reasoning of larger models.”1 It learns from GPT-4’s step-by-step explanations and surpasses conventional state-of-the-art instruction-tuned models.

Open-source foundation models are commonly fine-tuned with targeted datasets for a particular use case, topic or subject matter to create domain-specific applications, often outperforming the original foundation model. The cost and the amount of time needed to fine-tune models continue to decrease with techniques such as low-rank adaptation (LoRA).
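The reason LoRA lowers fine-tuning cost can be seen with a little arithmetic. Instead of updating a full weight matrix W, LoRA freezes W and trains two small matrices, A and B, whose product forms a low-rank update. The sketch below is a minimal pure-Python illustration: the 4096 x 4096 layer size, the rank of 8 and the helper names are assumptions chosen for the example, not figures from this post.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two LoRA factors: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# Illustrative layer size and rank (assumptions, not from the post).
d_out, d_in, rank = 4096, 4096, 8
full = d_out * d_in
lora = lora_trainable_params(d_out, d_in, rank)
print(f"full fine-tuning: {full:,} trainable parameters in this layer")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters ({100 * lora / full:.2f}% of full)")

# Tiny forward pass with a rank-1 update on a 2 x 2 layer.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]        # rank x d_in
B = [[0.5], [-0.5]]     # d_out x rank
print(lora_forward(W, A, B, x=[2.0, 3.0], alpha=2.0, rank=1))
```

With these illustrative numbers, the trainable parameters for the layer drop from about 16.8 million to about 65 thousand, which is why both the time and the memory needed for fine-tuning fall so sharply.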

Given their more focused purview, these fine-tuned models can be smaller than the larger foundation models while still generating highly valuable and actionable outputs.

It is worth noting that other techniques are also used to customize and shrink models while maintaining accuracy. These techniques, along with knowledge distillation, LoRA and fine-tuning, will be explored further in future AI on the Edge posts.

Stable Diffusion images generated with the prompt: “Super cute fluffy cat warrior in armor, photorealistic, 4K, ultra detailed, vray rendering.”

What can these sub-10 billion parameter models do?

Along with LLMs and LVMs, there are other classes of generative AI models for specific use cases such as the aforementioned ASR, real-time translation and text to speech (TTS).

The following are just a few examples of these types of sub-10 billion parameter models and their various use cases.

- Llama 2, a family of publicly available foundation LLMs developed by Meta, includes a 7 billion parameter model that is the basis for many other LLMs that span use cases from answering general knowledge questions to programming and summarizing content. Llama-2-Chat has been fine-tuned for chat use cases based on “publicly available instruction datasets and over 1 million human annotations” and has been tested across various benchmarks for reasoning, coding, proficiency and knowledge.2 Code Llama, which is built on Llama 2, is a state-of-the-art LLM capable of generating code and natural language about code.

- Gecko is a proprietary Google-developed LLM with fewer than 2 billion parameters and is part of the PaLM 2 family of foundation LLMs. Gecko is designed to summarize text and help write emails and text messages. PaLM 2 has been trained on multilingual text spanning more than 100 languages, scientific papers and mathematical expressions for improved reasoning and source code datasets for improved coding capabilities.3

- Stable Diffusion (version 1.5), developed by Stability AI, is a 1 billion parameter open-source LVM that has been fine-tuned for image generation based on short text prompts. Using quantization (a model efficiency technique) to further shrink the memory footprint, Qualcomm Technologies demonstrated Stable Diffusion at Mobile World Congress 2023 running on a smartphone powered by a Snapdragon 8 Gen 2 processor while in airplane mode (i.e., with no Internet connection). ControlNet, a 1.5 billion parameter open-source generative AI image-to-image model, builds on top of Stable Diffusion and allows more precise control over image generation by conditioning on an input image and an input text description; we demonstrated this running on a smartphone at CVPR 2023.4

- Whisper, developed by OpenAI, is an open-source 1.6 billion parameter ASR model that enables transcription in multiple languages as well as translation from those languages to English.5

- DoctorGPT is an open-source 7 billion parameter LLM that has been fine-tuned from Llama 2 with a medical dialogue dataset to act as a medical assistant and is capable of passing the United States Medical Licensing Exam.6 The ability to fine-tune foundation models for specific domains is a very powerful and disruptive capability — this is just one of many examples of fine-tuned chatbots being created from foundation models.

- Ernie Bot Turbo is an LLM developed by Baidu that is available in 3 billion, 7 billion and 10 billion parameter versions. This model has been trained on large Chinese language datasets to deliver “stronger dialogue question and answer, content creation and generation capabilities, and faster response speed” for Chinese-speaking customers.7 It is primarily for use by Chinese device manufacturers as an alternative to ChatGPT and other LLMs that have been trained primarily on English datasets.

There are many other sub-10B parameter generative AI models available, such as BLOOM (1.5B), ChatGLM (7B), and GPT-J (6B). The list is growing and changing so quickly that it is hard to keep track of all the innovation happening in the world of generative AI.
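The Stable Diffusion entry above mentions quantization as a way to shrink a model's memory footprint. The sketch below shows the basic idea with symmetric per-tensor quantization: floating-point weights are mapped to small integers plus a single scale factor, then mapped back when needed. It is a simplified illustration under stated assumptions. The post does not describe the scheme used in the demonstration, the weight values and function names are hypothetical, and production toolchains generally use more refined approaches.

```python
def quantize_symmetric(weights, bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical weight values for illustration only.
weights = [0.12, -0.5, 0.33, 0.0, 0.49]

for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_error = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"INT{bits}: integers={q}, scale={scale:.5f}, max error={max_error:.5f}")
```

Fewer bits mean a smaller footprint but a coarser grid, so the reconstruction error grows as precision drops. Managing that trade-off without hurting output quality is what makes quantization a model efficiency technique rather than a simple compression step.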

Generative AI models under 10 billion parameters will be able to run on a variety of edge devices, including phones, PCs, XR headsets, vehicles, and IoT.

With plenty of interesting models under 10 billion parameters, what devices will run them?

Edge devices such as smartphones, tablets and PCs have an installed base of billions of units and an established annual cadence of new, higher-performing upgrades. While a large portion of these devices already take advantage of some form of AI, devices capable of running sub-10 billion parameter generative AI models have reached the market only over the past year. Even in that short time frame, millions of such devices have entered the installed base.

For example, as previously mentioned, devices with a Snapdragon 8 Gen 2 processor can run a 1 billion parameter version of Stable Diffusion completely on device. LLMs with 7 billion parameters are also already running on the Samsung Galaxy S23 smartphone powered by Snapdragon. The number of devices with these capabilities will only increase as natural replacement cycles refresh the installed base.

With the increasing availability of open-source foundation models, the rapid innovation in the AI research and open-source communities, and the falling cost of fine-tuning generative AI models, we are seeing a growing wave of small yet accurate generative AI models that cover a broad set of use cases and are customized for specific domains. It’s an exciting time for on-device generative AI and the possibilities are virtually limitless.

References

1. Sen, A. (Jun 27, 2023). Microsoft releases new open source AI model Orca. Retrieved on Aug 25, 2023 from https://www.opensourceforu.com/2023/06/microsoft-releases-open-source-ai-model-orca/

2. (Jul 18, 2023). Llama 2: Open foundation and fine-tuned chat models. Retrieved on Aug 21, 2023 from https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/

3. Ghahramani, Z. (May 10, 2023). Introducing PaLM 2. Retrieved on Aug 21, 2023 from https://blog.google/technology/ai/google-palm-2-ai-large-language-model/

4. (Apr 22, 2023). Below is ControlNet 1.0. Retrieved on Aug 29, 2023 from https://github.com/lllyasviel/ControlNet

5. (Sep 21, 2022). Introducing Whisper. Retrieved on Aug 21, 2023 from https://openai.com/research/whisper

6. Open sourced. (Aug 2023). Doctor GPT. Retrieved on Aug 21, 2023 from https://github.com/llSourcell/DoctorGPT

7. (Aug 16, 2023). ERNIE-Bot-turbo. Retrieved on Aug 29, 2023 from https://cloud.baidu.com/doc/WENXINWORKSHOP/s/4lilb2lpf

Pat Lawlor

Director, Technical Marketing, Qualcomm Technologies

Jerry Chang

Senior Manager, Marketing, Qualcomm Technologies