By Eric Gregori

BDTI

This article was originally published at EE Times' Embedded.com Design Line. It is reprinted here with the permission of EE Times.

You can now hold in the palm of your hand computing power that required a desktop PC form factor just a decade ago. And with its contributions to the development of open-source OpenCV4Android, NVIDIA has brought the power of the OpenCV computer vision library to the smartphone and tablet.

The tools described in this article give computer vision experimenters, academics, and professionals alike an opportunity to do robust development on low-cost, full-featured hardware and software.

The present and future of embedded vision

Beginning in 2011, the number of smartphones shipped exceeded shipments of client PCs (netbooks, nettops, notebooks and desktops). [1] And nearly three quarters of all smartphones sold worldwide during the third quarter of 2012 were based on Google’s Android operating system. [2] Analysts forecast that more than 1 billion smartphones and tablets will be purchased in 2013. [3]

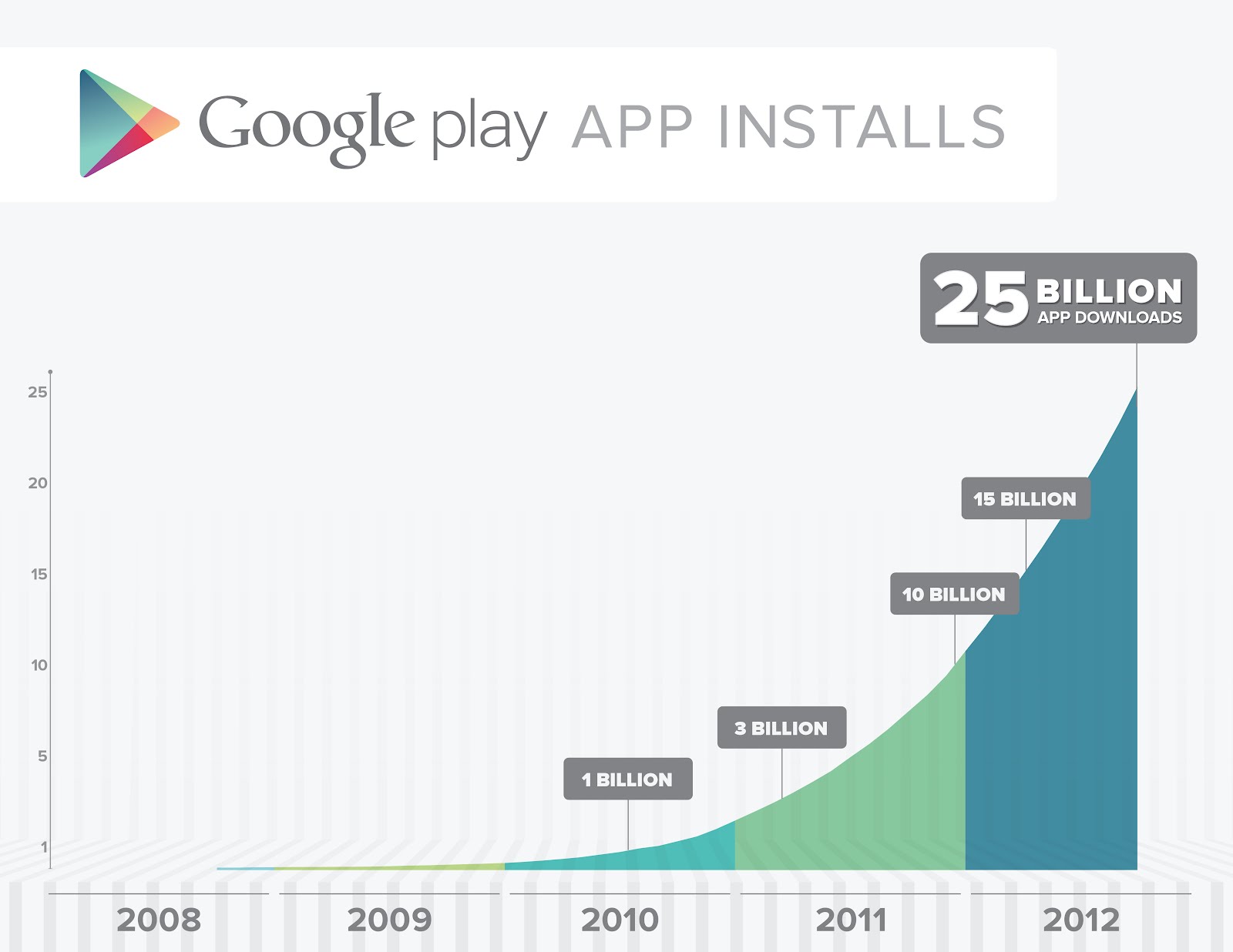

Statistics such as these suggest that smartphones and tablets, particularly those based on Android, are becoming the dominant computing platforms. This trend is occurring largely because the performance of these devices is approaching that of laptops and desktops. [4] Reflective of smartphone and tablet ascendance, Google announced that as of September 2012 more than 25 billion apps had been downloaded from Google's online application store, Google Play (Figure 1). [5]

Figure 1: Google's Play online application store has experienced dramatic usage growth

The term “embedded vision” refers to the use of computer vision technology in systems other than conventional computers. Stated another way, “embedded vision” refers to non-computer systems that extract meaning from visual inputs. Vision processing algorithms were originally only capable of being implemented on costly, bulky, and power-hungry high-end computers. As a result, computer vision has historically been primarily confined to a scant few application areas such as factory automation and military equipment.

However, mainstream systems such as smartphones and tablets now include SoCs with dual- and quad-core GHz+ processors, along with integrated GPUs containing abundant processing capabilities and dedicated image processing function blocks. Some SoCs even embed general-purpose DSP cores. The raw computing power in the modern-day mobile smartphone and tablet is now at the point where the implementation of embedded vision is not just possible but practical. [6] NVIDIA recognized the power of mobile embedded vision in 2010 and began contributing to the OpenCV computer vision library, to port code originally intended for desktop PCs to the Android operating system.

OpenCV

The OpenCV (Open Source Computer Vision) Library was created to provide a common resource for diverse computer vision applications and to accelerate the use of computer vision in everyday products. [7] OpenCV is distributed under the liberal BSD open-source license and is free for commercial or research use.

Top universities and Fortune 100 companies, among other sources, develop and maintain the 2,500 algorithms contained in the library. OpenCV is written in C and C++, but its application programming interface (API) also includes "wrappers" for Java, MATLAB and Python. Community-supported ports currently exist for Windows, Linux, Mac OS X, Android, and iOS platforms. [8]

The OpenCV library has been downloaded more than five million times and is popular with both academics and design engineers. Quoting from the Wiki for the Google Summer of Code 2010 OpenCV project (http://opencv.willowgarage.com/wiki/GSOC_OpenCV2010), “OpenCV is used extensively by companies, research groups, and governmental bodies. Some of the companies that use OpenCV are Google, Yahoo, Microsoft, Intel, IBM, Sony, Honda, and Toyota. Many startups such as Applied Minds, VideoSurf, and Zeitera make extensive use of OpenCV. OpenCV's deployed uses span the range from stitching Streetview images together, detecting intrusions in surveillance video in Israel, monitoring mine equipment in China (more controversially, OpenCV is used in China's "Green Dam" internet filter), helping robots navigate and pick up objects at Willow Garage, detection of swimming pool drowning events in Europe, running interactive art in Spain and New York, checking runways for debris, inspecting labels on products in factories around the world on to rapid face detection in Japan.”

With the creation of the OpenCV Foundation in 2012, OpenCV has a new face and a new infrastructure [9, 10]. It now encompasses more than 40 different "builders", which test OpenCV in various configurations on different operating systems, both mobile and desktop. A "builder" is an environment used to build the library under a specific configuration; the goal is to verify that the library builds correctly under that configuration. A "binary compatibility builder" also exists, which evaluates binary compatibility of the current snapshot against the latest OpenCV stable release, along with a documentation builder that creates reference manuals and uploads them to the OpenCV website.

OpenCV4Android

OpenCV4Android is the official name of the Android port of the OpenCV library. [11] OpenCV began supporting Android in a limited "alpha" fashion in early 2010 with OpenCV version 2.2. [12] NVIDIA subsequently joined the project and accelerated the development of OpenCV for Android by releasing OpenCV version 2.3.1 with beta Android support. [13] This initial beta version included an OpenCV Java API and native camera support. The first official non-beta release of OpenCV for Android was in April 2012, with OpenCV 2.4. At the time of this article's publication, OpenCV 2.4.3 has just been released, with even more Android support improvements and features, including a Tegra 3 SoC-accelerated version of OpenCV.

OpenCV4Android supports two languages, Java and C++, for coding OpenCV applications to run on Android-based devices. [14] The easiest way to develop your code is to use the OpenCV Java API. OpenCV exposes most (but not all) of its functions to Java. This incomplete implementation can pose problems if you need an OpenCV function that has not yet received Java support. Before choosing Java for an OpenCV project, then, you should check that the OpenCV Java API includes the functions your project may require.

OpenCV Java API

With the Java API, each ported OpenCV function is "wrapped" with a Java interface. The OpenCV function itself, on the other hand, is written in C++ and compiled. In other words, all of the actual computations are performed at a native level. However, the Java wrapper results in a performance penalty in the form of JNI (Java Native Interface) overhead, which occurs twice: once at the start of each call to the native OpenCV function and again after each OpenCV call (i.e. during the return). This performance penalty is incurred for every OpenCV function called; the more OpenCV functions called per frame, the bigger the cumulative performance penalty.

Although applications written using the OpenCV Java API run under the Android Dalvik virtual machine, for many applications the performance decrease is negligible. Figure 2 shows an OpenCV for Android application written using the Java API. This application calls three OpenCV functions per video frame. The ellipses highlight each cluster of two JNI penalties (entry and exit); this particular application will incur six total JNI call penalties per frame.

Figure 2: When using the Java API, each OpenCV function call incurs JNI overhead, potentially decreasing performance

A slightly more difficult but more performance-optimized development method uses the Android NDK (Native Development Kit). In this approach, the OpenCV vision pipeline code is written entirely in C++, with direct calls to OpenCV. You simply encapsulate all of the OpenCV calls in a single C++ class and call it once per frame. With this method, only two JNI call penalties are incurred per frame, so the per-frame JNI performance penalty is significantly reduced. Java is still used for non-vision portions of the application, including the GUI.

Using this method, you first develop and test the OpenCV implementation of your algorithm on a host platform. Once your code works the way you want it to, you simply copy the C++ implementation into an Android project and rebuild it using the Android tools. You can also easily port the C++ implementation to another platform such as iOS by rebuilding it with the correct tools in the correct environment.
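To make the single-entry-point idea concrete, the following minimal sketch shows what the Java side of such an application might look like. The class name `VisionPipeline`, the method `processFrame()`, and the library name `vision_pipeline` are hypothetical, chosen only for illustration, and the two `long` arguments are assumed to carry native image addresses into the C++ code. The point is simply that Java declares one native method and calls it once per frame, so every OpenCV call stays on the C++ side.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

/**
 * Hypothetical Java-side facade for a native vision pipeline.
 * All names here (VisionPipeline, processFrame, "vision_pipeline") are
 * illustrative assumptions, not part of OpenCV4Android.
 */
final class VisionPipeline {
    private VisionPipeline() {}

    /** Loads the assumed native library; call once before processFrame(). */
    static void load() {
        System.loadLibrary("vision_pipeline"); // hypothetical library name
    }

    /**
     * The only JNI entry point, called once per frame. The C++ side would
     * run every OpenCV call for that frame before returning.
     */
    static native void processFrame(long inputImageAddr, long outputImageAddr);

    /** Counts the native (JNI) entry points this class declares. */
    static int nativeEntryPoints() {
        int count = 0;
        for (Method m : VisionPipeline.class.getDeclaredMethods()) {
            if (Modifier.isNative(m.getModifiers())) {
                count++;
            }
        }
        return count;
    }
}
```

On a device, the application would call `VisionPipeline.load()` once at startup and then `processFrame()` from its per-frame camera callback; `nativeEntryPoints()` exists only to make the "one JNI call per frame" design checkable.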

Figure 3 shows the same OpenCV for Android application as in Figure 2, but this time written using the native C++ API. It calls three OpenCV functions per video frame. The ellipse highlights the resulting JNI penalties. The OpenCV portion of the application is written entirely in C++, thereby incurring no JNI penalties between OpenCV calls. Using the native API for this application reduces the per-video frame JNI penalties from six to two.

Figure 3: Using native C++ to write the OpenCV portion of your application reduces JNI calls, optimizing performance
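The penalty counts quoted for Figures 2 and 3 follow from simple arithmetic, sketched below. The class and method names are illustrative only, and the per-penalty cost passed to `overheadMicros()` is a placeholder to be replaced by a measurement on your own device, not a figure from this article.

```java
/** Back-of-the-envelope model of the per-frame JNI penalties described above. */
final class JniPenaltyModel {
    /** Each JNI-wrapped call costs two penalties: one on entry, one on return. */
    static final int PENALTIES_PER_JNI_CALL = 2;

    private JniPenaltyModel() {}

    /** Java API: every OpenCV function call crosses the JNI boundary separately. */
    static int javaApiPenalties(int openCvCallsPerFrame) {
        return PENALTIES_PER_JNI_CALL * openCvCallsPerFrame;
    }

    /** Native pipeline: one JNI call per frame, however many OpenCV calls it wraps. */
    static int nativePipelinePenalties() {
        return PENALTIES_PER_JNI_CALL;
    }

    /** Estimated JNI overhead per frame for an assumed (measured) cost per penalty. */
    static double overheadMicros(int penalties, double assumedMicrosPerPenalty) {
        return penalties * assumedMicrosPerPenalty;
    }
}
```

For the three-call application, `javaApiPenalties(3)` gives six penalties against two for the native pipeline; for a single-call application the two approaches are equal.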

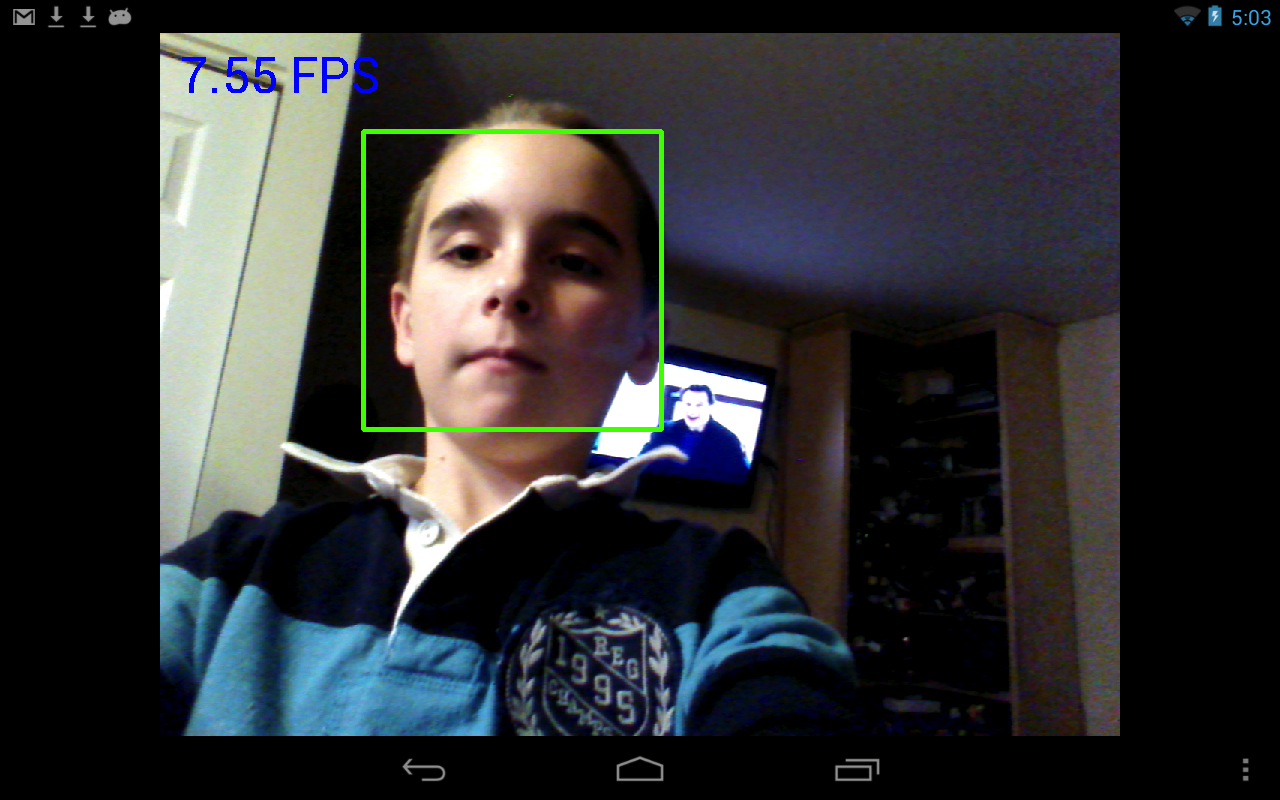

Figure 4 shows the OpenCV4Android face detection demo running on an NVIDIA Tegra 3-based Nexus 7 tablet. The demo has two modes: Java mode uses the Java API, while native mode uses the C++ API. Note the frame rates in the two screen snapshots: in this case, the OpenCV face detection Java API is performing at the same frame rate as the C++ API.

Figure 4: A face detection algorithm implemented using OpenCV4Android delivers identical performance in Java mode (a) and native mode (b): 7.55 fps

How is this possible, considering the previously discussed performance discrepancies between Java and C++ API development? Unlike the previous application, this example implements only one function call (detectMultiScale()) per frame, for face detection purposes. Calling only a single OpenCV function, regardless of whether you're using the native C++ or Java API, incurs the same two JNI call penalties.

Any slight difference in performance between the two modes in a case like this is most likely the result of the number of parameters that have to pass through the JNI interface. The native C++ face detector call only has two parameters; the remainder are passed during the initialization phase. The Java API detectMultiScale() call, on the other hand, passes seven parameters through the JNI interface.

The OpenCV Manager

With the release of OpenCV version 2.4.2, NVIDIA introduced the OpenCV Manager, an application that can be downloaded and installed on an Android device from the Google Play Store or directly installed using the Tegra Android Development Pack (Figure 5). Once installed, the OpenCV Manager manages the OpenCV libraries on the device, automatically updating them to the latest versions and selecting the one that is optimized for the device.

Figure 5: NVIDIA's OpenCV Manager is available for download from the Google Play store

The best practice for Android OpenCV applications is to use dynamically linked versions of the OpenCV libraries. Said another way, the OpenCV libraries should not be a part of the application (i.e., statically linked); instead, they should be dynamically linked (i.e., runtime linked) when the application is executed. The biggest advantage of dynamically linking the application to an OpenCV library involves updates. If an application is statically linked to the OpenCV library, the library and application must be updated together. Therefore, every time a new version of OpenCV is released, if the application is dependent on changes in the new version (bug fixes, for example), the application must also be upgraded and re-released. With dynamic linking, on the other hand, the application is only released once. Subsequent OpenCV updates do not require application upgrades.

An additional advantage of dynamic linking in conjunction with the OpenCV Manager involves the latter's automatic hardware detection feature. The OpenCV Manager automatically detects the platform it is installed on and selects the optimum OpenCV library for that hardware. Prior to NVIDIA's release of the OpenCV Manager, no mechanism existed for selecting the optimum library for a particular hardware platform. Instead, the application developer had to release multiple versions of the application for various hardware types. [15]
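The idea of matching a library build to the hardware can be illustrated with a small sketch. This is not the OpenCV Manager's actual selection logic, which this article does not describe; it is a simplified model that assumes the device reports its supported CPU ABIs in order of preference and that one library build exists per ABI. The class and method names are hypothetical, and the ABI strings are the directory names used in the OpenCV4Android SDK.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

/** Simplified, hypothetical model of choosing a library build for a device. */
final class LibrarySelector {
    private LibrarySelector() {}

    /**
     * Returns the first ABI in the device's preference order for which a
     * library build is available, or empty if none matches.
     */
    static Optional<String> selectAbi(List<String> deviceAbisByPreference,
                                      Set<String> availableBuilds) {
        for (String abi : deviceAbisByPreference) {
            if (availableBuilds.contains(abi)) {
                return Optional.of(abi);
            }
        }
        return Optional.empty();
    }
}
```

Under these assumptions, a device that prefers `armeabi-v7a` but also accepts `armeabi` would receive the `armeabi-v7a` build when one is available and fall back to `armeabi` otherwise.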

OpenCV for Android Tutorials

The OpenCV4Android project has developed a series of tutorials that walk the reader through the process of creating an OpenCV4Android host build machine and developing an OpenCV4Android application. The root node of the documentation tree can be found in the Android section of the OpenCV website.

The first tutorial, "Introduction into Android Development," covers two methods of creating an Android host build machine. The automatic method, using NVIDIA’s TADP, is described later. The manual method requires that you install the following software:

- Sun/Oracle JDK 6

- Android SDK

- Android SDK components

- Eclipse IDE

- ADT plugin for Eclipse

- Android NDK

- CDT plugin for Eclipse

The next tutorial, "OpenCV for Android SDK," covers the OpenCV4Android SDK package, which enables development of Android applications using the OpenCV library.

The SDK structure is illustrated here: [16]

    OpenCV-2.4.3-android-sdk
    |_ apk
    |   |_ OpenCV_2.4.3_binary_pack_XXX.apk
    |   |_ OpenCV_2.4.3_Manager.apk
    |
    |_ doc
    |_ samples
    |_ sdk
    |    |_ etc
    |    |_ java
    |    |_ native
    |          |_ 3rdparty
    |          |_ jni
    |          |_ libs
    |               |_ armeabi
    |               |_ armeabi-v7a
    |               |_ x86
    |
    |_ license.txt
    |_ README.android

- The sdk folder contains the OpenCV API and libraries for Android.

- The sdk/java folder contains an Android library Eclipse project, providing an OpenCV Java API that can be imported into a developer’s workspace.

- The sdk/native folder contains OpenCV C++ headers (for JNI code) and native Android libraries (*.so and *.a) for ARM-v5, ARM-v7a and x86 architectures.

- The sdk/etc folder contains the Haar and LBP cascades distributed with OpenCV.

- The apk folder contains Android packages that should be installed on the target Android device to enable OpenCV library access via the OpenCV Manager API. On production devices that have access to the Internet and the Google Play Market, these packages will be installed from the Market on the first application launch, via the OpenCV Manager API. Development kits without Internet and Market connections require these packages to be manually installed. Specifically, you must install the Manager.apk and the corresponding binary_pack.apk, which depends on the device CPU (the Manager GUI provides this information). However, installation from the Internet is the preferable approach, since the OpenCV team may publish updated versions of various packages via the Google Play Market.

- The samples folder contains sample application projects and their prebuilt packages (APKs). Import them into an Eclipse workspace and browse the code to learn ways of using OpenCV on Android.

- The doc folder contains OpenCV documentation in PDF format. This documentation is also available online on the OpenCV website, as is the most recent (i.e. nightly build) documentation. Although the nightly build documentation is generally more up-to-date, it can refer to not-yet-released functionality.

Beginning with version 2.4.3, the OpenCV4Android SDK uses the OpenCV Manager API for library initialization.

Finally, the "Android Development with OpenCV" tutorial walks the reader through how to create his or her first OpenCV4Android application. This tutorial covers both Java and native development, using the Eclipse-based tools. This tutorial also provides a framework for binding to the OpenCV Manager, to take advantage of the dynamic OpenCV libraries. The example code snippet that follows is reproduced from the OpenCV website. [17]

    public class MyActivity extends Activity implements HelperCallbackInterface
    {
        // TAG, mView and mAppContext are assumed to be declared elsewhere in the activity.
        private BaseLoaderCallback mOpenCVCallBack = new BaseLoaderCallback(this) {
            @Override
            public void onManagerConnected(int status) {
                switch (status) {
                    case LoaderCallbackInterface.SUCCESS:
                    {
                        Log.i(TAG, "OpenCV loaded successfully");
                        // Create and set View
                        mView = new puzzle15View(mAppContext);
                        setContentView(mView);
                    } break;
                    default:
                    {
                        // Let the base class handle every other status
                        super.onManagerConnected(status);
                    } break;
                }
            }
        };

        /** Call on every application resume **/
        @Override
        protected void onResume()
        {
            Log.i(TAG, "called onResume");
            super.onResume();

            Log.i(TAG, "Trying to load OpenCV library");
            if (!OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_2_4_2, this, mOpenCVCallBack))
            {
                Log.e(TAG, "Cannot connect to OpenCV Manager");
            }
        }
    }
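The control flow of the snippet above can be explored off-device with a plain Java model. The sketch below is not the OpenCV API: the class `LoaderHandshakeSketch`, its status codes, and its version check are all hypothetical stand-ins, and the rule that an installed version must be at least the requested one is an assumption made for illustration. It shows only the two outcomes the snippet handles, namely an immediate `false` when no manager can be reached, and a status delivered later through the callback.

```java
/** Simplified, hypothetical model of the loader handshake; not the OpenCV API. */
final class LoaderHandshakeSketch {
    static final int STATUS_SUCCESS = 0;       // illustrative status codes,
    static final int STATUS_INCOMPATIBLE = 1;  // not OpenCV's actual values

    /** Receives the outcome once the (simulated) manager has answered. */
    interface Callback {
        void onManagerConnected(int status);
    }

    private final String installedVersion; // null models "no manager on the device"

    LoaderHandshakeSketch(String installedVersion) {
        this.installedVersion = installedVersion;
    }

    /**
     * Returns false if no manager can be reached; otherwise reports a status
     * through the callback (synchronously here, asynchronously on a device).
     */
    boolean initAsync(String requiredVersion, Callback callback) {
        if (installedVersion == null) {
            return false;
        }
        boolean ok = compareVersions(installedVersion, requiredVersion) >= 0;
        callback.onManagerConnected(ok ? STATUS_SUCCESS : STATUS_INCOMPATIBLE);
        return true;
    }

    /** Compares dotted versions such as "2.4.3" and "2.4.2" numerically. */
    static int compareVersions(String a, String b) {
        String[] pa = a.split("\\.");
        String[] pb = b.split("\\.");
        for (int i = 0; i < Math.max(pa.length, pb.length); i++) {
            int x = i < pa.length ? Integer.parseInt(pa[i]) : 0;
            int y = i < pb.length ? Integer.parseInt(pb[i]) : 0;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        return 0;
    }
}
```

In this model, an application built against version 2.4.2 keeps working when the simulated device holds 2.4.3, which mirrors the update advantage of dynamic linking described earlier.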

NVIDIA’s TADP (Tegra Android Development Pack)

NVIDIA has been a significant contributor to the OpenCV library since 2010. NVIDIA has continued its support of OpenCV and Android more generally with the TADP (see "Designing visionary mobile apps using Tegra Android Development Pack"). The development pack was originally intended only for general Android development. However, with release 2.0, OpenCV was added as part of the TADP download directly from NVIDIA.

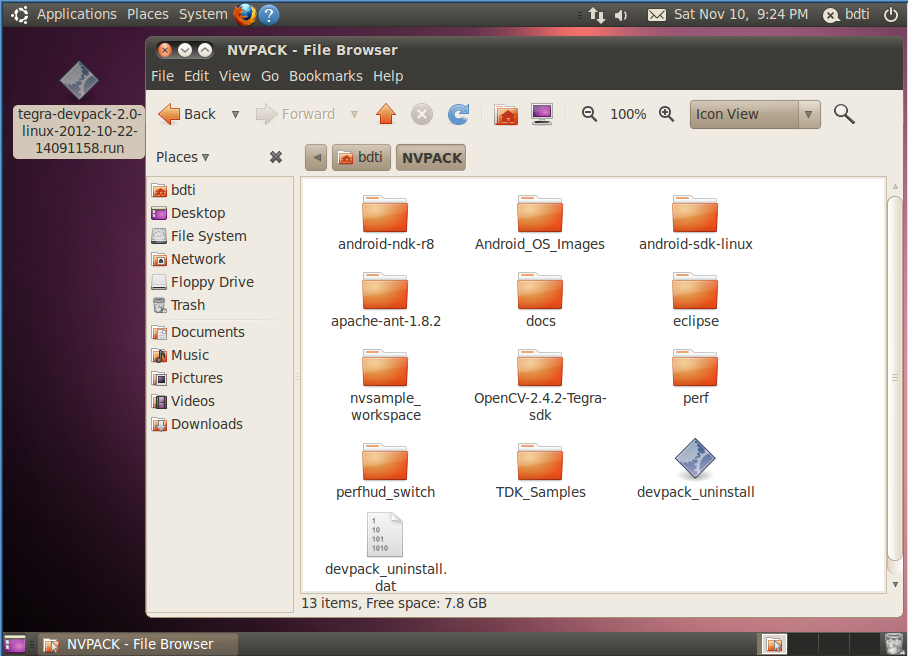

Per NVIDIA's documentation, the Tegra Android Development Pack 2.0 installs all software tools required to develop for Android on NVIDIA’s Tegra platform. This suite of developer tools is targeted at Tegra devices, but will configure a development environment that will work with almost any Android device. TADP 2.0 is available on Windows, Mac OS X, Ubuntu Linux 32-bit and Ubuntu Linux 64-bit (Figure 6).

Figure 6: The TADP 2.0 can be installed on 32-bit Ubuntu Linux, along with other operating systems

Tegra Android Development Pack 2.0 features include:

Android development tools include

- Android SDK r18

- Android APIs

- Google USB Driver

- Android NDK r8

- JDK 6u24

- Cygwin 1.7

- Eclipse 3.7.1

- CDT 8.0.0

- ADT 18.0.0

- Apache Ant 1.8.2

- Python 2.7

Tegra libraries and tools include

- Nsight Tegra 1.0, Visual Studio Edition (Windows only)

- NVIDIA Debug Manager for Eclipse 12.0.1

- PerfHUD ES 1.9.7

- Tegra Profiler 1.0

- Perf for Tegra

- OpenCV for Tegra 2.4.2

Tegra samples, documentation and OS images include

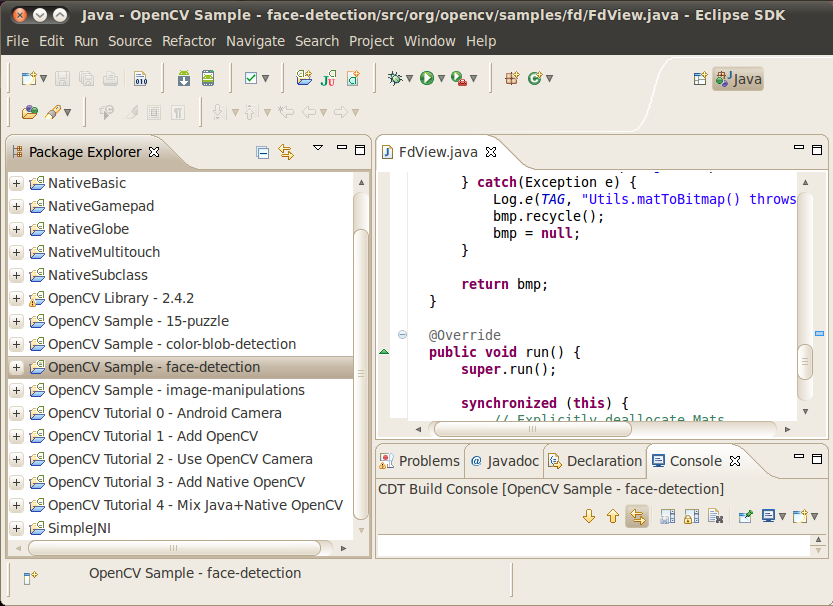

- Tegra SDK samples (all of which can also be imported into an Eclipse workspace, see Figure 7)

- Tegra SDK documentation

- Tegra Android OS images for Cardhu, Ventana and Enterprise development kits

Figure 7: TADP examples can also be imported into an Eclipse workspace

OpenCV for Tegra

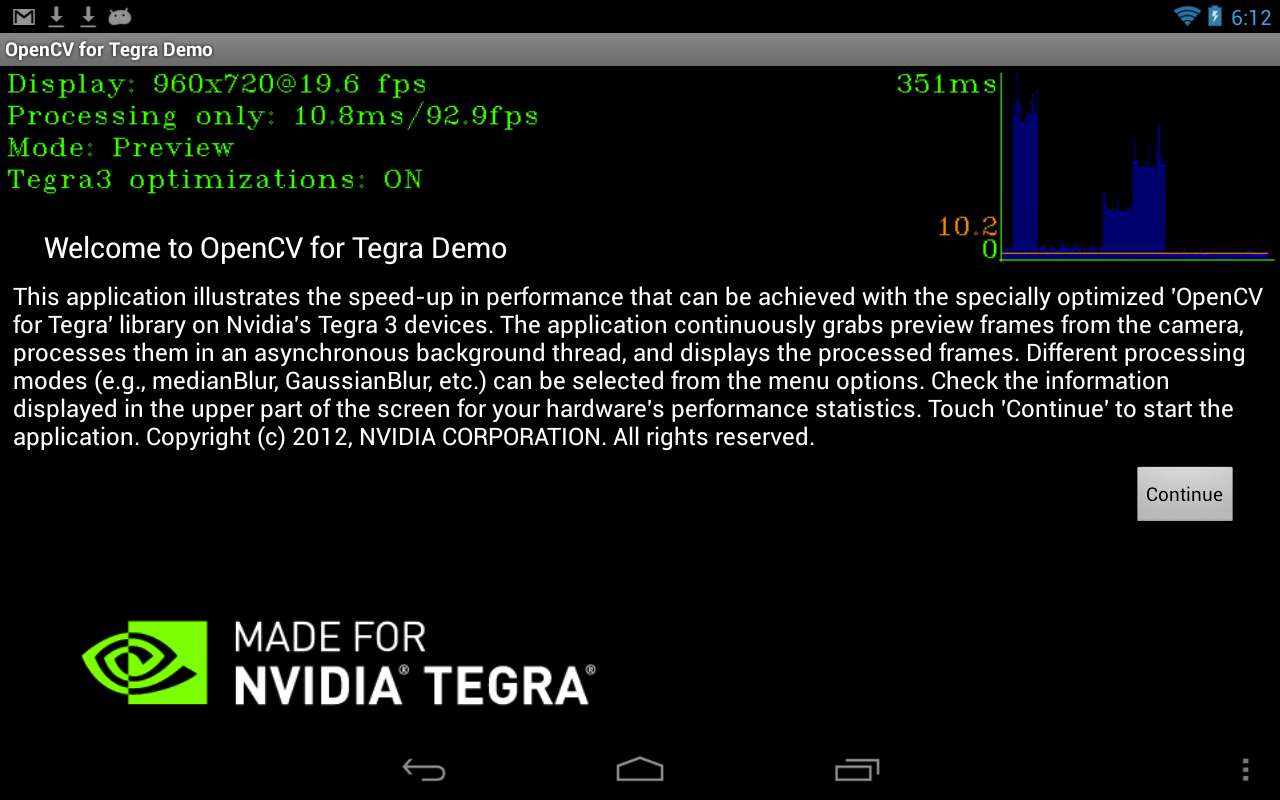

OpenCV for Tegra is a version of OpenCV for Android that NVIDIA has optimized for Tegra 3 platforms running the Android operating system. It currently supports Android API levels 9 through 16, and contains optimizations that enable OpenCV for Tegra to often run several times faster on Tegra 3 than does the generic open-source OpenCV for Android implementation. The TADP includes an SDK package for OpenCV for Tegra.

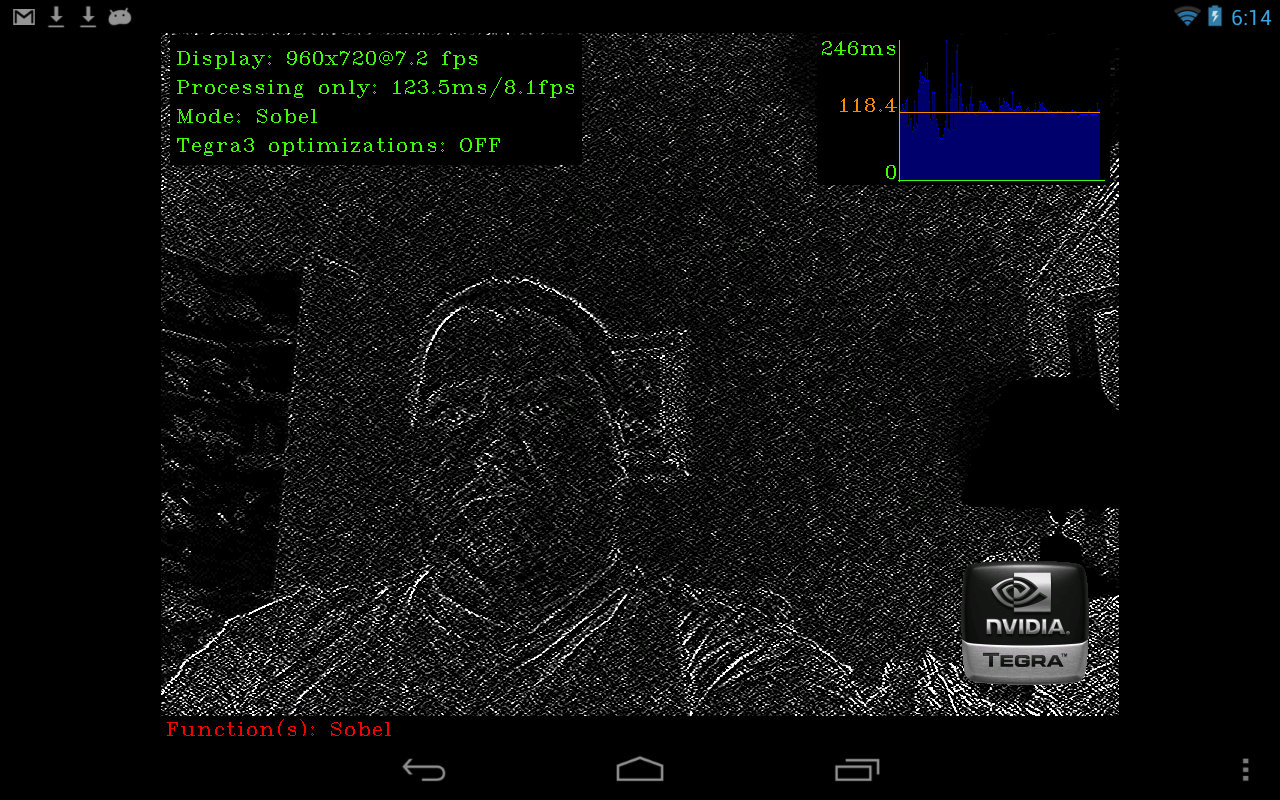

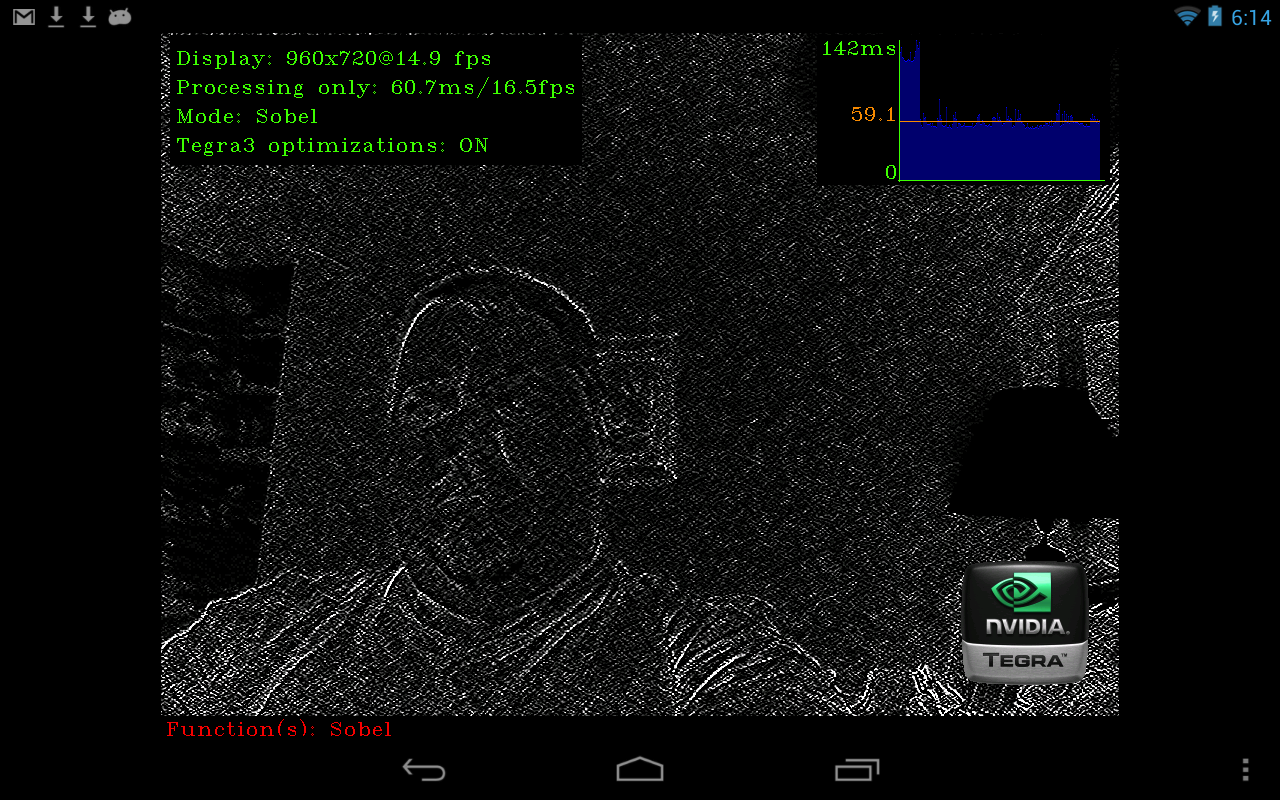

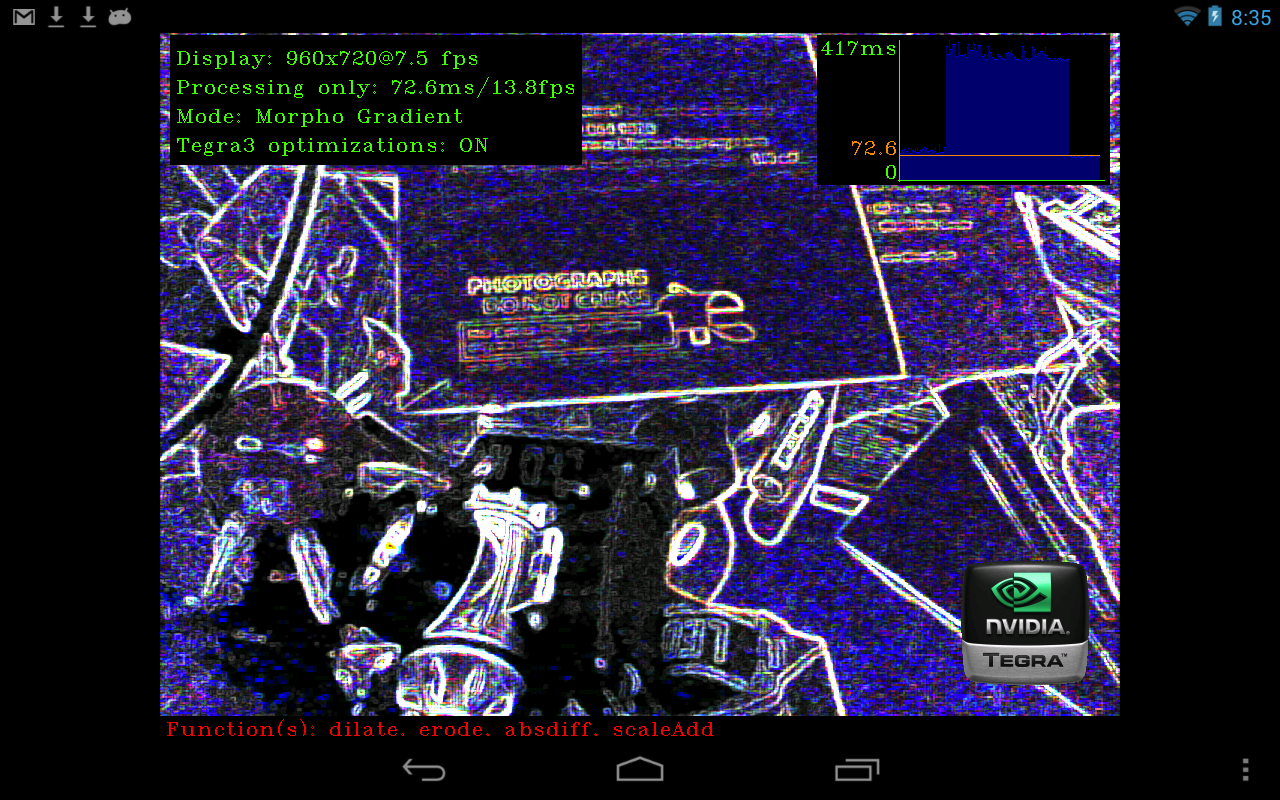

Figure 8 shows the OpenCV for Tegra Demo available for download from the Google Play store. Only the Sobel and Morphology algorithms are shown, although the demo supports additional algorithms such as various blurs and optical flow. The screen shots show performance both with and without Tegra optimizations enabled. Notice in Figures 8b and 8c that the Sobel algorithm runs twice as fast using the NVIDIA optimized version of OpenCV. Figures 8d and 8e show edge detection using morphology operators. The operations are listed in red at the bottom of the image. In this case, the NVIDIA-optimized OpenCV library executes the specified operators five times faster than the standard ARM version of OpenCV.

Figure 8: The OpenCV for Tegra demo is also available on Google Play (a). The included Sobel algorithm can be run either with Tegra optimizations off (b) or on (c); in the latter case it's twice as fast. Similarly, the morphology algorithm is five times faster with optimizations on (e) than off (d).

Sample applications

The OpenCV4Android SDK includes four sample applications and five tutorials to help you get started in developing OpenCV applications for Android. The tutorials are meant to serve as frameworks or foundations for your specific application: simply open an appropriate tutorial project and start adding your code:

- Android Camera: This tutorial is a skeleton application for all of the others. It does not use OpenCV at all, but gives an example Android Java application working with a camera.

- Add OpenCV: This tutorial shows the simplest way to add a Java OpenCV call to the Android application.

- Use OpenCV Camera: This tutorial functions exactly the same as the previous one, but uses OpenCV’s native camera for video capture.

- Add Native OpenCV: This tutorial demonstrates how you can use OpenCV in the native part of your application, through JNI.

- Mix Java + Native OpenCV: This tutorial shows you how to use both the C++ and Java OpenCV APIs within a single application.

The sample applications, on the other hand, are complete applications that you can build and run:

- Image-manipulations: This sample demonstrates how you can use OpenCV as an image processing and manipulation library. It supports several filters and demonstrates color space conversions and working with histograms.

- 15-puzzle: This sample shows how you can implement a simple game with just a few calls to OpenCV. It is also available on Google Play.

- Face-detection: This sample is the simplest implementation of the face detection functionality on Android. It supports two modes of execution: an available-by-default Java wrapper for the cascade classifier, and a manually crafted JNI call to a native class which supports tracking. Even the Java version is able to deliver close to real-time performance on a Google Nexus One device.

- Color-blob-detection: This sample shows a trivial implementation of a color blob tracker. After the user points to a particular image region, the algorithm attempts to select the whole blob of a similar color.

Conclusion

We are at a notable point in the evolution of computing. Modern smartphones and tablets are now quite capable of running useful computer vision algorithms. And by delivering significant advancements to OpenCV4Android, NVIDIA has brought the power of OpenCV to the smartphone and tablet. Developers can implement their algorithms using either the Java or native C++ APIs. The Java API in particular opens computer vision to a whole new group of developers. This is a very exciting time to be involved with computer vision!

Eric Gregori is a Senior Software Engineer and Embedded Vision Specialist with Berkeley Design Technology, Inc. (BDTI), which provides engineering services for embedded vision applications. He is a robot enthusiast with over 17 years of embedded firmware design experience, with specialties in computer vision, artificial intelligence, and programming for Windows Embedded CE, Linux, and Android operating systems.

References

1. Smart phones overtake client PCs in 2011

2. Gartner Says Worldwide Sales of Mobile Phones Declined 3 Percent in Third Quarter of 2012; Smartphone Sales Increased 47 Percent

3. Gartner Says 821 Million Smart Devices Will Be Purchased Worldwide in 2012; Sales to Rise to 1.2 Billion in 2013

4. December 2012 Embedded Vision Alliance Member Summit Technology Trends Presentation (requires registration)

5. Google Play hits 25 billion downloads

6. July 2012 Embedded Vision Alliance Member Summit Technology Trends Presentation on OpenCL (requires registration)

7. Introduction To Computer Vision Using OpenCV (requires registration)

8. Home page of OpenCV.org

9. September 2012 Embedded Vision Summit Afternoon Keynote: Gary Bradski, OpenCV Foundation (requires registration)

10. July 2012 Embedded Vision Alliance Member Summit Keynote: Gary Bradski, Industrial Perception (requires registration)

11. Introduction into Android Development

12. OpenCV Change Logs

13. Android Release Notes 2.3.1 (beta1)

14. OpenCV4Android Usage Models

15. OpenCV4Android Reference

16. OpenCV4Android SDK

17. Android development with OpenCV