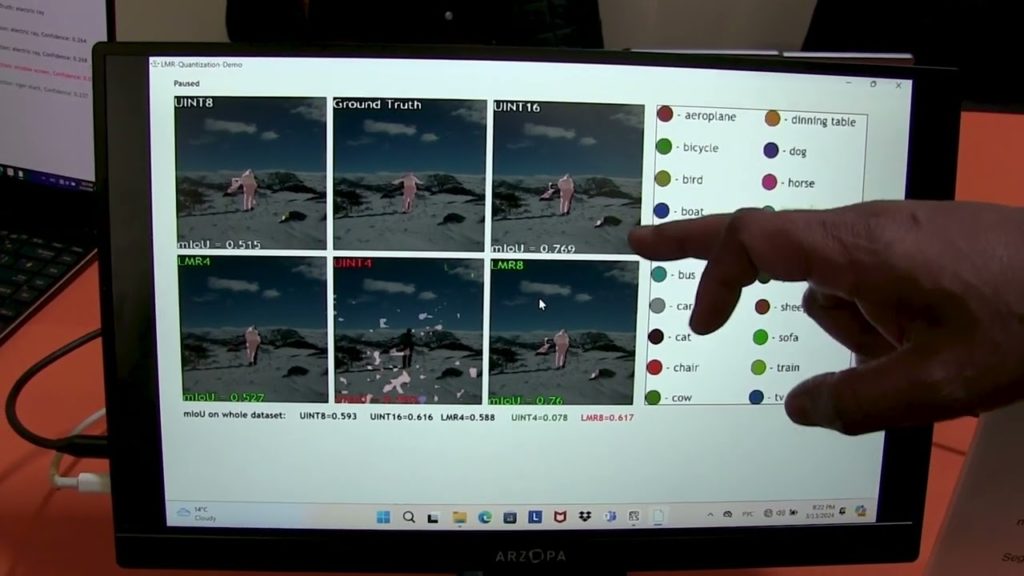

Noman Hashim, CEO of Lemur Imaging, demonstrates the company’s latest edge AI and vision technologies and products at the March 2024 Edge AI and Vision Alliance Forum. Specifically, Hashim demonstrates Lemur Imaging’s high-quality memory reduction (LMR) image compression technology on different networks, showing that compression outperforms quantization in edge AI subsystems.

The image quality delivered by Lemur’s compression technology preserves the data needed for popular vision detection models, such as ImageNet, YOLOv8 and ResNet, to deliver better performance with 8-bit compressed data than with INT8 data. In addition, 4-bit compressed data gives almost the same level of detection quality as INT8, with 50% less power consumption and silicon area.

Lemur Imaging has developed the most compact, high-performance compression core in the industry, with core sizes under 10K ASIC gates and latency of just a few clock cycles, making it suitable for low-cost, high-performance edge AI SoCs. The IP core is available now for SoC integration, and customers can evaluate the IP via a bit-exact software simulator that comes preloaded with networks both with and without LMR, allowing them to test the IP with their own data sets.