This article was originally published at PathPartner Technology's website. It is reprinted here with the permission of PathPartner Technology.

Object detection/classification is a supervised learning process in machine vision for recognizing patterns or objects in images or other data. It is a major component of Advanced Driver Assistance Systems (ADAS), for example, where it is commonly used to detect pedestrians, vehicles, traffic signs, etc.

The offline classifier training process takes sets of selected images containing objects of interest, extracts features from them, and maps these features to the corresponding labelled classes to generate a classification model. In an online process, real-time inputs are then categorized using the pre-trained classification model, which finally decides whether the object is present or not.

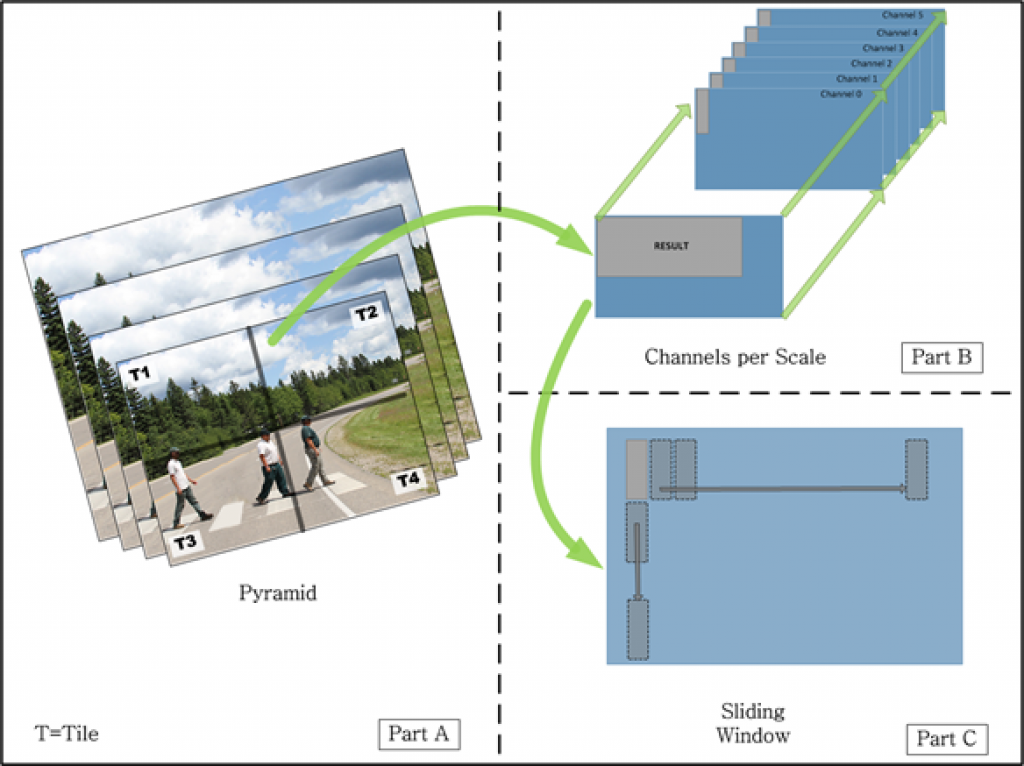

Many techniques are used for feature extraction, such as the Histogram of Oriented Gradients (HOG), Local Binary Patterns (LBP) and features computed from an integral image. The classifier operates on the extracted feature image using a sliding-window, raster-scanning or dense-scanning approach. Image pyramids with multiple scales are used (as shown in part A of Figure 1) to detect objects of different sizes.
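The pyramid-plus-sliding-window scheme can be sketched in C as follows. This is a minimal illustration under assumed parameters. The scale step, window size and stride are hypothetical, as are the names `PyramidScale`, `build_pyramid` and `windows_at_scale`; none of them come from the article. The sketch builds successively downscaled image sizes until the detection window no longer fits. It then counts the window positions that a raster scan visits at one scale.

```c
#include <assert.h>

/* Size of the image at one pyramid scale (hypothetical helper type). */
typedef struct {
    int width, height;   /* image size at this scale */
    double scale;        /* downscale factor relative to the input image */
} PyramidScale;

/* Fill `scales` with image sizes shrinking by `step` per level, stopping
 * when a win_w x win_h window no longer fits. Returns the scale count. */
int build_pyramid(int w, int h, double step, int win_w, int win_h,
                  PyramidScale *scales, int max_scales)
{
    assert(step > 1.0);
    int n = 0;
    double s = 1.0;
    while (n < max_scales) {
        int sw = (int)(w / s);
        int sh = (int)(h / s);
        if (sw < win_w || sh < win_h)
            break;
        scales[n].width = sw;
        scales[n].height = sh;
        scales[n].scale = s;
        n++;
        s *= step;
    }
    return n;
}

/* Number of window positions a raster scan with the given stride visits. */
long windows_at_scale(const PyramidScale *p, int win_w, int win_h, int stride)
{
    long nx = (p->width - win_w) / stride + 1;
    long ny = (p->height - win_h) / stride + 1;
    return nx * ny;
}
```

Take a 640x480 input, a 64x128 window and a step of 1.25. With these values, `build_pyramid` yields six scales, and the full-resolution scale alone has 3285 window positions at a stride of 8. A smaller step (closer to 1) produces a denser pyramid with many more scales, which multiplies the number of windows to classify.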

Computational complexity of classification for real-time applications

Dense pyramid image structures with 30-40 scales are also used to obtain high detection accuracy, where each pyramid scale can have a single level or multiple levels of features, depending on the feature extraction method. Classifiers such as AdaBoost (adaptive boosting) fetch data from effectively random locations within a pyramid image scale. Where higher accuracy is required, double- or single-precision floating-point arithmetic is used, which makes the operations computationally intensive. A classifier also contains a significant amount of control code (comparisons and branches) at various levels. Together, these factors make the classifier a difficult module to design efficiently for real-time performance in critical embedded systems, such as those used in ADAS applications.
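The following minimal C sketch makes the random data fetching and control code concrete. It evaluates a boosted classifier, built from decision stumps, on one window. The `Stump` layout and the plus/minus alpha voting rule are assumptions for illustration. They are not the model format of any particular library or of the authors' implementation. Each stump reads a feature at its own trained offset, so consecutive reads land at scattered addresses, and each read ends in a data-dependent comparison.

```c
#include <assert.h>
#include <stddef.h>

/* One weak classifier (decision stump); hypothetical layout. */
typedef struct {
    int offset;       /* feature index relative to the window origin,
                         precomputed as row * row_stride + col */
    float threshold;  /* learned offline */
    float alpha;      /* weight of this weak classifier */
} Stump;

/* Returns 1 if the window whose origin is `feat` is classified as the
 * object. The offsets are scattered across the window, so the loads are
 * effectively random accesses into the feature image. */
int adaboost_classify(const float *feat, const Stump *stumps, size_t n,
                      float decision_threshold)
{
    assert(feat != NULL && stumps != NULL);
    float score = 0.0f;
    for (size_t k = 0; k < n; k++) {
        /* data-dependent comparison per weak classifier */
        if (feat[stumps[k].offset] > stumps[k].threshold)
            score += stumps[k].alpha;
        else
            score -= stumps[k].alpha;
    }
    return score >= decision_threshold;
}
```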

Consider a typical classification technique such as AdaBoost, which does not use all of the features extracted from a sliding window. This makes it computationally less expensive than a classifier such as an SVM, which uses all the features extracted from each sliding window in a pyramid image scale. In most feature extraction techniques, such as HOG, gradient images and LBP, the features are of fixed length. In the case of HOG, the features contain multiple orientation-bin levels. Each pyramid image scale can therefore have multiple levels of orientation bins, and these levels can be computed in any order, as shown in parts B and C of Figure 1.
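A small C sketch can illustrate why the orientation-bin levels are independent: it stores each bin as its own plane. The sketch assumes the per-pixel orientation bin index and gradient magnitude have already been computed. It uses hard bin assignment with no interpolation or block normalization, so it is a simplification of full HOG, and the function names are hypothetical. Each plane depends only on the shared inputs, so the planes can be produced in any order. The loop below deliberately runs in reverse to show this.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Compute one orientation-bin level: the gradient magnitude where the
 * pixel's (precomputed) bin equals b, zero elsewhere. */
void compute_bin_plane(const uint8_t *bin_idx, const uint16_t *mag,
                       size_t n_pixels, uint8_t b, uint16_t *plane)
{
    for (size_t i = 0; i < n_pixels; i++)
        plane[i] = (bin_idx[i] == b) ? mag[i] : 0;
}

/* Build all n_bins planes, stored one after another. No plane reads
 * another plane, so the order over b is arbitrary. */
void compute_all_planes(const uint8_t *bin_idx, const uint16_t *mag,
                        size_t n_pixels, int n_bins, uint16_t *planes)
{
    assert(n_bins > 0 && n_bins <= 256);
    for (int b = n_bins - 1; b >= 0; b--)
        compute_bin_plane(bin_idx, mag, n_pixels, (uint8_t)b,
                          planes + (size_t)b * n_pixels);
}
```

Because the levels are independent, they could also be distributed across processing units or computed only when a later stage needs them.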

Figure 1: Pyramid image scales with multiple orientation-bin levels

Design constraints for porting a classifier to an ADAS SoC

Object classification is generally categorized as a high-level vision processing use case, since it operates on features generated by low- and mid-level vision processing. By nature it requires more control code, because it involves comparisons at various levels. Also, as mentioned earlier, it requires double- or single-precision floating-point arithmetic. These characteristics make classification a problem better suited to a DSP than to a vector processor, whose strength is parallel data processing through SIMD operations.

Typical ADAS processors, such as Texas Instruments' TDA2x/TDA3x SoCs, incorporate multiple engines/processors targeted at high-, mid- and low-level vision processing. The TMS320C66x DSP in the TDA2x SoC supports both fixed- and floating-point operations. It has an 8-way VLIW architecture that can issue up to eight new operations every clock cycle, SIMD operations for fixed-point data, and fully pipelined instructions. It supports up to 32 8-bit or 16-bit multiplies per cycle, and up to eight 32-bit multiplies per cycle. The EVE processor in the TDA2x has a 512-bit vector coprocessor (VCOP) with built-in mechanisms and vision-specialized instructions for concurrent, low-overhead processing. There are three parallel flat memory interfaces, each with 256-bit load/store bandwidth, giving a combined memory bandwidth of 768 bits. To achieve maximum throughput from processors such as these, the major design consideration is efficient management of load/store bandwidth, internal memory, the software pipeline and integer precision.

The classifier framework can be redesigned to suit vector processing, so that each instruction processes more data and more of the work runs as SIMD operations.
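Here is one way such a redesign might look, sketched in C under stated assumptions. Instead of running every weak classifier on one window at a time, each decision stump is applied to a whole row of adjacent windows. This assumes neighbouring windows are one feature apart in the feature image, and it uses a hypothetical `Stump` layout. For a fixed stump, the inner loop then reads contiguous memory and uses a select rather than a branch. That is the form a vectorizing compiler can map onto SIMD instructions, although whether it actually does so depends on the compiler and target.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical weak-classifier layout. */
typedef struct {
    int offset;       /* feature index relative to the window origin */
    float threshold;
    float alpha;
} Stump;

/* Score n_win horizontally adjacent windows; window x starts at feat + x.
 * `feat` must hold at least max(offset) + n_win elements. */
void adaboost_score_row(const float *restrict feat, size_t n_win,
                        const Stump *stumps, size_t n_stumps,
                        float *restrict score)
{
    assert(feat != NULL && score != NULL);
    for (size_t x = 0; x < n_win; x++)
        score[x] = 0.0f;
    for (size_t k = 0; k < n_stumps; k++) {
        const float *f = feat + stumps[k].offset;
        const float t = stumps[k].threshold;
        const float a = stumps[k].alpha;
        /* contiguous, branch-free inner loop: a candidate for SIMD */
        for (size_t x = 0; x < n_win; x++)
            score[x] += (f[x] > t) ? a : -a;
    }
}
```

Each window's final score is then compared with the decision threshold. Only the loop order has changed, not the classifier itself.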

Addressing major design considerations in porting a classifier to an ADAS SoC

Load/store bandwidth management

Each pyramid scale can be rearranged to fit within the limited internal memory. Functional modules and image regions can be selected and arranged so that DDR load/store bandwidth stays within the required limit.
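The C sketch below gives a rough illustration of the bandwidth trade-off. It splits one pyramid scale into horizontal strips that fit an internal-memory budget, then estimates the resulting DDR read traffic. The strip scheme, the overlap rule and the names `StripPlan` and `plan_strips` are assumptions for illustration. Consecutive strips overlap by `win_h - 1` rows so that every detection window lies entirely inside one strip. Those overlap rows are fetched twice.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    int rows_per_strip;  /* rows held in internal memory per strip */
    int n_strips;
    size_t ddr_bytes;    /* bytes read from DDR for this scale */
} StripPlan;

StripPlan plan_strips(int width, int height, int bytes_per_px, int win_h,
                      size_t mem_budget)
{
    assert(width > 0 && bytes_per_px > 0 && win_h > 0);
    size_t row_bytes = (size_t)width * (size_t)bytes_per_px;
    int rows = (int)(mem_budget / row_bytes);
    if (rows > height)
        rows = height;
    assert(rows >= win_h);  /* the budget must hold at least one window height */

    int overlap = win_h - 1;
    int advance = rows - overlap;  /* new rows brought in by each strip */
    /* strips start at 0, advance, 2*advance, ...; the last one is clamped
     * to the image bottom */
    int n = 1 + (height - rows + advance - 1) / advance;

    StripPlan p;
    p.rows_per_strip = rows;
    p.n_strips = n;
    /* every strip boundary re-reads `overlap` rows */
    p.ddr_bytes = ((size_t)height + (size_t)(n - 1) * (size_t)overlap) * row_bytes;
    return p;
}
```

Consider a 640x480 scale at one byte per pixel, with a 128-row window and a 128000-byte budget. This gives five 200-row strips and 988 rows (632320 bytes) of DDR reads, roughly twice the size of the scale itself. A larger budget or a shorter window reduces the duplicated rows. This is the kind of trade-off involved in arranging regions to limit DDR bandwidth.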

Efficient utilization of limited internal memory and cache

Each image should be processed at an optimal size, and its memory requirements should fit into the hardware buffers, so that memory and computation resources are used efficiently.
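A common way to keep working data in fast internal buffers is ping-pong (double) buffering: while one buffer is being processed, the next block is fetched into the other. The article does not prescribe this scheme. The C sketch below is an illustrative assumption, in which `memcpy` stands in for a DMA transfer from DDR and `process_block` is a placeholder kernel. On real hardware the fetch would be asynchronous and would overlap the processing. Here the calls run sequentially so that the sketch stays portable.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { BLOCK_BYTES = 4096 };  /* hypothetical internal buffer size */

/* Placeholder per-block kernel: byte sum. */
static uint32_t process_block(const uint8_t *buf, size_t n)
{
    uint32_t sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += buf[i];
    return sum;
}

uint32_t process_image_pingpong(const uint8_t *ddr, size_t total)
{
    static uint8_t internal[2][BLOCK_BYTES];  /* two on-chip buffers */
    assert(ddr != NULL || total == 0);
    size_t n_blocks = (total + BLOCK_BYTES - 1) / BLOCK_BYTES;
    if (n_blocks == 0)
        return 0;

    /* prime: fetch the first block into buffer 0 */
    memcpy(internal[0], ddr, total < BLOCK_BYTES ? total : BLOCK_BYTES);

    uint32_t acc = 0;
    for (size_t b = 0; b < n_blocks; b++) {
        int cur = (int)(b & 1);
        size_t cur_len = (b + 1 < n_blocks) ? BLOCK_BYTES
                                            : total - b * BLOCK_BYTES;
        if (b + 1 < n_blocks) {
            /* stand-in for starting the DMA of the next block into the
             * other buffer */
            size_t off = (b + 1) * BLOCK_BYTES;
            size_t next_len = total - off < BLOCK_BYTES ? total - off
                                                        : BLOCK_BYTES;
            memcpy(internal[cur ^ 1], ddr + off, next_len);
        }
        acc += process_block(internal[cur], cur_len);
    }
    return acc;
}
```

The block size is the tuning knob. It should be as large as the internal memory allows for two buffers, so that per-transfer overhead stays small.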

Software pipeline design for achieving maximum throughput

The following techniques can be used to achieve maximum throughput from software pipelining:

- The loop structure and its nesting levels should fit within the hardware loop buffer's constraints. For example, the C66x DSP in the TDA3x has restrictions on its SPLOOP buffer, which influence the achievable initiation interval.

- Unaligned memory loads/stores should be avoided, as in most cases they take twice as many cycles as aligned loads/stores.

- Data can be arranged, and compiler directives and options set, to reach peak SIMD load, store and compute utilization.

- Double-precision operations can be converted to single-precision floating-point or fixed-point representations, but the offline classifier should be retrained after such precision changes.

- The innermost loop can be simplified, with minimal control code, to avoid register pressure and register spilling.

- Division can be avoided by multiplying instead, either by a reciprocal taken from a lookup table or by a precomputed inverse.
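Aligned access starts with aligned data. The C sketch below pads each image row so that every row begins on an alignment boundary, then allocates the buffer with C11 `aligned_alloc`. The helper names and the 64-byte alignment used in the test values are assumptions. The alignment required by a given DSP's load/store instructions should be taken from its documentation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Row stride in bytes, rounded up so each row starts on an `align`-byte
 * boundary (align must be a power of two). */
size_t padded_stride(size_t width, size_t elem_size, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (width * elem_size + align - 1) & ~(align - 1);
}

/* Allocate a height x stride image whose first row is align-aligned.
 * The total size is a multiple of align, as C11 aligned_alloc expects. */
void *alloc_image(size_t height, size_t stride, size_t align)
{
    assert(stride % align == 0);
    return aligned_alloc(align, height * stride);
}
```

For example, a row of 100 16-bit pixels (200 bytes) gets a 256-byte stride at 64-byte alignment. The padding costs some memory but lets every row be read with aligned loads.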
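Converting a floating-point model to fixed point can be sketched in C with a quantization helper. The details of the helper are illustrative assumptions: the Q format (a signed 16-bit value with `frac_bits` fractional bits), the round-half-away-from-zero rule and the saturation, as well as the hypothetical name `to_fixed_q`.

```c
#include <assert.h>
#include <stdint.h>

/* Quantize v to a signed 16-bit fixed-point value with frac_bits
 * fractional bits, rounding half away from zero and saturating. */
int16_t to_fixed_q(float v, int frac_bits)
{
    assert(frac_bits >= 0 && frac_bits <= 15);
    float scaled = v * (float)(1 << frac_bits);
    scaled += (scaled >= 0.0f) ? 0.5f : -0.5f;
    if (scaled >= 32767.0f)
        return INT16_MAX;
    if (scaled <= -32768.0f)
        return INT16_MIN;
    return (int16_t)scaled;  /* float-to-int conversion truncates toward zero */
}
```

With 8 fractional bits, a feature value of 0.501 and a stump threshold of 0.5 both quantize to 128, so a comparison that separated them in floating point no longer does. Small shifts like this change individual weak-classifier decisions, which is why the offline classifier should be retrained after the precision change.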
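Both division-avoidance options can be sketched in C:

- For small integer divisors, a table of rounded Q16 reciprocals turns `x / d` into a multiply and a shift.
- For a loop-invariant floating-point divisor, the reciprocal is computed once and each element is multiplied by it.

The table range, the Q16 format and the function names are assumptions for illustration. Both forms can differ slightly from true division: the table version by up to 1 in the rounded result, and the float version in the low-order bits. Accuracy should therefore be checked afterwards.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RECIP_MAX 256  /* largest divisor covered; an illustrative choice */

static uint32_t recip_q16[RECIP_MAX + 1];  /* recip_q16[d] ~= 65536 / d */

void init_recip_table(void)
{
    recip_q16[0] = 0;  /* unused: division by zero stays undefined */
    for (uint32_t d = 1; d <= RECIP_MAX; d++)
        recip_q16[d] = (65536u + d / 2) / d;  /* rounded Q16 reciprocal */
}

/* x / d for 16-bit x and 1 <= d <= RECIP_MAX, via multiply and shift,
 * rounded to nearest; may differ from exact rounding by 1. */
static inline uint32_t div_by_table(uint16_t x, uint32_t d)
{
    assert(d >= 1 && d <= RECIP_MAX);
    return (uint32_t)(((uint64_t)x * recip_q16[d] + 32768u) >> 16);
}

/* Loop-invariant float divisor: one division, then multiplications. */
void scale_by_inverse(float *v, size_t n, float norm)
{
    const float inv = 1.0f / norm;
    for (size_t i = 0; i < n; i++)
        v[i] *= inv;
}
```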

Conclusions

Classification for real-time embedded vision applications is a difficult computational problem because of its requirements for dense data processing, floating-point precision, multilevel control code and data fetching. These complexities can significantly limit how well a classifier uses a platform's vector processing capabilities. However, the classifier framework can be redesigned for the target platform to exploit its vector processing capability more efficiently. Techniques for this include load/store bandwidth management, internal memory and cache management, and software pipeline design.


Sudheesh TV

Technical Lead, PathPartner Technology

Anshuman S Gauriar

Technical Lead, PathPartner Technology