This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

Humans know more about deep space than we know about Earth’s deepest oceans. But scientists have plans to change that—with the help of AI.

“We have better maps of Mars than we do of our own exclusive economic zone,” said Nick Rotker, chief BlueTech strategist at MITRE, a US government-sponsored nonprofit research organization. “Around 70% of the Earth is covered in water and we’ve explored virtually none of it.”

Speaking at GTC to a packed ballroom, which included Robert Ballard, the famed oceanographer and Titanic discoverer, Rotker and two colleagues outlined a plan for developing detailed maps of the Earth’s oceans, sketching out a collaborative strategy involving the scientific, academic, and enterprise communities.

For more information, see Exploring Earth’s Oceans: Using Digital Twins to Drive Digital Ocean Collaboration on NVIDIA On-Demand.

Rotker explained how MITRE’s efforts rest, at least in part, on pursuing a three-computer strategy to create high-fidelity digital twins of the world’s oceans.

The first computer is powered by the NVIDIA DGX platform. It uses vast amounts of atmospheric and ocean-related data to train models, including data about salinity levels, current patterns, ice formation, and ocean-floor topography. That data comes from myriad multimodal sources, including LiDAR, the SOFAR network of spotter buoys, satellites, and various atmospheric inputs.

The second computer is actually a fleet of autonomous robots designed to explore the ocean. The robots are integrated with the third computer, a collection of Virginia-based computers running NVIDIA Omniverse.

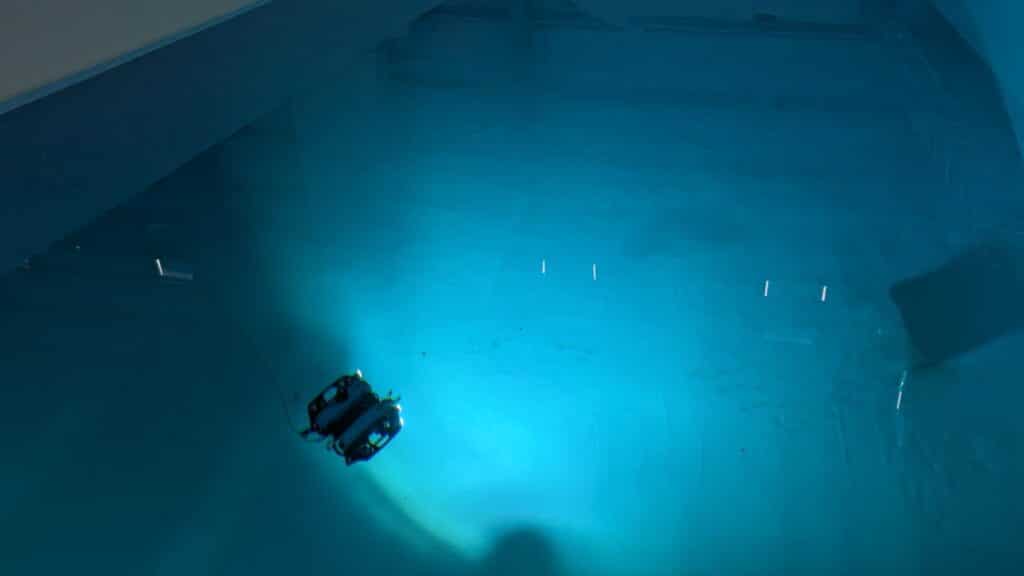

These three computers work together to train Vespa-sized, unmanned, underwater robots designed for autonomous observation.

MITRE’s Unmanned Underwater Autonomous Vehicle Digital Twin

Rotker’s team is training these robots in the MITRE BlueTech Lab in Bedford, Massachusetts, where, tethered to communication lines, the autonomous robots send the information they gather back to the computers in Virginia.

Those computers, which are running NVIDIA Omniverse, create a digital twin of what the robot observes in real time. The twin is then used to help improve the robot’s underwater autonomy. The autonomous robots are packed with sensors and run edge compute on NVIDIA Jetson AGX Xavier cards.

When the robots are sufficiently trained to autonomously navigate zero-shot underwater situations, Rotker and his team will release them into the ocean, where they will gather more data that can provide new insights about the ocean.

Initial use cases for the new ocean-related data include improving humans’ understanding of fishery dynamics, modeling extreme weather events and their potential impact on oceans and nearby communities more accurately, and identifying threats to coral reef health.

Figure 1. Training autonomous underwater vehicles in the MITRE BlueTech Lab

Long-term, MITRE plans to create integrated, autonomous platforms that can unlock an even deeper understanding of the world’s oceans.

“There are almost innumerable variables connected with the ocean which are driven by physics,” said Connor Lewellyn, MITRE’s chief environmental engineer. “We’re using new compute to better train weather models to drive a fuller predictive ocean model.”

MITRE plans to collaborate with SOFAR and others to scale ocean exploration and advance a universal standard—a kind of USB port for underwater data—that researchers, academics, and businesses can plug into to better understand oceans.

Elias Wolfberg

Executive Communications team, NVIDIA