This blog post was originally published at Vision Systems Design's website. It is reprinted here with the permission of PennWell.

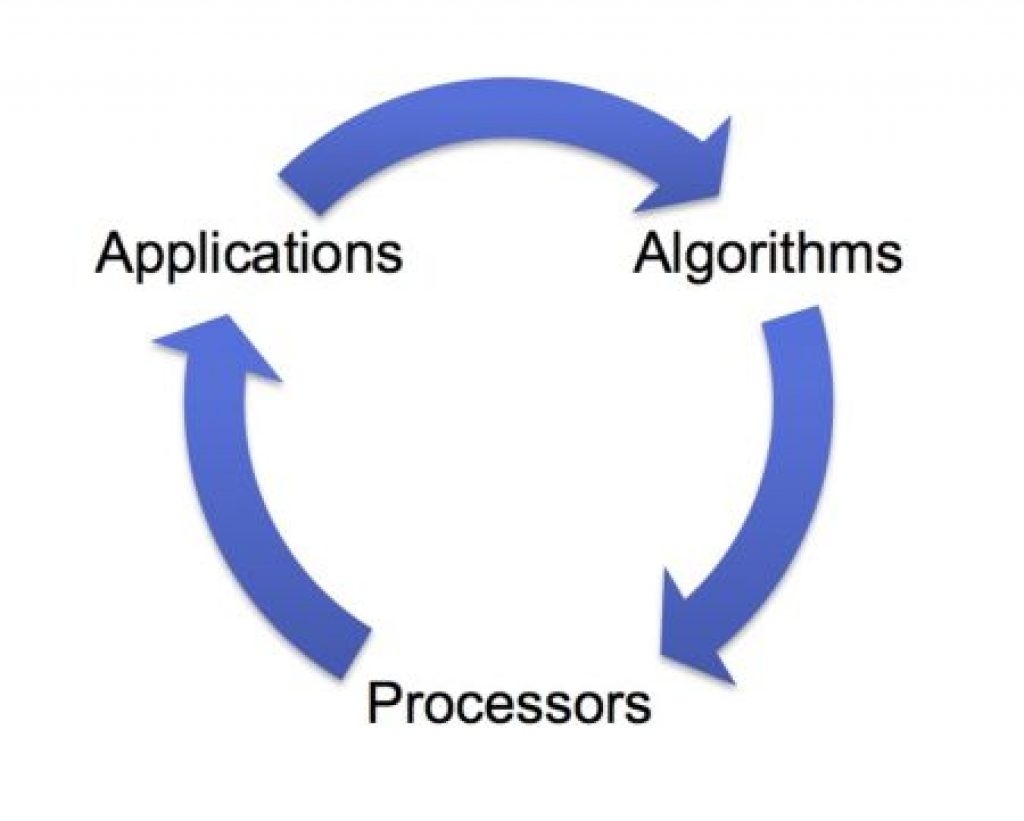

In his plenary session "Computer Vision 2.0: Where We Are and Where We're Going" at last May's Embedded Vision Summit, Embedded Vision Alliance founder Jeff Bier discussed the "virtuous circle of innovation," which he believes is already well underway for computer vision (Figure 1).

Figure 1. Computer vision benefits from a "virtuous circle" positive feedback loop of investments both in the underlying technology and applications based on it.

Key to understanding how computer vision will evolve, according to Bier, is the reality that it's an enabling technology, not an end in itself. As he's seen with other technologies such as speech recognition, it will eventually become ubiquitous and, in the process, "invisible." And as with other technologies that have already become success stories, each improvement in computer vision technology enables new applications. Some of these applications succeed, and that success encourages further industry investment in algorithms, processors, sensors and the like, which in turn further improves the underlying technology.

Qualcomm's Raj Talluri, Senior Vice President of Product Management, echoed Bier's line of reasoning in his May 2016 Embedded Vision Summit talk, "Is Vision the New Wireless?". Talluri cited another technology, digital wireless communications (e.g., Bluetooth, cellular data and Wi-Fi), which, like Bier's speech recognition example, has over the past 20 years become ubiquitous and "invisible": an essential technology for many industries and a primary driver of the electronics industry.

Today, according to Talluri, computer vision is showing signs of following a similar trajectory. Once used only in low-volume applications such as manufacturing inspection, vision is now becoming an essential technology for a wide range of mass-market devices, from cars to drones to mobile phones. In his presentation, Talluri examined the motivations for incorporating vision into diverse products, presented case studies that illuminate the current state of vision technology in high-volume products, and explored critical challenges to the ubiquitous deployment of visual intelligence.

One emerging vision market that Talluri touched on in his talk, and which is definitely on his company's radar screen thanks to initiatives such as Google's Cardboard and Daydream platforms for mobile devices, is virtual reality. VR is very much on AMD's radar screen, too, judging from the May 2016 Embedded Vision Summit presentation "How Computer Vision Is Accelerating the Future of Virtual Reality," delivered by Allen Rush, a Fellow at the company. According to Rush, VR is the new focus for a wide variety of applications, including entertainment, gaming, medicine, science and many others.

The technology driving the VR user experience has advanced rapidly in the past few years, and it is now poised to proliferate in solid products that offer a range of cost, performance and capabilities. How does computer vision intersect with this emerging modality? We are already seeing examples of the integration of computer vision and VR, such as simple eye tracking and gesture recognition. Rush's talk explored how more complex computer vision capabilities can be expected to become part of the VR landscape, along with the business and technical challenges that must be overcome to realize them.

For more information on computer vision opportunities in virtual reality systems, I encourage you to check out the recent article "Vision Processing Opportunities in Virtual Reality," co-authored by Embedded Vision Alliance member companies Infineon Technologies, Movidius (now part of Intel), ON Semiconductor and videantis. In it, the authors provide implementation details of vision-enabled VR functions such as environmental mapping, gesture interfaces and eye tracking.

I also encourage you to attend the next Embedded Vision Summit, taking place May 1-3, 2017 at the Santa Clara Convention Center in Santa Clara, California. Designed for product creators interested in incorporating visual intelligence into electronic systems and software, the Summit aims to inspire attendees' imaginations about potential applications for practical computer vision technology through exciting presentations and demonstrations, to offer practical know-how that helps attendees incorporate vision capabilities into their hardware and software products, and to provide opportunities for attendees to meet and talk with leading vision technology companies and learn about their offerings.

Planned presentations on embedded vision applications include an "Executive Perspectives" talk from Tim Ramsdale, General Manager of the Imaging and Vision Group at ARM. As with Bier's and Talluri's presentations at the 2016 Summit, Ramsdale's talk, "This Changes Everything: Why Computer Vision Will Be Everywhere," will provide big-picture perspectives on a number of vision trends and opportunities. Ramsdale's focus will span not only markets where embedded vision is already having an immediate impact, such as computational photography and security/surveillance, but also numerous other markets where computer vision can improve the functionality of many classes of devices, in the process creating completely new applications, products and services.

The 2017 Embedded Vision Summit will feature many more application-focused presentations. Scheduled talks include those from:

- Steven Cadavid, Founder and President of KinaTrax, who will describe the company's markerless motion capture system, which computes the kinematic data of pitches thrown in Major League Baseball games.

- James Crawford, Founder and CEO of Orbital Insight, a geospatial analytics company creating actionable data through proprietary deep learning analysis of satellite and UAV images to understand and characterize socioeconomic trends at scale.

- Aman Sikka, Vision System Architect at Pearl Automation, who will discuss the algorithms, system design challenges and tradeoffs involved in developing a wireless, solar-powered stereo vision product.

- Mark Jamtgaard, Director of Technology at RetailNext, who will explain how retailers are using the company's embedded vision systems to optimize store layout and staffing based on measured customer behavior at scale.

- Divya Jain, Technical Director at Tyco Innovation, who will provide a "deep dive" into the real-world problem of fire detection, describing what it takes to build a complete, scalable and reusable platform using deep learning.

Register now to secure your seat at the Summit. Additional information is available on the Alliance website.

I'll be back again soon with more discussion on a timely computer vision topic. Until then, as always, I welcome your comments.

Regards,