A version of this article was originally published at EE Times' Embedded.com Design Line. It is reprinted here with the permission of EE Times.

Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of systems, enabling those systems to analyze their environments via video and still image inputs. Wearable devices are forecast to be a significant influencer of semiconductor and software development, and a dominant driver of industry sales and profits, in the coming years. Adding vision-processing capabilities to wearable systems not only cost-effectively enhances the capabilities of existing product categories, it also fundamentally enables new categories.

By Brian Dipert

Editor-in-Chief, Embedded Vision Alliance

Ron Shalom

Marketing Manager, Multimedia Applications, CEVA

Tom Wilson

Vice President, Business Development, CogniVue

and Tim Droz

Vice President and General Manager, SoftKinetic North America

Once-hot technology markets such as computers and smartphones are beginning to cool off; analyst firm IDC, for example, forecast earlier this year that smartphone sales will increase only 19% this year, down from 39% in 2013. IDC also believes that beginning in 2018, annual smartphone sales increases will diminish to single-digit rates. Semiconductor, software and electronic systems suppliers are therefore searching for the next growth opportunities, and wearable devices are likely candidates.

Analyst firm Canalys, for example, recently reported that it forecast shipments of more than 17 million "smart band" wearables this year, when the product category will become a “key consumer technology,” and that shipments will expand to more than 23 million units by 2015 and over 45 million by 2017. In the near term, the bulk of wearable shipments will consist of activity trackers and other smart bands, point of view cameras, and "smart watches," but other wearable product types will also become more common, including "smart glasses" and "life recorder" devices.

These wearable products can at minimum be greatly enhanced, and in some cases are fundamentally enabled, by their ability to process incoming still and video image information. Vision processing is more than just capturing snapshots and video clips for subsequent playback and sharing; it involves automated analysis of the scene and its constituent elements, along with appropriate device responses based on the analysis results. Historically known as "computer vision," as its name implies it was traditionally the bailiwick of large, heavy, expensive and power-hungry PCs and servers.

Now, however, although "cloud"-based processing may be used in some cases, the combination of fast, high-quality, inexpensive and energy-efficient processors, image sensors and software are enabling robust vision processing to take place right on your wrist (or your face, or elsewhere on your person), at price points that enable adoption by the masses. And an industry alliance comprised of leading technology and service suppliers is a key factor in this burgeoning technology success story.

Form Factor Alternatives

The product category known as "wearables" comprises a number of specific product types in which vision processing is a compelling fit. Perhaps the best known one of these, by virtue of by Google’s advocacy, are the "smart glasses" exemplified by Google's Glass (Figure 1). The current Glass design contains a single camera capable of capturing 5 Mpixel images and 720p streams. Its base functionality encompasses conventional still and video photography. But it's capable of much more, as both Google's and third-party developers' initial applications are making clear.

Figure 1. Google Glass has singlehandedly created the smart glasses market (top), for which vision processing-enabled gestures offer a compelling alternative to clumsy button presses for user interface control purposes (bottom).

Consider that object recognition enables you to comparison-shop, displaying a list of prices offered by online and brick-and-mortar merchants for a product that you're currently looking at. Consider that this same object recognition capability, in "sensor fusion" combination with GPS, compass, barometer/altimeter, accelerometer, gyroscope, and other facilities, enables those same "smart glasses" to provide you with an augmented-reality set of information about your vacation sight-seeing scenes. And consider that facial recognition will someday provide comparably augmented data about the person standing in front of you, whose name you may or may not already recall.

Trendsetting current products suggest that these concepts will all become mainstream in the near future. Amazon's Fire Phone, for example, offers Firefly vision processing technology, which enables a user to "quickly identify printed web and email addresses, phone numbers, QR and bar codes, plus over 100 million items, including movies, TV episodes, songs, and products." OrCam's smart camera accessory for glasses operates similarly; intended for visually impaired, it recognizes text and products, and speaks to the user via a bone-conduction earpiece. And although real-time individual identification via facial analysis may not yet be feasible in a wearable device, a system developed by the Fraunhofer Institute already enables accurate discernment of the age, gender and emotional state of the person your Google Glass set is looking at.

While a single camera is capable of implementing such features, speed and accuracy can be improved when a depth-sensing sensor is employed. Smart glasses' dual-lens arrangement is a natural fit for a dual-camera stereoscopic depth discerning setup. Other 3D sensor technologies such as time-of-flight and structured light are also possibilities. And, versus a smartphone or tablet, glasses' thicker form factors are amenable to the inclusion of deeper-dimensioned, higher quality optics. 3D sensors are also beneficial in accurately discerning finely detailed gestures used to control the glasses' various functions, in addition to (or instead of) button presses, voice commands, and Tourette Syndrome-reminiscent head twitches.

Point of view (POV) cameras are another wearable product category that can benefit from vision processing-enabled capabilities (Figure 2). Currently, they're most commonly used to capture the wearer's experiences while doing challenging activities such as bicycling, motorcycling, snowboarding, surfing and the like. In such cases, a gesture-based interface to stop and stop recording may be preferable to button presses that are difficult-to-impossible with thick gloves and in other cases when fingers aren't available.

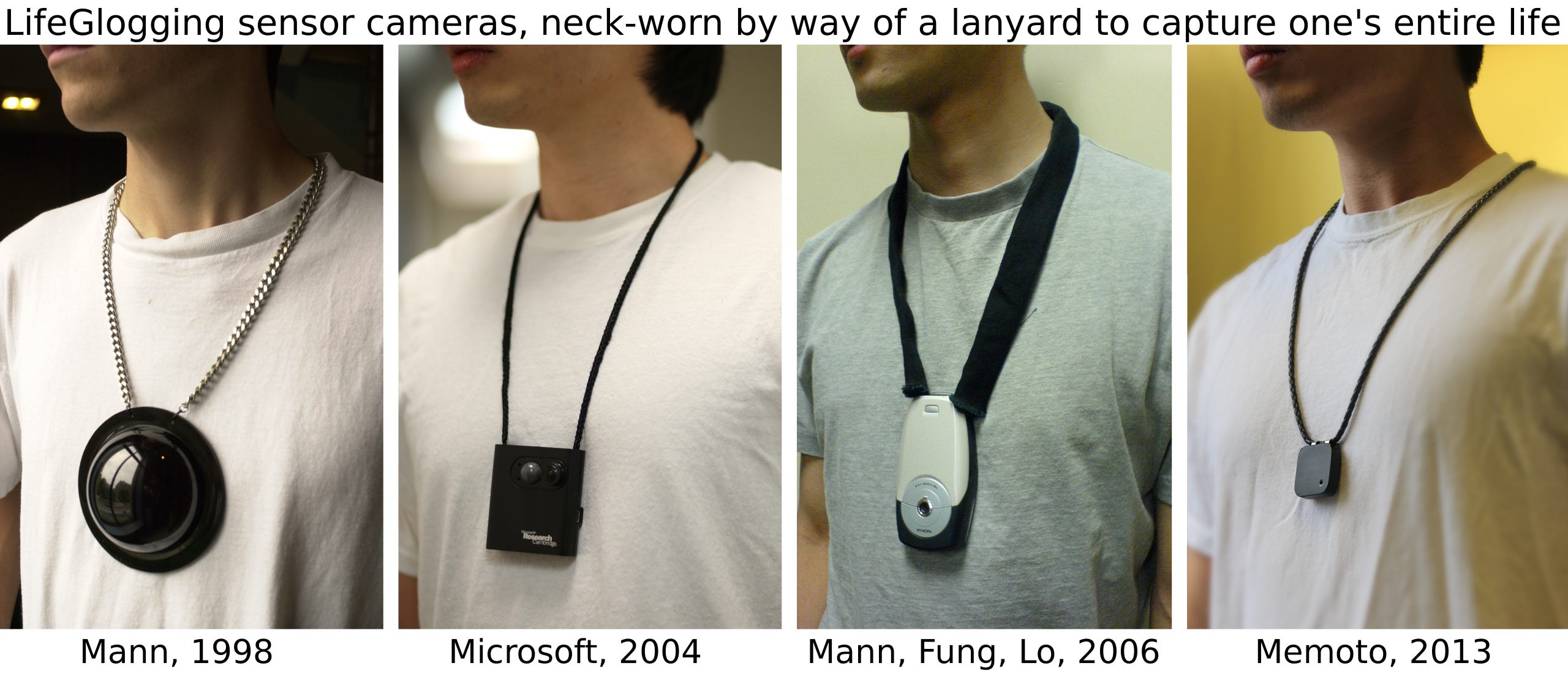

Figure 2. The point of view (POV) camera is increasingly "hot", as GoPro's recent initial public offering and subsequent stock-price doubling exemplify (top). With both it and the related (and more embryonic) life camera, which has experienced rapid product evolution (bottom), intelligent image post-processing to cull uninteresting portions of the content is a valuable capability.

POV cameras are also increasingly being used in situations where wearer control isn't an option, such as when they're strapped to pets and mounted on drones. And the constantly recording, so-called "life camera" is beginning to transition from a research oddity to an early-adopter trendsetter device. In all of these examples, computational photography intelligence can be brought to bear to only render in final form those images and video frame sequences whose content is of greatest interest to potential viewers, versus generating content containing a high percentage of boring or otherwise unappealing material (analogies to snooze-inducing slide shows many of us have been forced to endure by friends and family members are apt).

Vision Processing That's "Handy"

A wrist-strapped companion (or, perhaps, competitor) to the earlier mentioned smart glasses is the "smart watch", intended to deliver a basic level of functionality when used standalone, along with an enhanced set of capabilities in conjunction with a wirelessly tethered smartphone, tablet or other device (Figure 3). While a camera-inclusive smart watch could act as a still or video image capture device, its wrist-located viewpoint might prove to be inconvenient for all but occasional use.

Figure 3. The Android-based Moto 360 is a particularly popular example of a first-generation smart watch, a product category that will benefit from the inclusion of visual intelligence in future iterations.

However, gesture interface support in smart watches for flipping through multiple diminutive screens' worth of information is more obviously appealing, particularly given that the touch screen alternative is frequently hampered by sweat and other moisture sources, not to mention non-conductive gloves. Consider, too, that a periodically polling camera, driving facial detection software, could keep the smart watch's display disabled, thereby saving battery life, unless you're looking at the watch.

Finally, let's consider the other commonly mentioned wrist wearable, the activity tracker, also referred to as the fitness band or smart band when worn on the wrist (Figure 4). A recently announced, currently smartphone-based application gives an early glimpse into how vision may evolve in this product category. The app works in conjunction with Jawbone's Up fitness band to supplement the band's calorie-consuming measurements by tracking food (i.e. calorie) intake, in order to get a fuller picture of fitness, weight loss and other related trends.

Figure 4. Fitness bands (top) and other activity trackers (bottom) are increasingly popular wearable products for which vision processing is also a likely near-future feature fit.

However, Jawbone's software currently requires that the user truthfully (or not) and consistently (or not) enters the meal and snack information manually. What if, instead, object recognition algorithms were used to automatically identify items on the user's plate and, after also assessing their portion sizes, calorie counts? And what if, instead of running on a separate mobile device as is currently the case, they were to leverage a camera built directly into the activity tracker?

Function Implementations

The previously mentioned case studies implement various vision-processing functions. Let's look first at the ability to detect and recognize objects in the field of view of the wearable device. In addition to the already mentioned applications of the technology, this function may also be used, for example, to automatically tag images in real time while doing video recording in a POV or life camera. Such a feature can be quite useful in generating metadata associated with detected and recognized objects, to make the resulting video much more search-friendly. Object recognition can also be combined with gaze tracking in a smart glasses implementation so that only those objects specifically being looked at are detected and classified.

Object detection and recognition will also be a key component of augmented reality (AR) across a number of applications for wearables, ranging from gaming to social media, advertising and navigation. Natural feature recognition for AR applications uses a feature matching approach, recognizing objects by matching features in a query image to a source image. The result is a flexible ability to train applications on images and use natural feature recognition for AR, employing wirelessly delivered augmented information coming from social media, encyclopedia or other online sources, and displayed using graphic overlays.

Natural feature detection and tracking avoids the need to use more restrictive marker-based approaches, wherein pre-defined fiducial markers are required to trigger the AR experience, although it's is more challenging than marker-based AR from a processing standpoint. Trendsetting feature tracking applications can be built today using toolsets such as Catchoom's CraftAR, and as the approach becomes more pervasive, it will allow for real-time recognition of objects in users' surroundings, with an associated AR experience.

Adding depth sensing to the AR experience brings surfaces and rooms to life in retail and other markets. The IKEA Catalog AR application, for example, gives you the ability to place virtual furniture in your own home using a mobile electronics device containing a conventional camera. You start by scanning a piece of furniture in an IKEA catalog page, and then “use the catalog itself to judge the approximate scale of the furnishings – measuring the size of the catalog itself (laid on the floor) in the camera and creating an augmented reality image of the furnishings so it appears correctly in the room.” With the addition of a depth sensor in a tablet or cellphone, such as one of Google's prototype Project Tango devices, the need for the physical catalog as a measuring device is eliminated as the physical dimensions of the room are measured directly, and the furnishings in the catalog can be accurately placed to scale in the scene.

Not Just Hand Waving

Wearable devices can include various types of human machine interfaces (HMIs). These interfaces can be classified into two main categories – behavior analysis and intention analysis. Behavior analysis uses the vision-enabled wearable device for functions such as sign language translation and lip reading, along with behavior interpretation for various security and surveillance applications. Intention analysis for device control includes such vision-based functions as gesture recognition, gaze tracking, and emotion detection, along with voice commands. By means of intention analysis, a user can control the wearable device and transfer relevant information to it for various activities, such as games and AR applications.

Intention analysis use cases can also involve wake-up mechanisms for the wearable. For example, as briefly mentioned previously, a smart watch with a camera that is otherwise in sleep mode may keep a small amount of power allocated to the image sensor and a vision-processing core to enable a vision-based wake up system. The implementation might involve a simple gesture (like a hand wave) in combination with face detection (to confirm that the discerned object motion was human-sourced) to activate the device. Such vision processing needs to occur at ~1mA current draw levels in order to not adversely impact battery life.

Photographic Intelligence

Wearable devices will drive computational photography forward by enabling more advanced camera subsystems and in general presenting new opportunities for image capture and vision processing. As previously mentioned, for example, smart glasses' deeper form factor compared to smartphones allows for a thicker camera module, which enables the use of a higher quality optical zoom function along with (or instead of) pixel-interpolating digital zoom capabilities. The ~6" "baseline" distance between glasses' temples also inherently enables wider stereoscopic camera-to-camera spacing than is possible in a smartphone or tablet form factor, thereby allowing for accurate use over a wider depth range.

One important function needed for a wearable device is stabilization, for both still and video images. While the human body (especially the head) naturally provides some stabilization, wearable devices will still experience significant oscillation and will therefore require robust digital stabilization facilities. Furthermore, wearable devices will frequently be used outdoors and will therefore benefit from algorithms that compensate for environmental variables such as changing light and weather conditions.

These challenges to image quality will require strong image enhancement filters for noise removal, night-shot capabilities, dust handling and more. Image quality becomes even more important with applications such as image mosaic, which builds up a panoramic view by capturing multiple frames of a scene. Precise computational photography to even out frame-to-frame exposure and stabilization differences is critical to generating a high quality mosaic.

Depth-discerning sensors have already been mentioned as beneficial in object recognition and gesture interface applications. They're applicable to computational photography as well, in supporting capabilities such as high dynamic range (HDR) and super-resolution (an advanced implementation of pixel interpolation). Plus, they support plenoptic camera features that allow for post-image capture selective refocus on a portion of a scene, and other capabilities. All of these functions are compute-intensive, and wearable devices sizes are especially challenging in this regard, with respect to factors such as size, weight, cost, power consumption and heat dissipation.

Processing Locations and Allocations

One key advantage of using smart glasses devices for image capture and processing is ease of use – the user just records what he or she is looking at, hands-free. In combination with the previously mentioned ability to use higher quality cameras with smart glasses, vision processing in wearable devices makes a lot of sense. However, the batteries in today's wearable devices are much smaller than those in other mobile electronics devices – 570 mAh with Google Glass, for example vs ~2000 mAh for high-end smartphones.

Hence, it is currently difficult to do all of the necessary vision processing in a wearable device, due to power consumption limitations. Evolutions and revolutions in vision processors will make such a completely resident processing scenario increasingly likely in the future. Meanwhile, in the near term, a portion of the processing may instead be done on a locally tethered device such a smartphone or tablet, and/or at "cloud"-based servers. Note that the decision to do local vs. remote processing doesn't involve battery life exclusively – thermal issues are also at play. The heat generated by compute-intensive processing can produce real discomfort, as has been noted with existing smart glasses even during prolonged video recording sessions where no post-processing is occurring.

When doing video analysis, feature detection and extraction can today be performed directly on the wearable device, with the generated metadata transmitted to a locally tethered device for object matching either there or, via the local device, in the cloud. Similarly, when using the wearable device for video recording with associated image tagging, vision processing to generate the image tag metadata can currently be done on the wearable device, with post-processing then continuing on an external device for power savings.

For 3-D discernment, a depth map can be generated on the wearable device (at varying processing load requirements depending on the specific depth camera technology chosen), with the point cloud map then sent to an external device to be used for classification or (for AR) camera pose estimation. Regardless of whether post-processing occurs on a locally tethered device or in the cloud, some amount of pre-processing directly on the wearable device is still desirable in order to reduce data transfer bandwidth locally over Bluetooth or Wi-Fi (therefore saving battery life) or over a cellular wireless broadband connection to the Internet.

Even in cases like these, where vision processing is split between the wearable device and other devices, the computer vision algorithms running on the wearable device require significant computation. Feature detection and matching typically uses algorithms like SURF (Speeded Up Robust Features) or SIFT (the Scale-Invariant Feature Transform), which are notably challenging to execute in real time with conventional processor architectures.

While some feature matching algorithms such BRIEF (Binary Robust Independent Elementary Features) combined with a lightweight feature detector are providing lighter processing loads with reliable matching, a significant challenge still exists in delivering real-time performance at the required power consumption levels. Disparity mapping for stereo matching to produce a 3D depth map is also very compute-intensive, particularly when high quality results are needed. Therefore, the vision processing requirements of various wearable applications will continue to stimulate demand for optimized vision processor architectures.

Industry Assistance

The opportunity for vision technology to expand the capabilities of wearable devices is part of a much larger trend. From consumer electronics to automotive safety systems, vision technology is enabling a wide range of products that are more intelligent and responsive than before, and thus more valuable to users. The Embedded Vision Alliance uses the term “embedded vision” to refer to this growing use of practical computer vision technology in embedded systems, mobile devices, special-purpose PCs, and the cloud, with wearable devices being one showcase application.

Vision processing can add valuable capabilities to existing products, such as the vision-enhanced wearables discussed in this article. And it can provide significant new markets for hardware, software and semiconductor suppliers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower product creators to transform this potential into reality. CEVA, CogniVue and SoftKinetic, the co-authors of this article, are members of the Embedded Vision Alliance.

First and foremost, the Alliance's mission is to provide product creators with practical education, information, and insights to help them incorporate vision capabilities into new and existing products. To execute this mission, the Alliance maintains a website providing tutorial articles, videos, code downloads and a discussion forum staffed by technology experts. Registered website users can also receive the Alliance’s twice-monthly email newsletter, among other benefits.

The Embedded Vision Alliance also offers a free online training facility for vision-based product creators: the Embedded Vision Academy. This area of the Alliance website provides in-depth technical training and other resources to help product creators integrate visual intelligence into next-generation software and systems. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools such as OpenCV. Access is free to all through a simple registration process.

The Alliance also holds Embedded Vision Summit conferences in Silicon Valley. Embedded Vision Summits are technical educational forums for product creators interested in incorporating visual intelligence into electronic systems and software. They provide how-to presentations, inspiring keynote talks, demonstrations, and opportunities to interact with technical experts from Alliance member companies. These events are intended to:

- Inspire attendees' imaginations about the potential applications for practical computer vision technology through exciting presentations and demonstrations.

- Offer practical know-how for attendees to help them incorporate vision capabilities into their hardware and software products, and

- Provide opportunities for attendees to meet and talk with leading vision technology companies and learn about their offerings.

The most recent Embedded Vision Summit was held in May, 2014, and a comprehensive archive of keynote, technical tutorial and product demonstration videos, along with presentation slide sets, is available on the Alliance website. The next Embedded Vision Summit will take place on May 12, 2015 in Santa Clara, California; please reserve a spot on your calendar and plan to attend.

Brian Dipert is Editor-In-Chief of the Embedded Vision Alliance. He is also a Senior Analyst at BDTI (Berkeley Design Technology, Inc.), which provides analysis, advice, and engineering for embedded processing technology and applications, and Editor-In-Chief of InsideDSP, the company's online newsletter dedicated to digital signal processing technology. Brian has a B.S. degree in Electrical Engineering from Purdue University in West Lafayette, IN. His professional career began at Magnavox Electronics Systems in Fort Wayne, IN; Brian subsequently spent eight years at Intel Corporation in Folsom, CA. He then spent 14 years at EDN Magazine.

Ron Shalom is the Marketing Manager for Multimedia Applications at CEVA DSP. He holds an MBA from Tel Aviv University's Recanati Business School. Ron has over 15 years of experience in the embedded world; 9 years in software development and R&D management roles, and 6 years as a marketing manager. He has worked at CEVA for 10 years; 4 years as a team leader in software codecs, and 6 years as a product marketing manager.

Tom Wilson is Vice President of Business Development at CogniVue Corporation, with more than 20 years of experience in various applications such as consumer, automotive, and telecommunications. He has held leadership roles in engineering, sales and product management, and has a Bachelor’s of Science and PhD in Science from Carleton University, Ottawa, Canada.

Tim Droz is Senior Vice President and General Manager of SoftKinetic North America, delivering 3D time-of-flight (TOF) image sensors, 3D cameras, and gesture recognition and other depth-based software solutions. Prior to SoftKinetic, he was Vice President of Platform Engineering and head of the Entertainment Solutions Business Unit at Canesta, acquired by Microsoft. Tim earned a BSEE from the University of Virginia, and a M.S. degree in Electrical and Computer Engineering from North Carolina State University.