November 13, 2019 – Today we are very excited to share details of our collaboration with Microsoft, announcing the preview of Graphcore® Intelligence Processing Units (IPUs) on Microsoft Azure. This is the first time a major public cloud vendor is offering Graphcore IPUs, which are built from the ground up to support next-generation machine learning. It’s a landmark moment for Graphcore and a testament to the maturity of our patented IPU technology, in both our IPU hardware and our Poplar® software stack.

Microsoft and Graphcore have been collaborating closely for over two years. Over this period, the Microsoft team, led by Marc Tremblay, Distinguished Engineer, has been developing systems for Azure and enhancing advanced machine vision and Natural Language Processing (NLP) models on IPUs. The Graphcore IPU preview on Azure is now open for customer sign-up, with access prioritized for those focused on pushing the boundaries of NLP and developing new breakthroughs in machine intelligence. Information on how to sign up will be available at SC19 next week at Microsoft booth #663 or by contacting us here.

The IPU has been designed from the ground up by Graphcore to support new breakthroughs in machine intelligence. Together with our production-ready Poplar software stack, it gives developers a powerful, efficient, scalable, and high-performance solution that enables new innovations in AI. Customers can tackle their most difficult AI workloads by accelerating more complex models and developing entirely new techniques.

State-of-the-art performance on today’s models

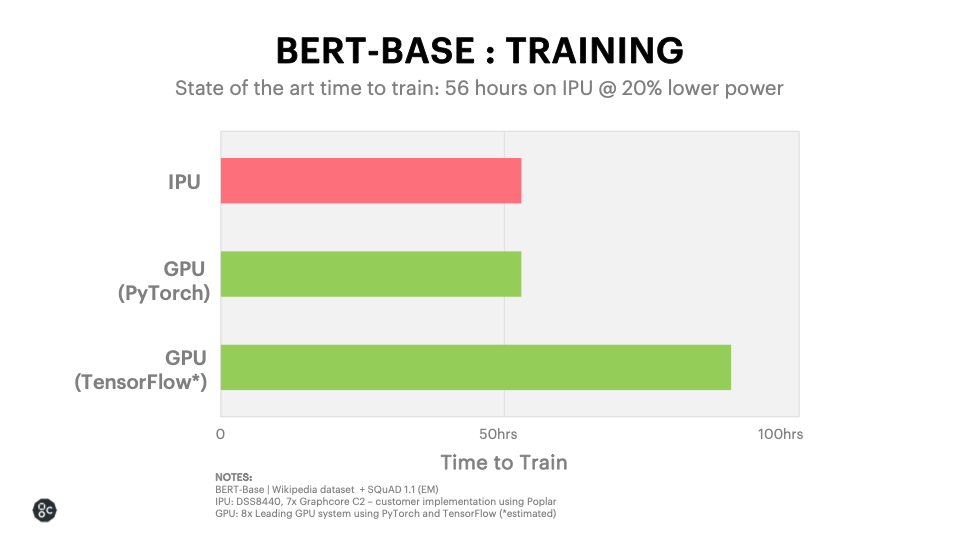

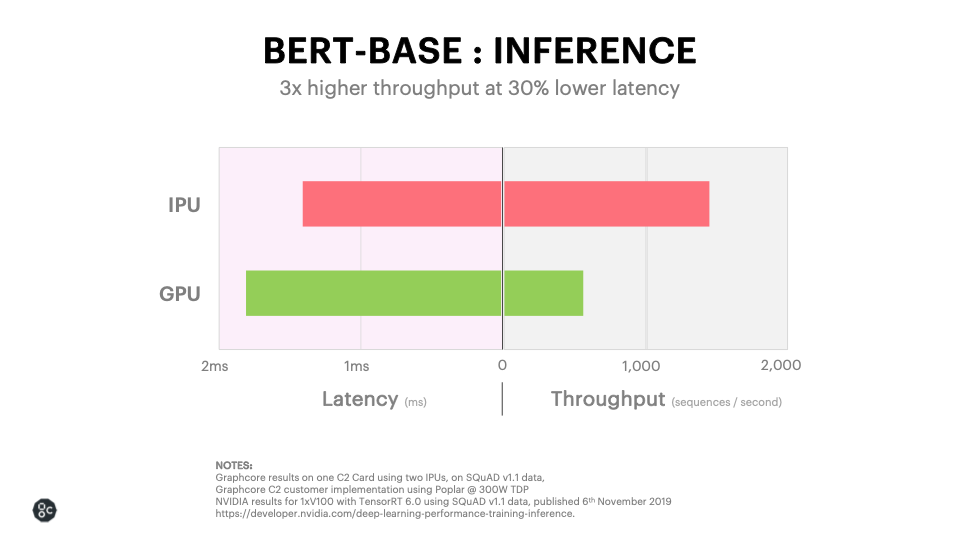

Microsoft and Graphcore developers have achieved state-of-the-art performance and accuracy with the BERT language model, training BERT Base in 56 hours with just one IPU Server system of eight C2 IPU-Processor PCIe cards. With BERT inference, our customers are seeing 3x higher throughput with over 20% improvement in latency, allowing results to be delivered faster than ever.

This level of performance in language understanding is essential for search engines to deliver more useful responses to queries, and for text and conversational AI applications, such as sentiment analysis and intelligent personal assistants, that need human-level comprehension. Natural language processing is a strategically important market segment for AI: the AI hardware market for NLP alone is expected to grow to $15bn by 2025 (Source: Tractica Q4 2018).

“Natural language processing models are hugely important to Microsoft – to run our internal AI workloads and for our AI customers on Microsoft Azure,” said Girish Bablani, corporate vice president, Azure Compute at Microsoft Corp. “We are extremely excited by the potential that this new collaboration on processors with Graphcore will deliver for our customers. The Graphcore offering extends Azure’s capabilities and our efforts here form part of our strategy to ensure that Azure remains the best cloud for AI.”

IPU enables new AI innovations

As well as delivering state-of-the-art performance for today’s complex AI models, like BERT, the IPU also excels at accelerating new techniques. The IPU will open up new areas of research, allowing companies to explore novel approaches and to build more efficient machine learning systems that can be trained with much less data.

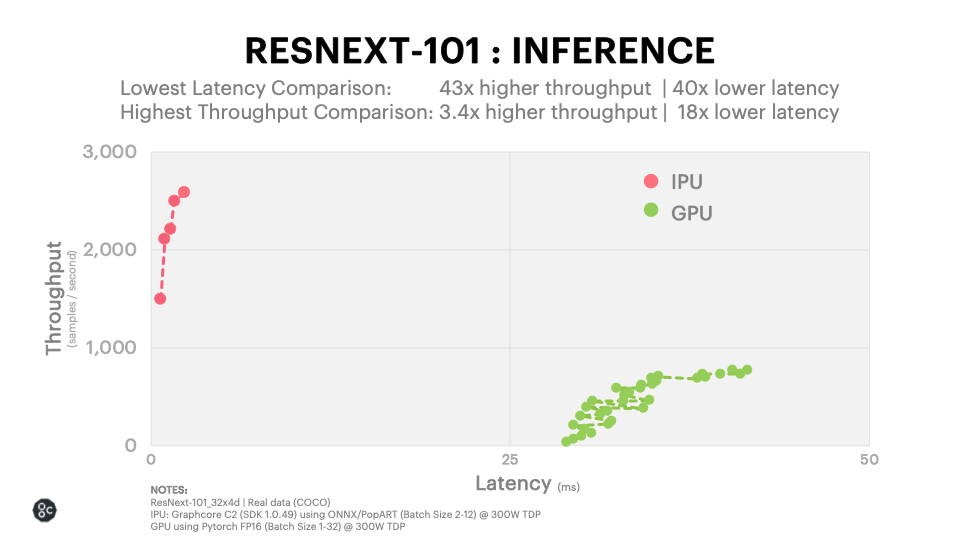

One of our IPU early access customers, European search engine Qwant, has seen high performance at much lower latency with IPUs running the next-generation image recognition model ResNeXt. As Qwant CEO Eric Leandri explains:

“Our research team at Qwant works at the cutting edge of AI to quickly deliver the best possible results on our users’ search queries while ensuring the results are neutral, impartial and accurate. It’s a tall order. We see millions of searches each day for images alone. One of the latest AI innovations that we are implementing is a new class of image recognition model called ResNeXt, to improve our accuracy and speed when delivering image search results. We have been working closely with Microsoft and Graphcore to use IPU processor technology in Azure and are seeing a significant improvement – with 3.5x higher performance – in our image search capability using ResNeXt on IPUs, out of the box. There is huge potential for innovation with Graphcore IPUs on new machine intelligence models and we are working on these approaches to refine our search results so that we can deliver exactly what our customers are looking for.”

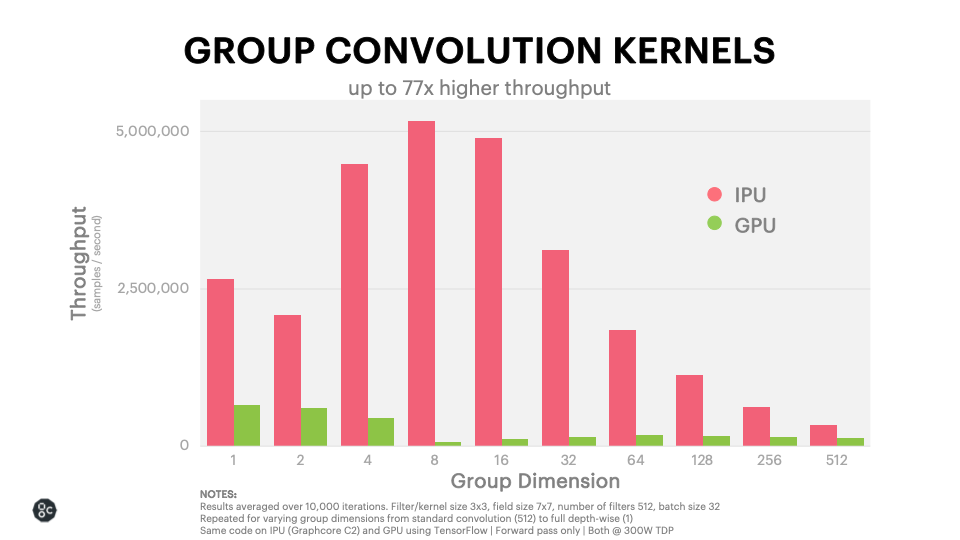

ResNeXt uses grouped convolutions and depthwise separable convolutions to achieve large efficiency improvements, measured as accuracy per parameter. This involves splitting the convolution blocks into smaller, separable blocks, which IPUs support efficiently.
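To make the parameter savings concrete, here is a minimal sketch that counts the weights in a standard, a grouped, and a depthwise separable convolution using the usual textbook formulas. The channel counts, kernel size, and group count are illustrative assumptions and are not taken from the benchmarks described here; the helper names are hypothetical.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution with the given groups (bias omitted)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    return (c_in // groups) * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = conv_params(c_in, c_in, k, groups=c_in)  # one filter per channel
    pointwise = conv_params(c_in, c_out, 1)              # mixes channels
    return depthwise + pointwise


# Illustrative sizes only (assumed, not from the article).
C_IN, C_OUT, K, GROUPS = 256, 256, 3, 32

standard = conv_params(C_IN, C_OUT, K)
grouped = conv_params(C_IN, C_OUT, K, groups=GROUPS)
separable = depthwise_separable_params(C_IN, C_OUT, K)

print(f"standard:  {standard} weights")
print(f"grouped:   {grouped} weights ({standard / grouped:.0f}x fewer)")
print(f"separable: {separable} weights ({standard / separable:.1f}x fewer)")
```

With these assumed sizes, the grouped layer needs a factor of `GROUPS` fewer weights than the standard layer, which is why such blocks break into many small, independent pieces of work.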

To provide greater insight into the hardware suitability for grouped and fully depthwise separable convolutions, our customer engineering team has prepared a set of microbenchmarks with typical convolution blocks. These show a clear IPU advantage across the board, with up to a 77x throughput advantage for grouped convolutions.

Early access customers – Finance

Daniele Scarpazza, R&D Team Lead, High Performance Computing at Citadel said: “A big focus for us at Citadel is leveraging cutting edge technology to optimize the investment process across a range of strategies and asset classes. We believe that constantly reevaluating and improving the technology we offer our investment teams helps us stay ahead of our competition. We are very excited to be working with Graphcore as one of its early access partners to test the possible applications of the new processor technology within our business. The Graphcore IPU has been designed from the ground up for machine learning and its novel architecture is already enabling us to explore new techniques that have been inefficient or simply not possible before.”

Early access customers – Robotics

“We believe new processor technology is going to play a key part in the evolution of Spatial AI and SLAM (Simultaneous Localization And Mapping) in the near future and have been excited to get early research access to Graphcore’s innovative new hardware. Fully general Spatial AI will require sparse probabilistic and geometric reasoning on graphs as well as computations with deep neural nets. By paying as much attention to communication as computation, Graphcore have created a new scalable architecture which we believe has the potential to execute all these algorithms with low latency and power efficiency,” said Professor Andrew Davison, Professor of Robot Vision, Department of Computing, Imperial College London.

Graphcore IPUs with Dell EMC DSS 8440 Server

We will also be demonstrating our first IPU technology with Dell Technologies, designed to address the needs of enterprise customers building out on-premises machine intelligence compute. On display in Dell Technologies’ booth at SC19 (#913), the Dell EMC DSS 8440 is a machine learning server with Graphcore technology. The system can deliver more than 1.6 PetaFlops of machine intelligence compute, using 8 Graphcore C2 IPU-Processor PCIe cards, each with 2 IPU processors, all connected with high-speed IPU-Link™ technology in a standard 4U chassis.
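As a quick back-of-the-envelope check of these figures, the sketch below derives the IPU count and the implied per-IPU compute. It uses only the numbers quoted above; the 1.6 PetaFlops figure is a stated lower bound, and the numeric precision behind it is not specified in the text, so treat the result as indicative only.

```python
# Figures quoted for the Dell EMC DSS 8440 configuration.
CARDS = 8                  # C2 IPU-Processor PCIe cards per server
IPUS_PER_CARD = 2          # IPU processors on each card
SYSTEM_TERAFLOPS = 1600    # "more than 1.6 PetaFlops", i.e. a lower bound

total_ipus = CARDS * IPUS_PER_CARD
teraflops_per_ipu = SYSTEM_TERAFLOPS / total_ipus

print(f"IPUs per server: {total_ipus}")
print(f"Implied compute per IPU: at least {teraflops_per_ipu:.0f} TeraFlops")
```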

What is an IPU?

The Intelligence Processing Unit (IPU) is completely different from today’s CPU and GPU processors. It is a highly flexible, easy-to-use, parallel processor that has been designed from the ground up to deliver state-of-the-art performance on today’s machine intelligence models for both training and inference. More importantly, the IPU has been designed to allow new and emerging machine intelligence workloads to be realized. The Graphcore IPU is unique in keeping the entire machine learning knowledge model inside the processor. With 16 IPU processors, all connected with IPU-Link technology in a server, an IPU system will have over 100,000 completely independent programs, all working in parallel on the machine intelligence knowledge model.

Full Software Stack & Framework Support

Over the last three years, hand in hand with designing the world’s most sophisticated silicon processor, we have also built the world’s very first graph tool chain specifically designed for machine intelligence: the Poplar software stack.

Poplar seamlessly integrates with TensorFlow and Open Neural Network Exchange (ONNX), allowing developers to use their existing machine intelligence development tools and existing machine learning models. Graphcore also delivers a full training runtime for ONNX and is working closely with the ONNX organisation to include this in the ONNX standard environment. Initial PyTorch support is available in Q4 2019, with full advanced feature support becoming available in early 2020.

We have been working extensively with a number of leading early access customers and partners for some time to ensure that the Poplar graph tool chain is ready for general release.

We are now extremely pleased to be making Graphcore technology commercially available to a wider group of customers. We look forward to helping innovators achieve the next great breakthroughs in machine intelligence on IPUs.

For more information, or to be contacted by a member of our sales team, please register your interest here.