| LETTER FROM THE EDITOR |

|

Dear Colleague,

Deep neural networks (DNNs) are proving very effective for a variety of challenging machine perception tasks, but these algorithms are very computationally demanding. To enable DNNs to be used in practical applications, it’s critical to find efficient ways to implement them. The Embedded Vision Alliance will delve into these topics in the free webinar, "Efficient Processing for Deep Learning: Challenges and Opportunities," on Thursday, September 28 at 10 am Pacific Time. This webinar explores how DNNs are being mapped onto today’s processor architectures, and how both DNN algorithms and specialized processors are evolving to enable improved efficiency. It is presented by Dr. Vivienne Sze, Associate Professor of Electrical Engineering and Computer Science at MIT (www.rle.mit.edu/eems). Sze will also offer suggestions on how to evaluate competing processor solutions in order to address your application and design requirements. To register, see the event page.

The Embedded Vision Summit is the preeminent conference on practical computer vision, covering applications from the edge to the cloud. It attracts a global audience of over one thousand product creators, entrepreneurs and business decision-makers who are creating and using computer vision technology. The Embedded Vision Summit has experienced exciting growth over the last few years, with 98% of 2017 Summit attendees reporting that they’d recommend the event to a colleague. The next Summit will take place May 22-24, 2018 in Santa Clara, California. The deadline to submit presentation proposals is November 10, 2017. For detailed proposal requirements and to submit proposals, please visit https://www.embedded-vision.com/summit/2018/call-proposals. For questions or more information, please email [email protected].

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance

|

| OPENCV IMPLEMENTATIONS |

|

Making OpenCV Code Run Fast

OpenCV is the de facto standard framework for computer vision developers, with a 16+ year history, approximately one million lines of code, thousands of algorithms and tens of thousands of unit tests. While OpenCV delivers decent performance out-of-the-box for some classical algorithms on desktop PCs, it lacks sufficient performance when using some modern algorithms, such as deep neural networks, and when running on embedded platforms. In this presentation, Vadim Pisarevsky, Software Engineering Manager at Intel, examines current and forthcoming approaches to performance optimization of OpenCV, including the existing OpenCL-based transparent API, newly added support for OpenVX, and early experimental results using Halide. He demonstrates the use of the OpenCL-based transparent API on a popular CV problem: pedestrian detection. Because OpenCL does not provide good performance-portability, he explores additional approaches. He discusses how OpenVX support in OpenCV accelerates image processing pipelines and deep neural network execution. He also presents early experimental results using Halide, which provides a higher level of abstraction and ease of use, and is being actively considered for future support in OpenCV.

OpenCV on Zynq: Accelerating 4k60 Dense Optical Flow and Stereo Vision

OpenCV libraries are widely used for algorithm prototyping by many leading technology companies and computer vision researchers. FPGAs can achieve unparalleled compute efficiency on complex algorithms like dense optical flow and stereo vision in only a few watts of power. However, unlocking these capabilities traditionally required hardware design expertise and use of languages like Verilog and VHDL. In this talk, Nick Ni, Senior Product Manager for SDSoC and Embedded Vision at Xilinx, presents a new approach that enables designers to unleash the power of FPGAs using hardware-tuned OpenCV libraries, a familiar C/C++ development environment, and readily available hardware development platforms.

|

| EMBEDDED VISION ENERGY EFFICIENCY |

|

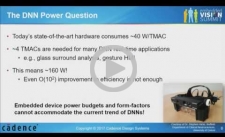

Techniques to Reduce Power Consumption in Embedded DNN Implementations

Deep learning is becoming the most widely used technique for computer vision and pattern recognition. This rapid adoption is primarily driven by the outstanding effectiveness deep learning has achieved on many fronts. However, the high computational requirements of deep learning algorithms typically drives power requirements to levels that are not reasonable for embedded applications. The biggest contributor to the high power consumption of deep neural network is the huge number of multiplications per pixel. In this talk, Samer Hijazi, Deep Learning Engineering Group Director at Cadence, presents a three-legged approach to solving this problem, by optimizing the network architecture, optimizing the problem definition, and minimizing word widths.

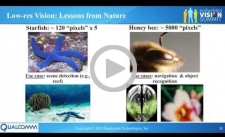

Always-on Vision Becomes a Reality

Intelligent devices equipped with human-like senses such as always-on touch, audio and motion detection have enabled a variety of new use cases and applications, transforming the way we interact with each other and our surroundings. While the vast majority (more than 80%) of human insight comes through the eyes, enabling always-on vision (defined as sub-1 mA current draw) for devices is challenging due to power-hungry hardware and the high complexity of inference algorithms. In this presentation, Evgeni Gousev, Senior Director at Qualcomm Research, describes the development of an always-on Computer Vision Module (CVM) combining innovations in system architecture, ultra-low power design and dedicated hardware for vision algorithms running at the "edge". With low end-to-end power consumption, a tiny form factor and low cost, the CVM can be integrated into a wide range of battery- and line-powered devices (IoT, mobile, VR/AR, automotive, etc.), performing object detection, feature recognition, change/motion detection, and other tasks. Its processor performs all computation within the module itself and outputs metadata.

|

| UPCOMING INDUSTRY EVENTS |

|

Synopsys ARC Processor Summit: September 26, 2017, Santa Clara, California

Embedded Vision Alliance Webinar – Efficient Processing for Deep Learning: Challenges and Opportunities: September 28, 2017, 10:00 am PT

Embedded Vision Summit: May 22-24, 2018, Santa Clara, California

More Events

|

| FEATURED COMMUNITY DISCUSSIONS |

|

Opportunity: Subject Matter Expert – Image Signal Processing Pipeline – Silicon Valley

More Community Discussions

|

| FEATURED NEWS |

|

Imagination Reveals PowerVR Neural Network Accelerator with 2x the Performance and Half the Bandwidth of Nearest Competitor

Videantis Receives Growth Financing from eCAPITAL to Accelerate Market Penetration in Embedded Vision and Autonomous Driving

HELLA Aglaia and NXP Reveal Open Vision Platform for Safe Autonomous Driving

Xilinx Software Defined Development Environment for Data Center Acceleration Now Available on Amazon Web Services

More News

|