| LETTER FROM THE EDITOR |

|

Dear Colleague,

The Embedded Vision Summit is the preeminent conference on practical computer vision, covering applications at the edge and in the cloud. It attracts a global audience of over one thousand product creators, entrepreneurs and business decision-makers who are creating and using computer vision technology. The Embedded Vision Summit has experienced exciting growth over the last few years, with 98% of 2017 Summit attendees reporting that they'd recommend the event to a colleague. The next Summit will take place May 22-24 in Santa Clara, California. Register for the Embedded Vision Summit while Early Bird discount rates are still available, using discount code NLEVI0227. Also note that entries for the premier Vision Product of the Year awards, to be presented at the Summit, are now being accepted.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance

|

| CAREER OPPORTUNITIES |

|

Positions Available for Computer Vision Engineers at DEKA Research

Inventor Dean Kamen founded DEKA to focus on innovations aimed to improve lives around the world. DEKA has deep roots in mobility: Dean invented the Segway out of his work on the iBot wheel chair. Now DEKA is adding autonomous navigation to its robotic mobility platform. The company is leveraging advances in computer vision to address the challenges of autonomous navigation in urban environments at pedestrian to bicycle speeds on streets, bike paths, and sidewalks. DEKA is seeking engineers with expertise in all facets of autonomous navigation and practical computer vision – from algorithm development to technology selection to system integration and testing – and who are passionate about building, designing, and shipping projects that have a positive, enduring impact on millions of people worldwide. Interested candidates should send a resume and cover letter to [email protected].

|

| DEEP LEARNING VISION IMPLEMENTATIONS |

|

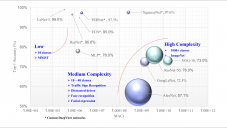

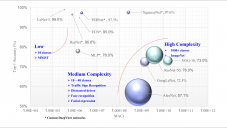

Implementing Vision with Deep Learning in Resource-constrained Designs

Deep neural networks have transformed the field of computer vision, delivering superior results on functions such as recognizing objects, localizing objects within a frame, and determining which pixels belong to which object. Even problems like optical flow and stereo correspondence, which had been solved quite well with conventional techniques, are now finding even better solutions using deep learning techniques. But deep learning is also resource-intensive, as measured by its compute requirements, memory and storage demands, network latency and bandwidth needs, and other metrics. These resource requirements are particularly challenging in embedded vision designs, which often have stringent size, weight, cost, power consumption and other constraints. This article from the Embedded Vision Alliance and member companies Au-Zone Technologies, BDTI and Synopsys reviews deep learning implementation options, including heterogeneous processing, network quantization, and software optimization. Sidebar articles present case studies on deep learning for ADAS applications and object recognition.

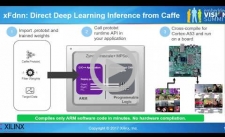

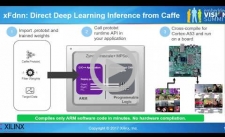

Caffe to Zynq: State-of-the-Art Machine Learning Inference Performance in Less Than 5 Watts

Machine learning research is advancing daily with new network architectures, making it difficult to choose the best CNN algorithm for a particular application. With this rapid rate of change in algorithms, embedded system developers who require high performance and low power consumption are increasingly considering Zync SoCs. Zynq SoCs are ideal for efficient CNN implementation as they allow creation of custom network circuitry in hardware, tuned exactly to the needs of the algorithm. The result is state-of-the-art performance-per-watt that outstrips CPU- and GPU-based embedded systems. In this talk, Vinod Kathail, Distinguished Engineer and leader of the Embedded Vision team at Xilinx, presents a method for easily migrating a CNN running in Caffe to an efficient Zynq-based embedded vision system utilizing Xilinx’s new reVISION software stack.

|

| COMPUTER VISION FOR COMPUTATIONAL PHOTOGRAPY |

|

Computer Vision in Surround View Applications

The ability to "stitch" together (offline or in real-time) multiple images taken simultaneously by multiple cameras or sequentially by a single camera, capturing varying viewpoints of a scene, is becoming an increasingly appealing (and in many cases necessary) capability in an expanding variety of applications. High quality of results is a critical requirement, one that's a particular challenge in price-sensitive consumer and similar applications due to their cost-driven limitations in optics, image sensors, and other components. And, as this technical article from the Alliance and member companies AMD and videantis explains, quality and cost aren't the sole factors that bear consideration in a design; power consumption, size and weight, latency, and other attributes are also critical.

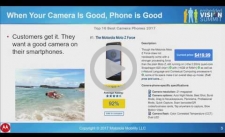

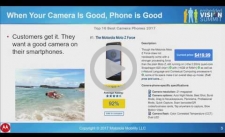

Designing and Implementing Camera ISP Algorithms Using Deep Learning and Computer Vision

Once we began to rely on our phone cameras as the primary means of sharing memories, emotions and important events in our lives, the quality of images became critical to a positive user experience. Tuning the mobile camera became a kind of black art, which grew in complexity with each advance in camera components such as sensors, lenses, voice coil motors and image signal processors. This many-dimensional optimization problem is further complicated by constraints imposed on mobile cameras due to the small size of the phone. Together, this complexity and these constraints create a rather complicated problem that needs to be solved faster and faster with each successive product cycle. Motorola/Lenovo looks at this situation as an opportunity to apply its engineering skills to achieve great image quality faster. In this talk, Val Marchevsky, Senior Director of Engineering at Motorola, explores intelligent tuning of image signal processing components, such as auto white balance, using deep learning. He also discusses applying computer vision for image enhancement technologies such as high dynamic range imaging.

|

| UPCOMING INDUSTRY EVENTS |

|

Embedded World Exhibition and Conference: February 27-March 1, 2018, Nuremberg, Germany

Embedded Vision Summit: May 22-24, 2018, Santa Clara, California

More Events

|

| DEEP LEARNING FOR COMPUTER VISION WITH TENSORFLOW TRAINING CLASSES |