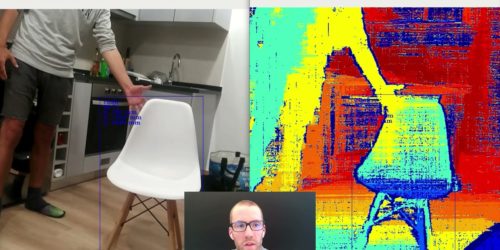

Algolux Demonstration of Highly Robust Computer Vision for All Conditions with the Eos Embedded Perception Software

Dave Tokic, Vice President of Marketing and Strategic Partnerships at Algolux, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, he shows highly robust computer vision for all conditions using the Eos embedded perception software. The Eos end-to-end vision architecture enables co-design of imaging and detection […]