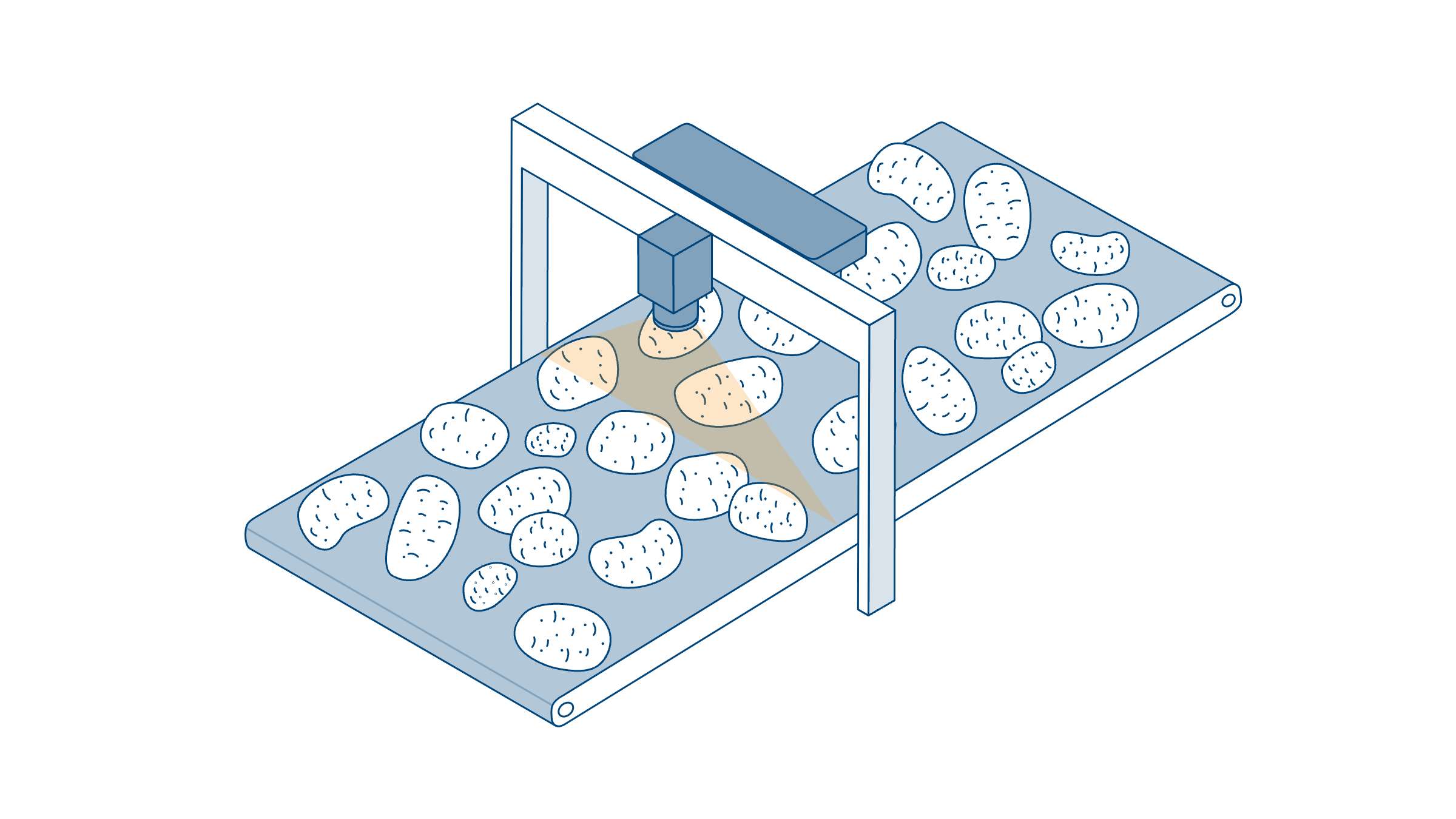

SWIR Vision Systems in Agricultural Production

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler.

Improved produce inspection through short-wave infrared light

Ensuring the quality of fruits and vegetables such as apples or potatoes is crucial for meeting market standards and consumer expectations. Traditional inspection methods are often based only on visual […]
