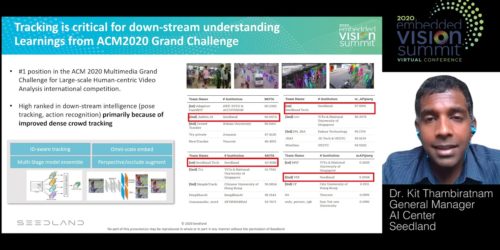

“Multi-modal Re-identification: IOT + Computer Vision for Residential Community Tracking,” a Presentation from Seedland

Kit Thambiratnam, General Manager of the Seedland AI Center, presents the “Multi-modal Re-identification: IOT + Computer Vision for Residential Community Tracking” tutorial at the September 2020 Embedded Vision Summit. The recent COVID-19 outbreak necessitated community monitoring, such as tracking quarantined residents and tracking close-contact interactions with sick individuals. High-density communities also have […]