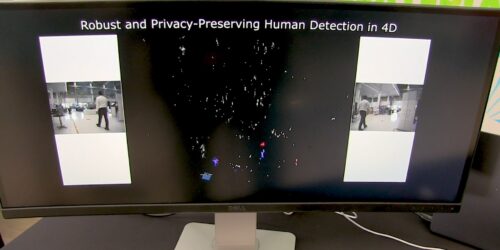

Waveye Demonstration of Ultra-high Resolution LIR Imaging Technology

Levon Budagyan, CEO of Waveye, demonstrates the company’s latest edge AI and vision technologies and products at the September 2024 Edge AI and Vision Alliance Forum. Specifically, Budagyan demonstrates his company’s ultra-high resolution LIR imaging technology, focused on key robotics applications: robust, privacy-preserving human detection for robots; 4D microwave imaging in high resolution for indoor […]
