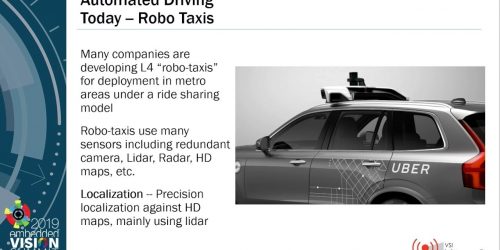

“Selecting the Right Imager for Your Embedded Vision Application,” a Presentation from Capable Robot Components

Chris Osterwood, Founder and CEO of Capable Robot Components, presents the “Selecting the Right Imager for Your Embedded Vision Application” tutorial at the May 2019 Embedded Vision Summit. The performance of your embedded vision product is inextricably linked to the imager and lens it uses. Selecting these critical components is sometimes overwhelming due to the […]