“Enabling Software Developers to Harness FPGA Compute Accelerators,” a Presentation from Intel

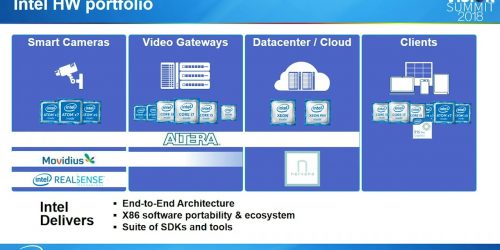

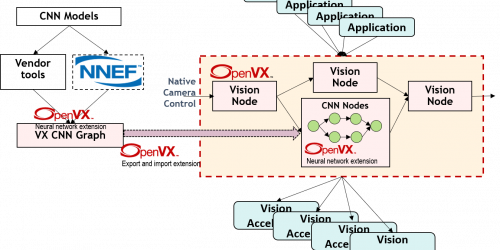

Bernhard Friebe, Senior Director of Marketing for the Programmable Solutions Group at Intel, presents the “Enabling Software Developers to Harness FPGA Compute Accelerators” tutorial at the May 2018 Embedded Vision Summit. FPGAs play a critical role in heterogeneous compute platforms as flexible, reprogrammable, multi-function accelerators. They combine custom-hardware performance with the programmability of software. The […]