Synopsys Demonstration of Deep Learning Inference and Sparse Optical Flow

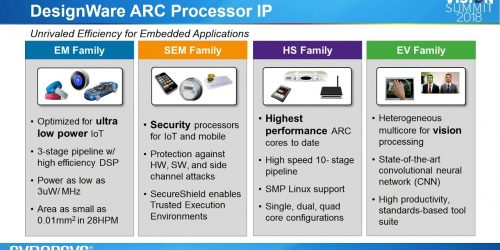

Gordon Cooper, product marketing manager at Synopsys, delivers a product demonstration at the May 2018 Embedded Vision Summit. Specifically, Cooper demonstrates combining deep learning with traditional computer vision by using the DesignWare EV6x Embedded Vision Processor's vector DSP and CNN engine. The tightly integrated CNN engine executes deep learning inference (using TinyYOLO, but any graph […]