Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

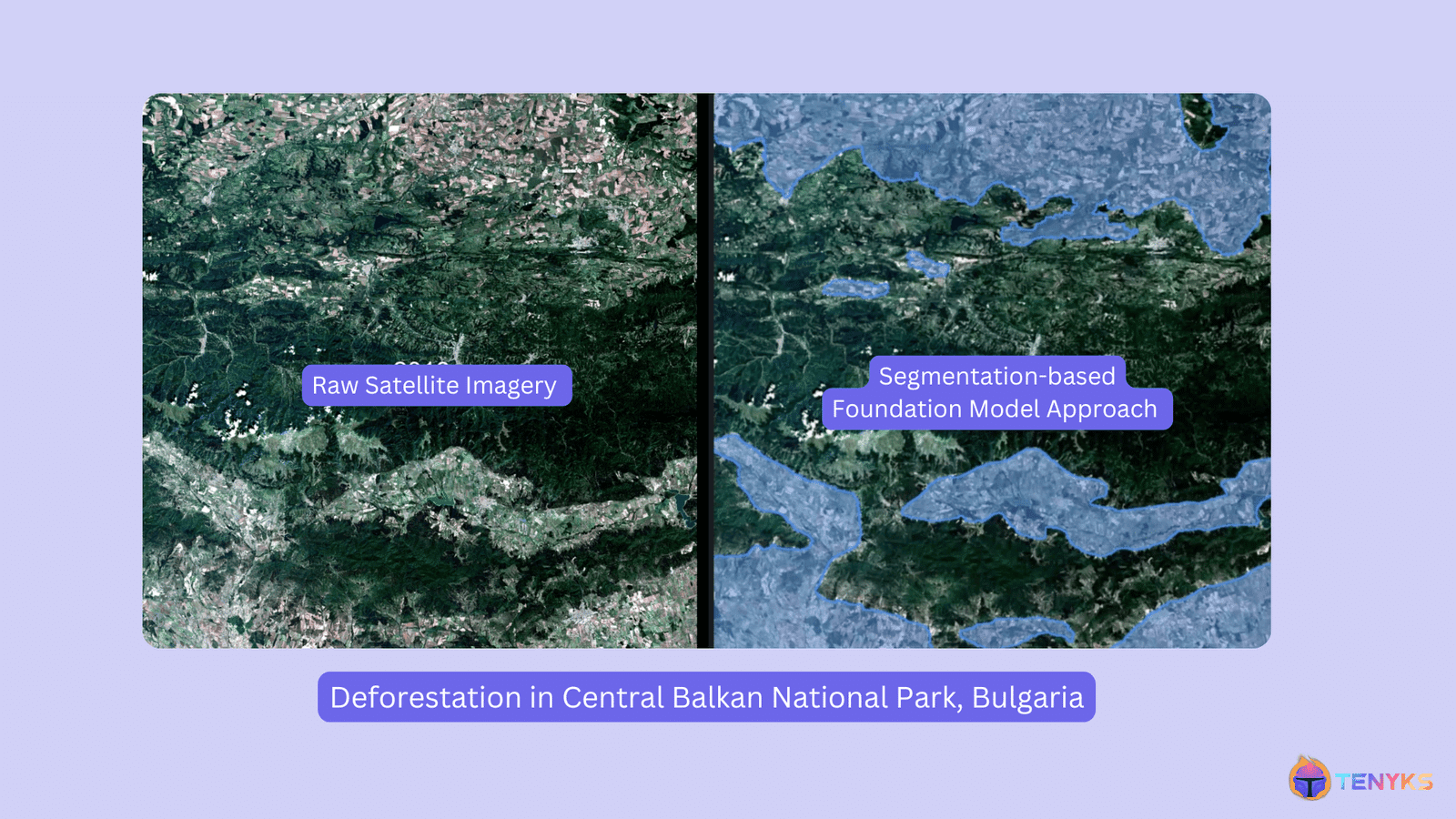

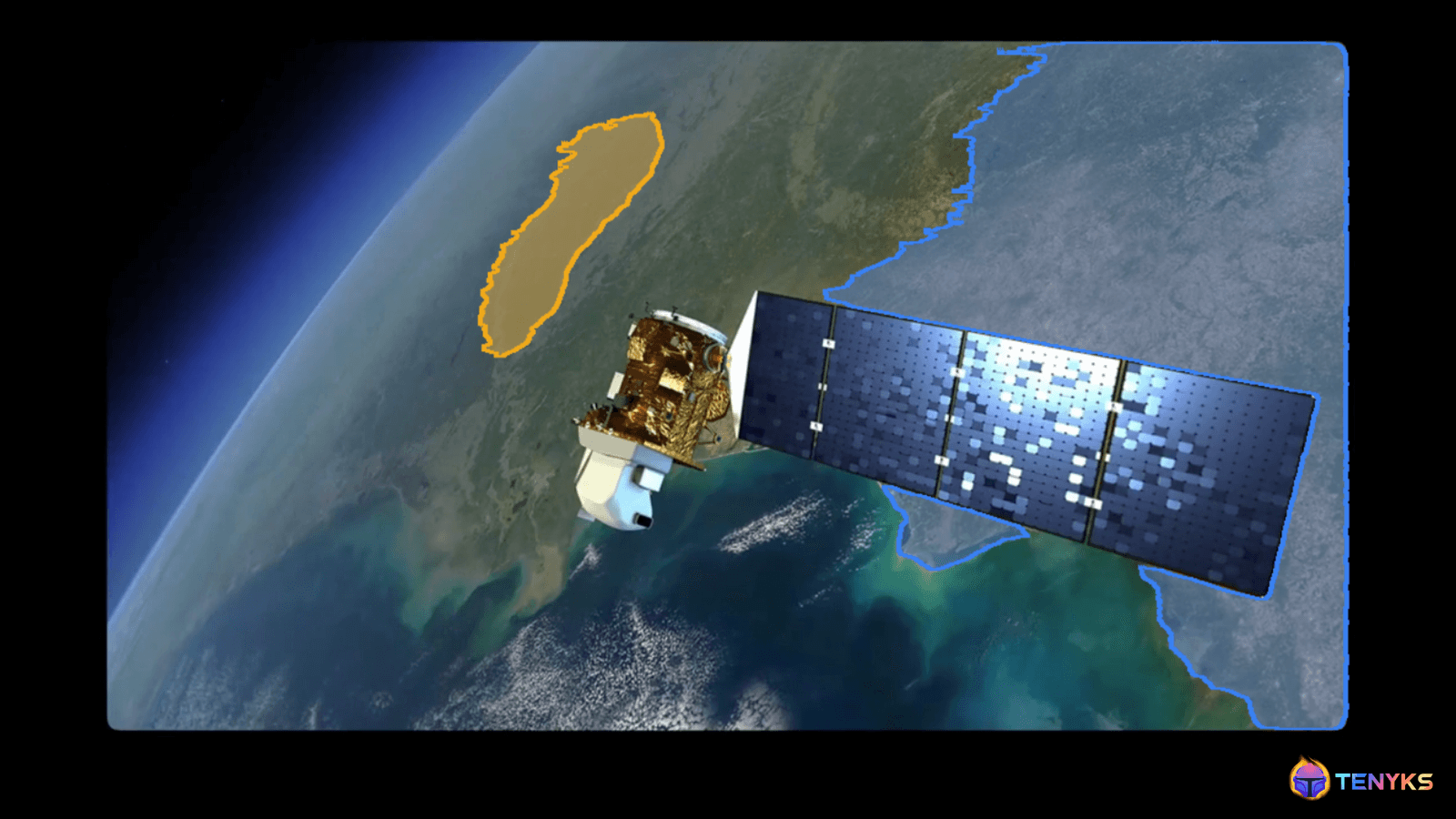

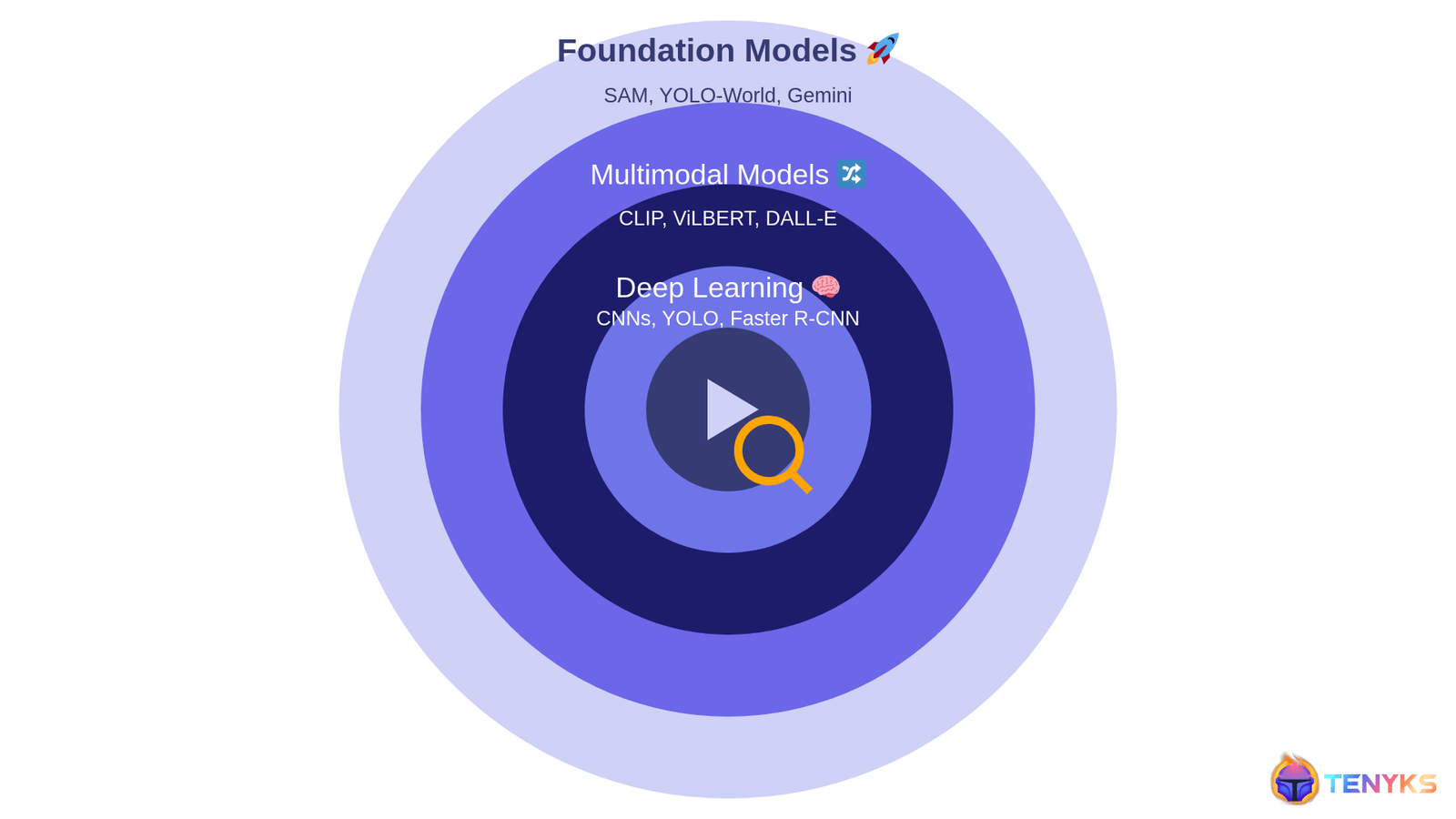

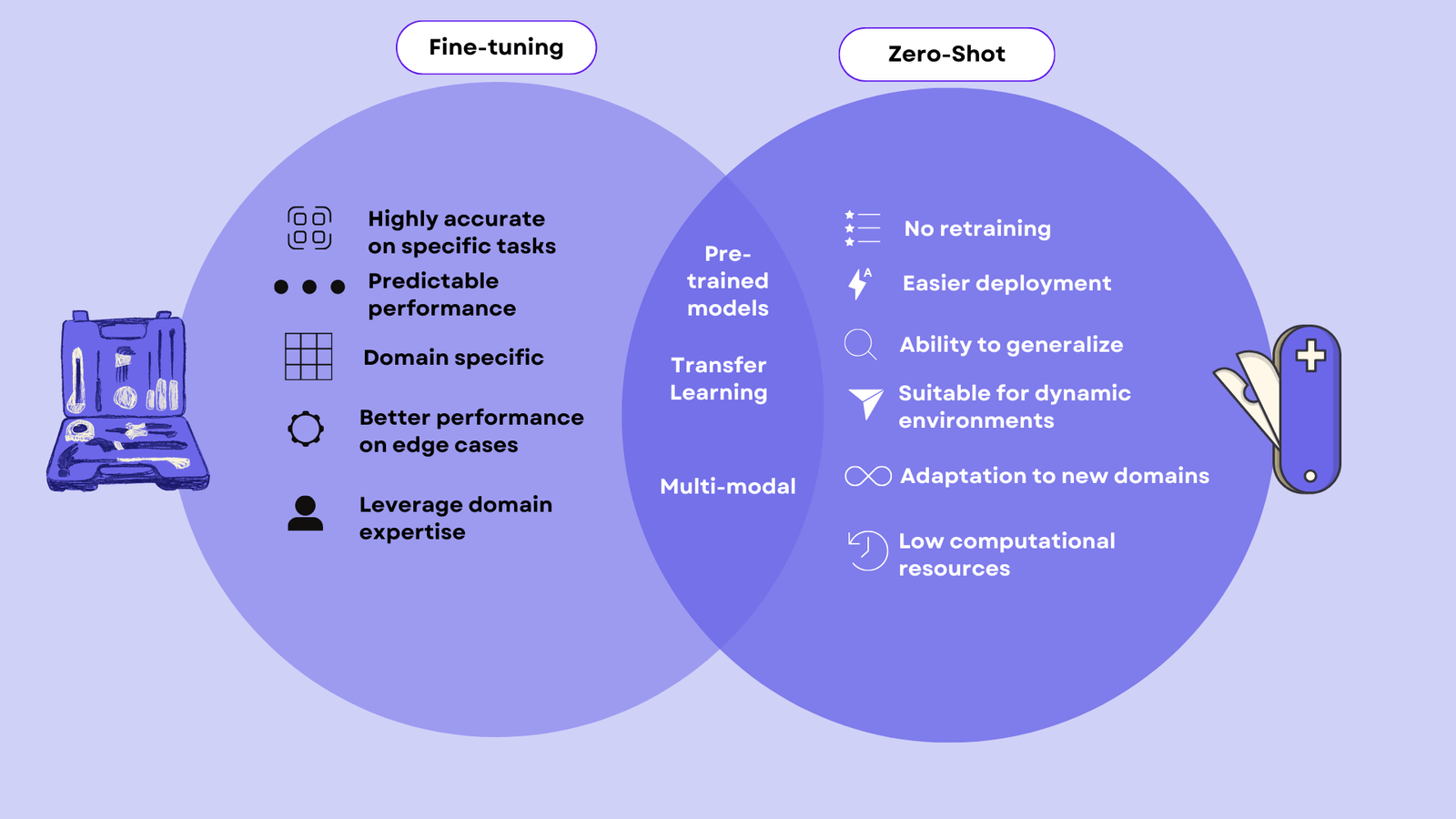

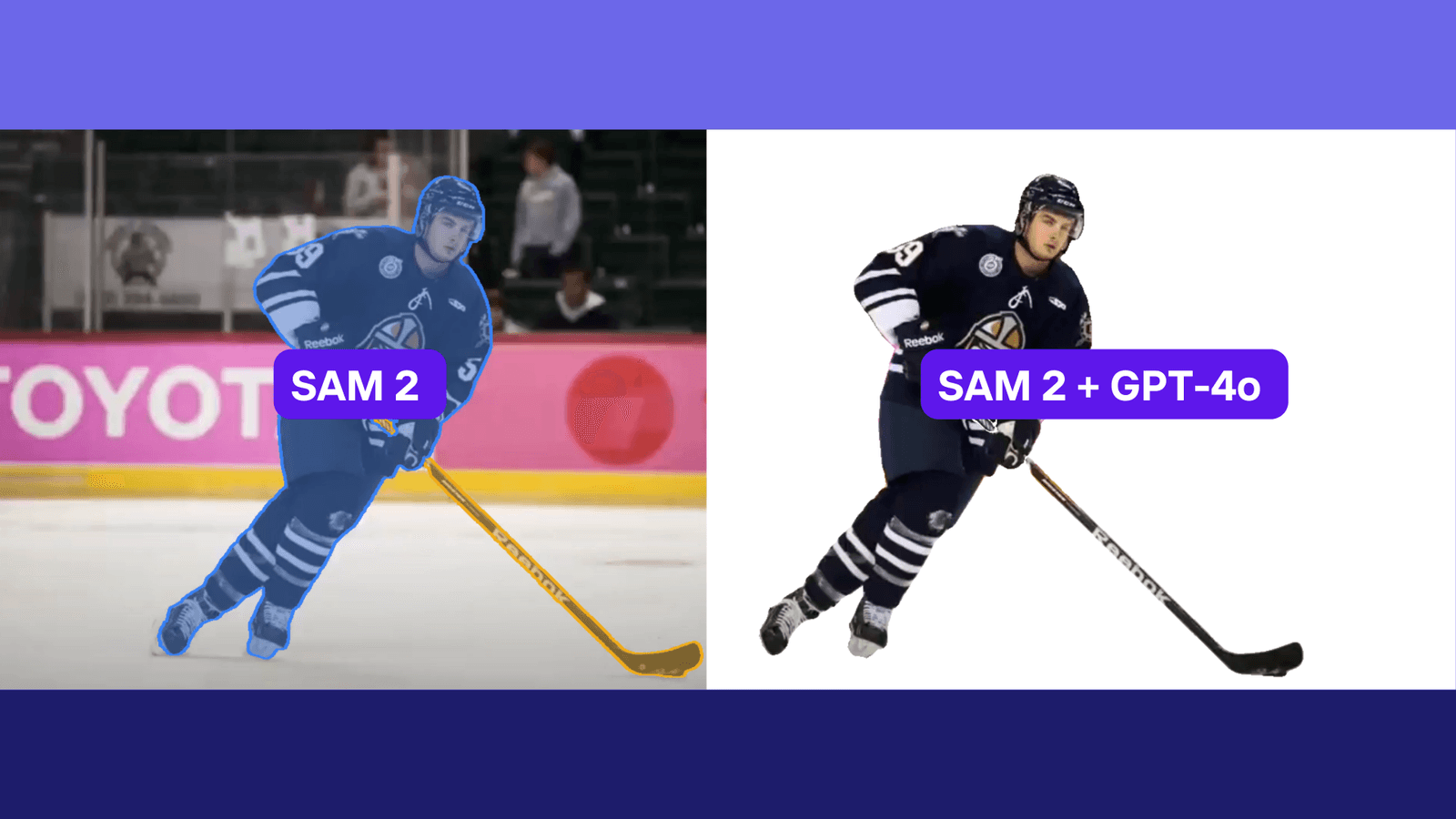

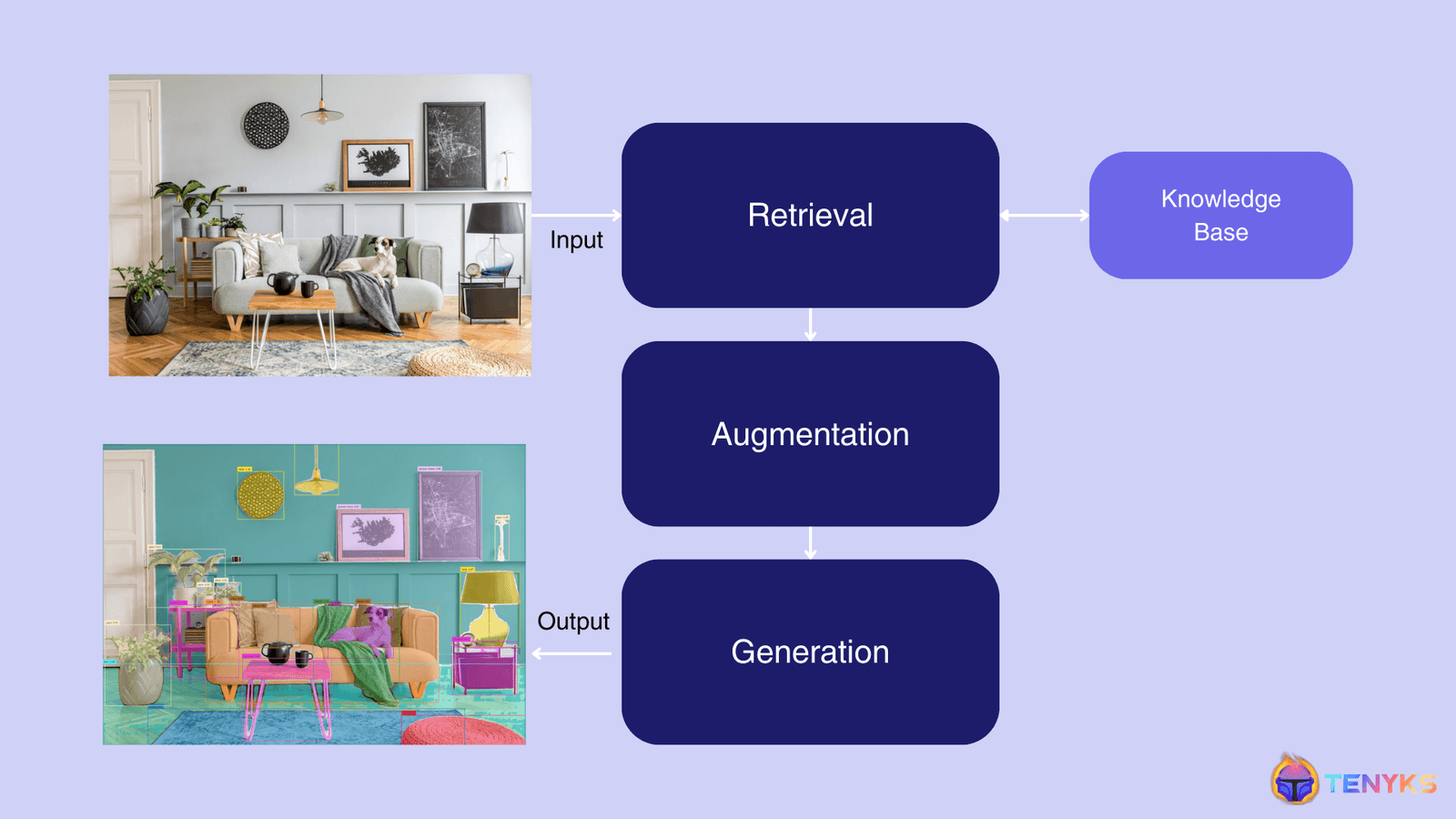

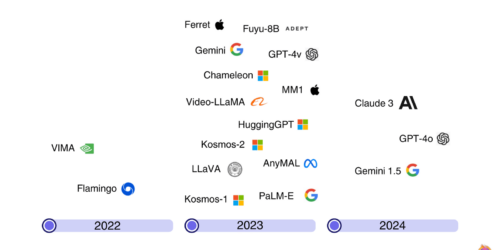

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how foundation models can be leveraged to track deforestation patterns. Building on the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have […]
