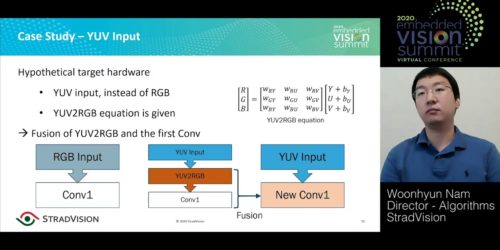

“Designing Bespoke CNNs for Target Hardware,” a Presentation from StradVision

Woonhyun Nam, Algorithms Director at StradVision, presents the “Designing Bespoke CNNs for Target Hardware” tutorial at the September 2020 Embedded Vision Summit. Due to the great success of deep neural networks (DNNs) in computer vision and other machine learning applications, numerous specialized processors have been developed to execute these algorithms with reduced cost and power […]
