Key Insights from Data Centre World 2025: Sustainability and AI

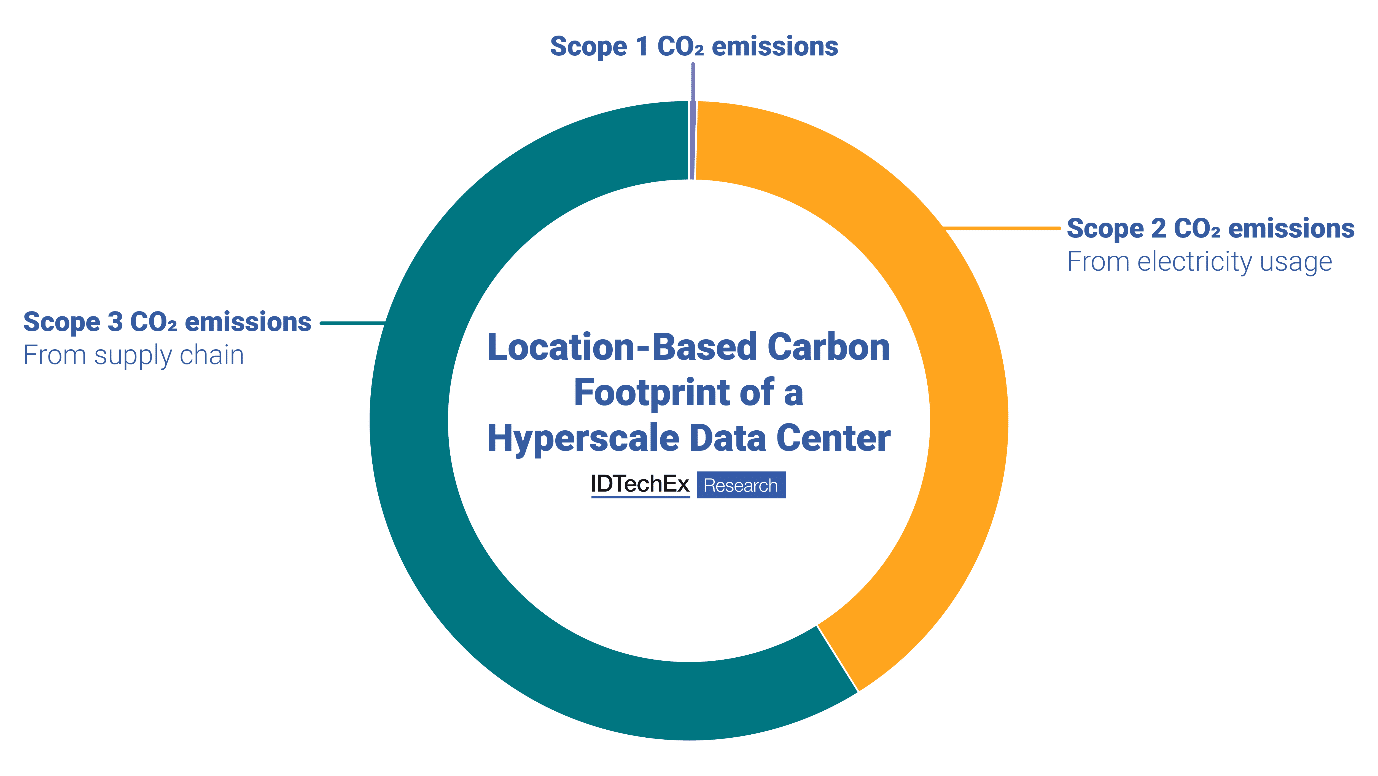

Scope 2 (power-based) and Scope 3 (supply chain) emissions are the biggest contributors to a data center’s carbon footprint. IDTechEx’s Sustainability for Data Centers report explores which technologies can reduce these emissions. Major data center players converged in London, UK, in mid-March for the 2025 edition of Data Centre World. Co-located […]
