Productionizing State-of-the-art Models at the Edge for Smart City Use Cases (Part I)

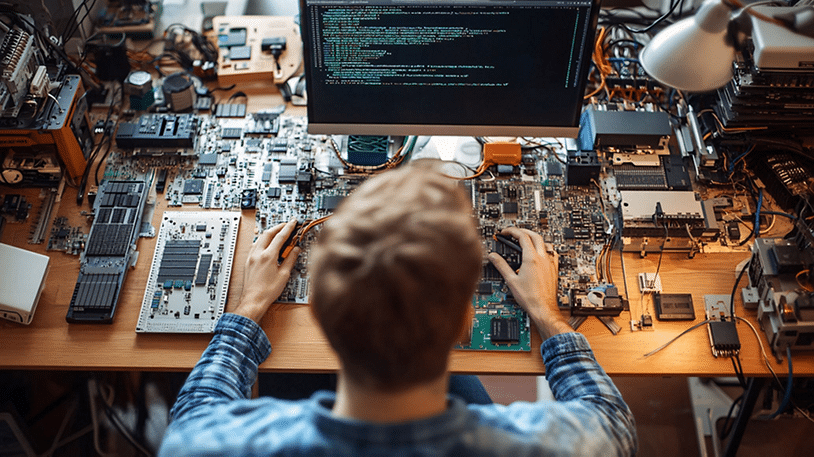

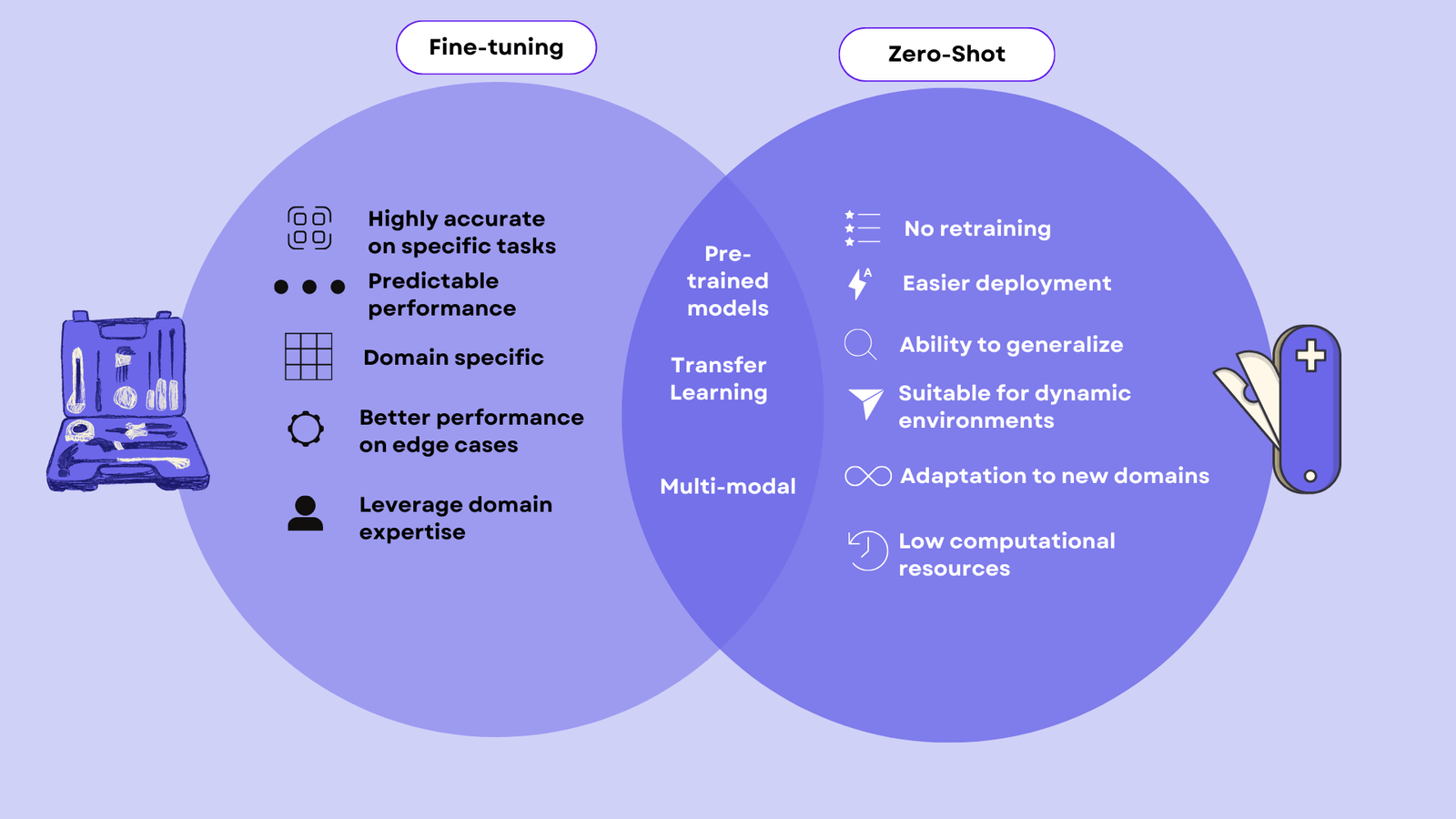

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA.

Approaches to productionizing models for edge applications can vary greatly depending on user priorities, with some models not requiring model optimization at all. An organization can choose pre-existing models designed specifically for edge use cases with performance […]
