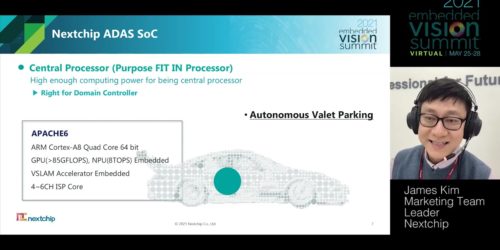

“Deploying an Autonomous Valet Parking Solution with Cameras Using AI,” a Presentation from Nextchip and VADAS

James Kim, Marketing Team Leader at Nextchip, and Peter Lee, CEO of VADAS, co-present the “Deploying an Autonomous Valet Parking Solution with Cameras Using AI” tutorial at the May 2021 Embedded Vision Summit. In this talk, Nextchip’s Kim explains why his company targeted autonomous valet parking (AVP) with its newest APACHE6 SoC, and how Nextchip […]