Combining an ISP and Vision Processor to Implement Computer Vision

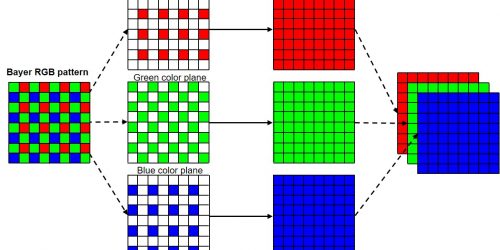

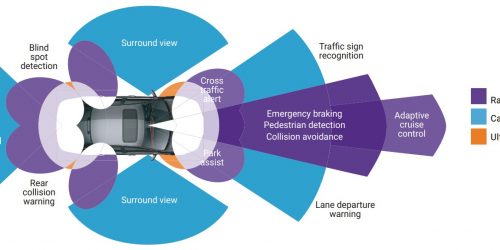

An ISP (image signal processor) combined with one or more vision processors can deliver more robust computer vision processing than a vision processor can provide on its own. However, an ISP operating in a computer vision-optimized configuration may differ from one functioning under the historical assumption that its outputs would be intended for […]