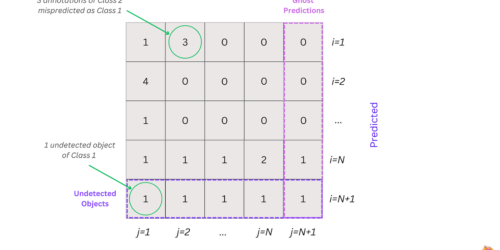

Multiclass Confusion Matrix for Object Detection

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. We introduce the Multiclass Confusion Matrix for Object Detection, a table that supports failure analysis by surfacing errors that would otherwise go unnoticed, such as edge cases or non-representative issues in your data. In this article we introduce […]
