AutoML Decoded: The Ultimate Guide and Tools Comparison

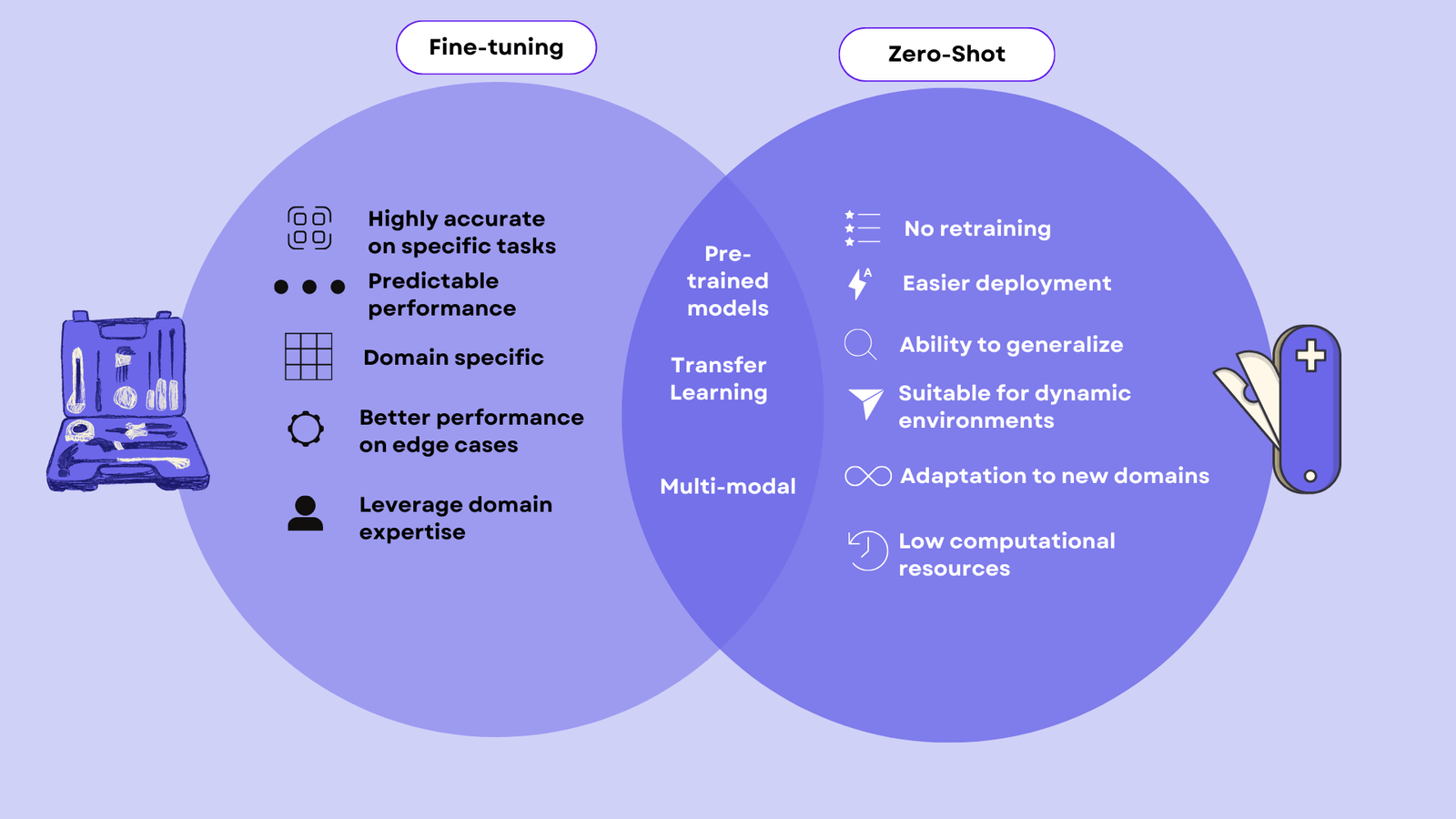

This article was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs.

The quest for efficient and user-friendly solutions has led to the emergence of a game-changing concept: Automated Machine Learning (AutoML). AutoML is the process of automating the tasks involved in the entire Machine Learning lifecycle, such as data […]