AI On the Road: Why AI-powered Cars are the Future

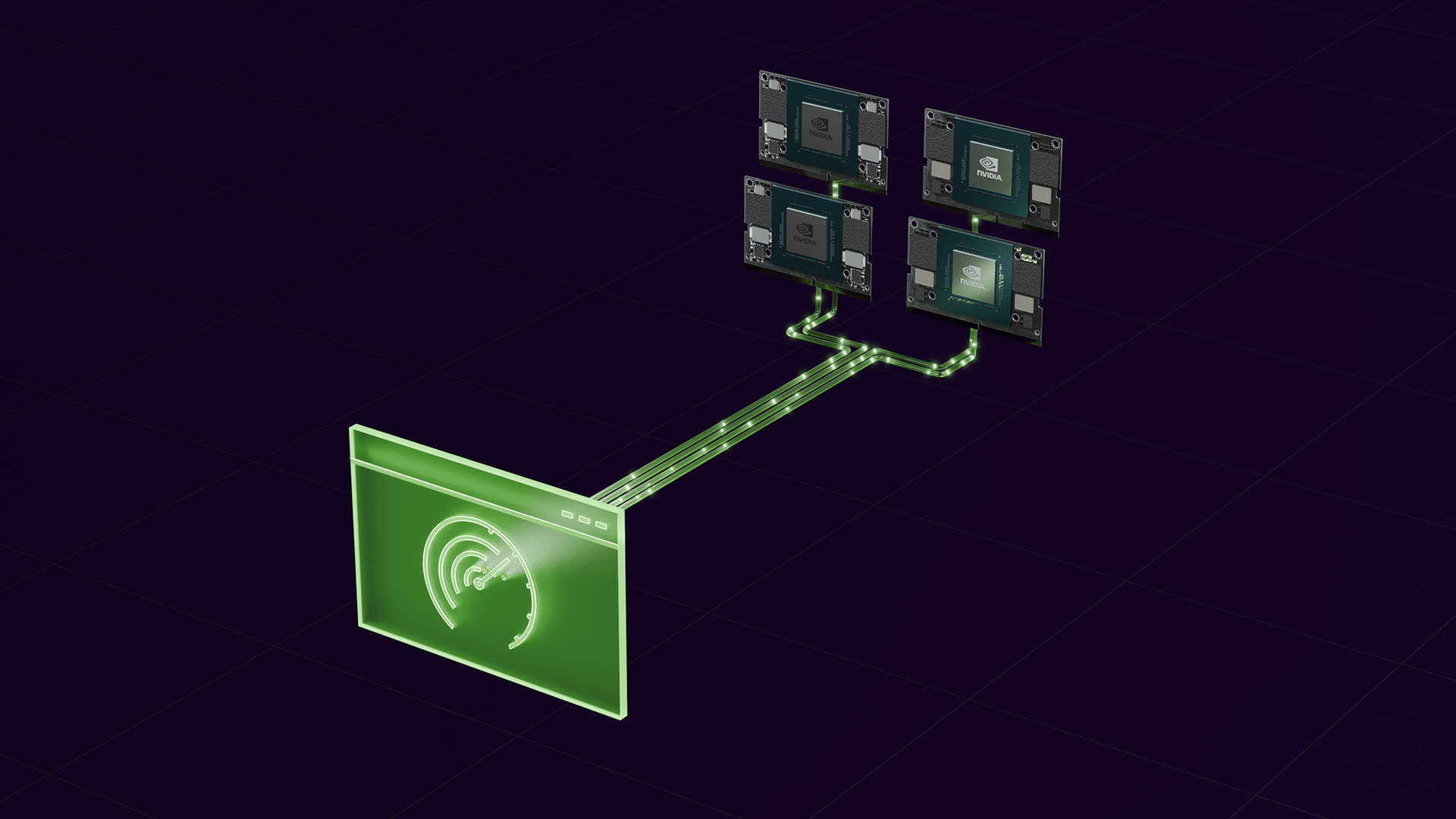

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. AI transforms your driving experience in unexpected ways, as showcased by Qualcomm Technologies collaborations. As automotive technology rapidly advances, consumers are looking for vehicles that deliver AI-enhanced experiences through conversational voice assistants and sophisticated user interfaces. Automotive […]
