“Architecting a Smart Home Monitoring System with Millions of Cameras,” a Presentation from Comcast

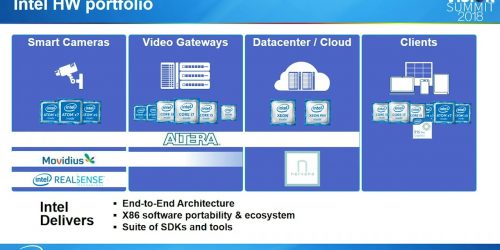

Hongcheng Wang, Senior Manager of Technical R&D at Comcast, presents the “Architecting a Smart Home Monitoring System with Millions of Cameras” tutorial at the May 2018 Embedded Vision Summit. Video monitoring is a critical capability for the smart home. With millions of cameras streaming to the cloud, efficient and scalable video analytics becomes essential. To […]