Transformer Models and NPU IP Co-optimized for the Edge

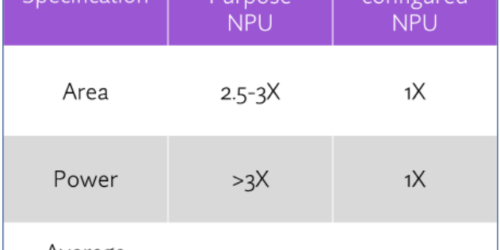

Transformers are taking the AI world by storm, as evidenced by super-intelligent chatbots and search tools, as well as image and art generators. Like convolutional networks, they are built on neural net technology, but they are programmed quite differently from the more widely understood convolution methods. Now transformers are starting to make their way into edge applications. […]
