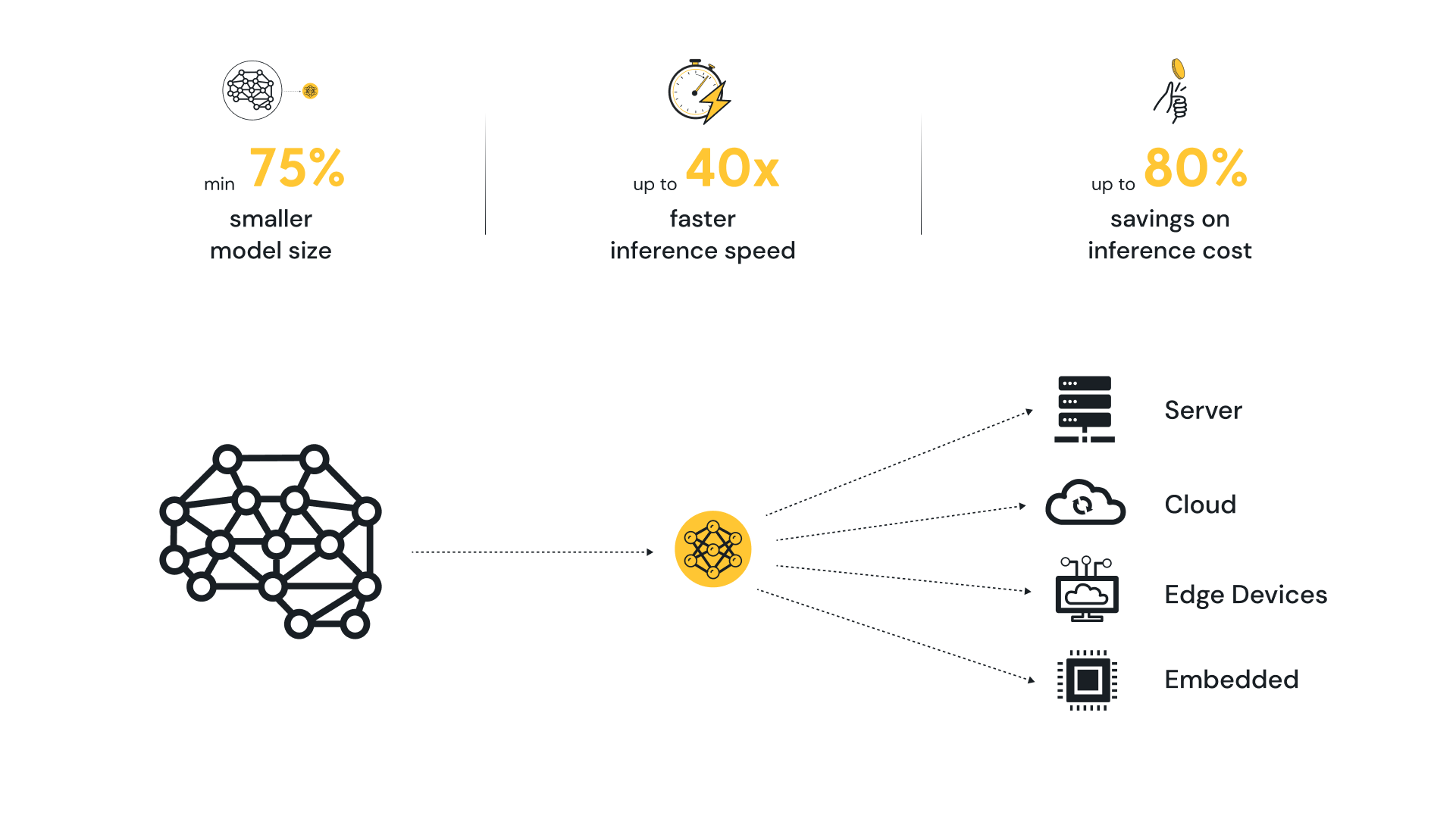

CLIKA helps enterprises of all sizes productionize their AI faster and at scale by providing a toolkit, the Auto Compression Engine (ACE), that automatically compresses AI models and accelerates inference on any hardware, including hardware with limited compute resources such as edge devices. ACE is powered by our proprietary compression technology, which dramatically reduces the size of AI models without compromising performance. With CLIKA, enterprises not only save up to 80% in inference costs but also achieve up to 40 times faster inference speed, all while reducing the model size by at least 75%. CLIKA’s mission is to embed intelligence in every device, everywhere.

CLIKA

Recent Content by Company

Productionizing State-of-the-art Models at the Edge for Smart City Use Cases (Part I)

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. Approaches to productionizing models for edge applications can vary greatly depending on user priorities, with some models not requiring model optimization at all. An organization can choose pre-existing models designed specifically for edge use cases with performance […]

Alliance Members at 2025 CES

The Edge AI and Vision Alliance 2025 CES Directory for game-changing computer vision and AI technologies. Many Alliance Member companies will be showing off the latest building-block technologies that enable new capabilities for machines that see! CES is huge, so we’ve created a handy checklist of these companies and where to find them, including how […]

Fully Sharded Data Parallelism (FSDP)

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. In this blog we will explore Fully Sharded Data Parallelism (FSDP), a technique for efficiently training large neural network models in a distributed manner. We’ll examine FSDP from a bird’s eye […]

Why CLIKA’s Auto Lightweight AI Toolkit is the Key to Unlocking Hardware-agnostic AI

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. Recent advances in artificial intelligence (AI) research have democratized access to models like ChatGPT. While this is good news in that it has urged organizations and companies to start their own AI projects either to improve business […]

On Finding CLIKA: the Founders’ Journey

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. CLIKA, a tinyAI startup, was founded based on the realization that the future of artificial intelligence (AI) would depend on how well and quickly businesses would be able to scale and productionize their AI. Ben Asaf was […]