Automotive Applications for Embedded Vision

Vision products in automotive applications can make us better and safer drivers

Vision products in automotive applications can enhance the driving experience, making us better and safer drivers by monitoring both the driver and the road.

Driver monitoring applications use computer vision to ensure that the driver remains alert and awake while operating the vehicle. These systems can monitor head movement and body language for signs that the driver is drowsy and therefore a threat to others on the road. They can also watch for distracting behaviors such as texting or eating, responding with a friendly reminder that encourages the driver to refocus on the road.
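As a rough illustration of the head-movement monitoring described above, the sketch below flags possible drowsiness when the head stays tilted downward for a large fraction of recent frames. It assumes an upstream head-pose estimator already supplies one pitch angle per frame (negative meaning the head is tilted down). The class name `HeadDroopMonitor` and the window, angle and ratio values are hypothetical, chosen for illustration rather than taken from any production system.

```python
from collections import deque


class HeadDroopMonitor:
    """Hypothetical drowsiness heuristic based on head pitch.

    Keeps a sliding window of per-frame "drooped" flags and reports an
    alert when the fraction of drooped frames reaches a ratio.
    """

    def __init__(self, window: int = 90, droop_deg: float = -20.0, ratio: float = 0.5):
        self.samples = deque(maxlen=window)  # most recent per-frame droop flags
        self.droop_deg = droop_deg           # pitch at or below this counts as drooped
        self.ratio = ratio                   # drooped fraction that triggers an alert

    def update(self, pitch_deg: float) -> bool:
        """Add one frame's pitch (negative = head tilted down); return alert state."""
        self.samples.append(pitch_deg <= self.droop_deg)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet to judge
        return sum(self.samples) / len(self.samples) >= self.ratio


if __name__ == "__main__":
    # Tiny window so the behavior is visible in four frames.
    monitor = HeadDroopMonitor(window=4, droop_deg=-20.0, ratio=0.5)
    states = [monitor.update(p) for p in [0.0, -25.0, -30.0, 5.0]]
    print(states)  # [False, False, False, True]
```

A sliding-window ratio, rather than a single-frame check, keeps one brief downward glance (for example, at the instrument cluster) from triggering an alert. At 30 frames per second, the default 90-frame window covers about three seconds.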

In addition to monitoring activity inside the vehicle, exterior applications such as lane departure warning systems can use video with lane detection algorithms to recognize lane markings and road edges and estimate the car's position within the lane. The driver can then be warned of an unintentional lane departure. Other solutions read roadside warning signs and alert the driver when they are not heeded, and vision is also used for collision mitigation, blind-spot detection, park and reverse assist, self-parking vehicles and event-data recording.
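The warning step that follows lane detection can be sketched as below. This is a minimal sketch assuming the lane detector has already converted the left and right boundaries into lateral offsets in meters from the vehicle centerline (positive to the left). `LaneEstimate`, `lane_departure_warning` and the width and margin defaults are hypothetical. Treating an active turn signal as a sign of an intentional lane change is one common way to single out unintentional departures, not the only one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneEstimate:
    """Hypothetical lane-detector output: lateral positions of the lane
    boundaries in meters from the vehicle centerline (positive = left)."""
    left_y: float   # e.g. +1.8 for a centered car in a 3.6 m lane
    right_y: float  # e.g. -1.8


def lane_departure_warning(
    lane: LaneEstimate,
    vehicle_width: float = 1.8,   # illustrative value
    margin: float = 0.2,          # illustrative warning distance in meters
    left_signal: bool = False,
    right_signal: bool = False,
) -> Optional[str]:
    """Return 'left' or 'right' if the car is about to cross that boundary
    without the matching turn signal; otherwise return None."""
    half_width = vehicle_width / 2.0
    # Gap between each side of the vehicle body and the matching boundary.
    left_gap = lane.left_y - half_width
    right_gap = -lane.right_y - half_width
    if left_gap < margin and not left_signal:
        return "left"
    if right_gap < margin and not right_signal:
        return "right"
    return None


if __name__ == "__main__":
    print(lane_departure_warning(LaneEstimate(1.8, -1.8)))  # None (centered)
    print(lane_departure_warning(LaneEstimate(1.0, -2.6)))  # left (drifting left)
    print(lane_departure_warning(LaneEstimate(1.0, -2.6), left_signal=True))  # None
```

A production system would also consider lateral velocity or time to line crossing rather than position alone, so that a car moving slowly near a boundary is treated differently from one drifting toward it quickly.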

Eventually, this technology will lead to cars with self-driving capability; Google, for example, is already testing prototypes. However, many automotive industry experts believe that, at least in the near term, the goal of vision in vehicles is not to eliminate the driving experience but simply to make it safer.

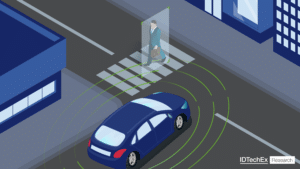

The Automotive Radar Market: Three Key Takeaways

Front-mounted short-range side radars enabling junction pedestrian automatic emergency braking will be a key source of automotive radar market growth. The automotive industry has been using radar for two and a half decades. During that time, it has transformed from enabling luxury features on the most expensive cars, to being used ubiquitously for basic

Robotaxis on the Rise: Exploring Autonomous Vehicles

Robotaxis are proving they can offer driverless services in certain cities as a means of accessible, modern public transport. IDTechEx states in its latest report, “Autonomous Vehicles Market 2025-2045: Robotaxis, Autonomous Cars, Sensors”, that testing is taking place worldwide, with China currently seeing the most commercial deployment. The report explores the commercial readiness

Single- vs. Multi-camera Systems: The Ultimate Guide

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Single- and multi-camera setups play a vital role in today’s vision systems, enhancing applications such as automated sports broadcasting, industrial robots, traffic monitoring and more. Explore the key differences, advantages, and real-world applications of

Intel Accelerates Software-defined Innovation with Whole-vehicle Approach

At CES 2025, Intel unveils new adaptive control solution, next-gen discrete graphics and AWS virtual development environment. What’s New: At CES, Intel unveiled an expanded product portfolio and new partnerships designed to accelerate automakers’ transitions to electric and software-defined vehicles (SDVs). Intel now offers a whole-vehicle platform, including high-performance compute, discrete graphics, artificial intelligence (AI), power

NVIDIA DRIVE Hyperion Platform Achieves Critical Automotive Safety and Cybersecurity Milestones for AV Development

Adopted and Backed by Automotive Manufacturers and Safety Authorities, Latest Iteration to Feature DRIVE Thor on NVIDIA Blackwell Running NVIDIA DriveOS. January 6, 2025, CES: NVIDIA today announced that its autonomous vehicle (AV) platform, NVIDIA DRIVE AGX™ Hyperion, has passed industry-safety assessments by TÜV SÜD and TÜV Rheinland, two of the industry’s

NXP Accelerates the Transformation to Software-defined Vehicles (SDV) with Agreement to Acquire TTTech Auto

NXP strengthens its automotive business with a leading software solution provider specialized in the systems, safety and security required for SDVs. TTTech Auto complements and accelerates the NXP CoreRide platform, enabling automakers to reduce complexity, maximize system performance and shorten time to market. The acquisition is the next milestone in NXP’s strategy to be the

Qualcomm Brings Industry-leading AI Innovations and Broad Collaborations to CES 2025 Across PC, Automotive, Smart Home and Enterprises

Highlights: Spotlight on bringing edge AI across devices and computing spaces, including PC, automotive, smart home and into enterprises broadly, with global ecosystem partners at the show. In PC, continued traction for the Snapdragon X Series, the launch of the new Snapdragon X platform, and the launch of a new desktop form factor and NPU-powered

New Edge AI-enabled Radar Sensor from TI Empowers Automakers to Reimagine In-cabin Experiences

News highlights: TI enhances detection accuracy with the industry’s first single-chip 60GHz millimeter-wave (mmWave) radar sensor to support three in-cabin sensing applications enabled by edge artificial intelligence (AI). Auto manufacturers can deliver premium audio experiences with a highly integrated automotive Arm®-based microcontroller (MCU) and processor with TI’s vector-based C7x digital signal processor (DSP) core to

Nextchip Demonstration of an ISP-based Thermal Imaging Camera

Barry Fitzgerald, local representative for Nextchip, demonstrates the company’s latest edge AI and vision technologies and products at the December 2024 Edge AI and Vision Alliance Forum. Specifically, Fitzgerald demonstrates a thermal imaging camera design based on the company’s ISP. The approach shown enhances night-time detection of pedestrians and animals beyond current visual capabilities, which

Automotive Radar Market 2025-2045: Robotaxis and Autonomous Cars

For more information, visit https://www.idtechex.com/en/research-report/automotive-radar-market-2025-2045-robotaxis-and-autonomous-cars/1061. The automotive radar market is forecast to hit 500 million annual sales in 2041. IDTechEx’s report, Automotive Radar Market 2025-2045: Robotaxis & Autonomous Cars, predicts the automotive radar market will hit 500 million annual sales in 2041. The market share today is dominated by the big tier-one companies like Continental,