Gesture Control Functions

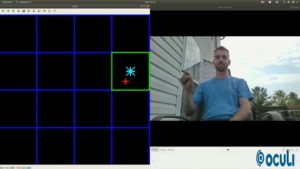

Oculi Enables Near-zero Lag Performance with an Embedded Solution for Gesture Control

Immersive extended reality (XR) experiences let users seamlessly interact with virtual environments. These experiences require real-time gesture control and eye tracking while running in resource-constrained environments such as on head-mounted displays (HMDs) and smart glasses. These capabilities are typically implemented using computer vision technology, with imaging sensors that generate lots of data to be moved

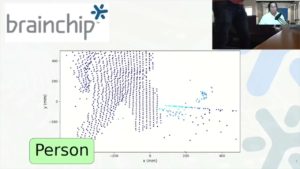

BrainChip Demonstration of AI at the Sensor with 3D Point Cloud Solutions Based on the Akida Neural Processor

Todd Vierra, Director of Customer Engagements at BrainChip, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Vierra demonstrates AI at the sensor with 3D point cloud solutions based on the company’s Akida event-based neural processor (NPU). Utilizing BrainChip’s Akida NPU, you can leverage advanced neuromorphic

May 2021 Embedded Vision Summit Slides

The Embedded Vision Summit was held online on May 25-28, 2021, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in PDF form. To…

September 2020 Embedded Vision Summit Slides

The Embedded Vision Summit was held online on September 15-25, 2020, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in PDF form. To…

May 2019 Embedded Vision Summit Slides

The Embedded Vision Summit was held on May 20-23, 2019, in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in…

May 2018 Embedded Vision Summit Slides

The Embedded Vision Summit was held on May 21-24, 2018, in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in…

“Always-on Vision Becomes a Reality,” a Presentation from Qualcomm Research

Evgeni Gousev, Senior Director at Qualcomm Research, presents the "Always-On Vision Becomes a Reality" tutorial at the May 2017 Embedded Vision Summit. Intelligent devices equipped with human-like senses such as always-on touch, audio and motion detection have enabled a variety of new use cases and applications, transforming the way we interact with each other and

May 2017 Embedded Vision Summit Slides

The Embedded Vision Summit was held on May 1-3, 2017, in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in…

May 2016 Embedded Vision Summit Proceedings

The Embedded Vision Summit was held on May 2-4, 2016, in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in…

“Techniques for Efficient Implementation of Deep Neural Networks,” a Presentation from Stanford

Song Han, a graduate student at Stanford, delivers the presentation "Techniques for Efficient Implementation of Deep Neural Networks" at the March 2016 Embedded Vision Alliance Member Meeting. Han presents recent findings on techniques for the efficient implementation of deep neural networks.

Adding Precise Finger Gesture Recognition Capabilities to the Microsoft Kinect

CogniMem’s Chris McCormick, application engineer, demonstrates how the addition of general-purpose and scalable pattern recognition can be used to bring enhanced gesture control to the Microsoft Kinect. Envisioned applications include augmenting or eliminating the TV remote control, using American Sign Language for direct text translation, and expanding the game-playing experience. To process even more gestures

Vision in Wearable Devices: Enhanced and Expanded Application and Function Choices

A version of this article was originally published at EE Times' Embedded.com Design Line. It is reprinted here with the permission of EE Times. Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide

Practical Computer Vision Enables Digital Signage with Audience Perception

This article was originally published at Information Display Magazine. It is reprinted here with the permission of the Society for Information Display. Signs that see and understand the actions and characteristics of individuals in front of them can deliver numerous benefits to advertisers and viewers alike. Such capabilities were once only practical in research labs

Smart In-Vehicle Cameras Increase Driver and Passenger Safety

This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications. Cameras located in a vehicle's interior, coupled with cost-effective and power-efficient processors, can deliver abundant benefits to drivers and passengers alike. By Brian Dipert, Editor-in-Chief, Embedded Vision Alliance, and Tom Wilson, Vice President, Business Development

May 2014 Embedded Vision Summit Technical Presentation: “Vision-Based Gesture User Interfaces,” Francis MacDougall, Qualcomm

Francis MacDougall, Senior Director of Technology at Qualcomm, presents the "Vision-Based Gesture User Interfaces" tutorial at the May 2014 Embedded Vision Summit. The means by which we interact with the machines around us is undergoing a fundamental transformation. While we may still sometimes need to push buttons, touch displays and trackpads, and raise our voices,

Improved Vision Processors, Sensors Enable Proliferation of New and Enhanced ADAS Functions

This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications. Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of embedded

October 2013 Embedded Vision Summit Technical Presentation: “Vision-Based Gesture User Interfaces,” Francis MacDougall, Qualcomm

Francis MacDougall, Senior Director of Technology at Qualcomm, presents the "Vision-Based Gesture User Interfaces" tutorial within the "Vision Applications" technical session at the October 2013 Embedded Vision Summit East. MacDougall explains how gestures fit into the spectrum of advanced user interface options, compares and contrasts the various 2-D and 3-D technologies (vision and other) available