Object Tracking Functions

What is Depth of Field and Its Relevance in Embedded Vision?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Depth of Field (DoF) is crucial for embedded vision because it can improve a system’s ability to process and analyze visual data. It is influenced by factors such as aperture size, focal length, and more. Get…

Give AI a Look: Any Industry Can Now Search and Summarize Vast Volumes of Visual Data

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Accenture, Dell Technologies and Lenovo are among the companies tapping a new NVIDIA AI Blueprint to develop visual AI agents that can boost productivity, optimize processes and create safer spaces. Enterprises and public sector organizations around the…

“Embedded Vision Opportunities and Challenges in Retail Checkout,” an Interview with Zebra Technologies

Anatoly Kotlarsky, Distinguished Member of the Technical Staff in R&D at Zebra Technologies, talks with Phil Lapsley, Co-Founder and Vice President of BDTI and Vice President of Business Development at the Edge AI and Vision Alliance, for the “Embedded Vision Opportunities and Challenges in Retail Checkout” interview at the May…

“Cost-efficient, High-quality AI for Consumer-grade Smart Home Cameras,” a Presentation from Wyze

Lin Chen, Chief Scientist at Wyze, presents the “Cost-efficient, High-quality AI for Consumer-grade Smart Home Cameras” tutorial at the May 2024 Embedded Vision Summit. In this talk, Chen explains how Wyze delivers robust visual AI at ultra-low cost for millions of consumer smart cameras, and how his company is rapidly…

Optimizing the CV Pipeline in Automotive Vehicle Development Using the PVA Engine

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. In automotive software development, a growing number of large-scale AI models are being integrated into autonomous vehicles. These models range from vision AI models to end-to-end AI models for autonomous driving. Now the demand for computing…

“Implementing AI/Computer Vision for Corporate Security Surveillance,” a Presentation from VMware

Prasad Saranjame, former Head of Physical Security and Resiliency at VMware, presents the “Implementing AI/Computer Vision for Corporate Security Surveillance” tutorial at the May 2024 Embedded Vision Summit. AI-enabled security cameras offer substantial benefits for corporate security and operational efficiency. However, successful deployment requires thoughtful selection of use cases and…

“Multi-object Tracking Systems,” a Presentation from Tryolabs

Javier Berneche, Senior Machine Learning Engineer at Tryolabs, presents the “Multi-object Tracking Systems” tutorial at the May 2024 Embedded Vision Summit. Object tracking is an essential capability in many computer vision systems, including applications in fields such as traffic control, self-driving vehicles, sports and more. In this talk, Berneche…

“Improved Navigation Assistance for the Blind via Real-time Edge AI,” a Presentation from Tesla

Aishwarya Jadhav, Software Engineer in the Autopilot AI Team at Tesla, presents the “Improved Navigation Assistance for the Blind via Real-time Edge AI” tutorial at the May 2024 Embedded Vision Summit. In this talk, Jadhav presents recent work on AI Guide Dog, a groundbreaking research project aimed at providing navigation…

“Introduction to Modern Radar for Machine Perception,” a Presentation from Sensor Cortek

Robert Laganière, Professor at the University of Ottawa and CEO of Sensor Cortek, presents the “Introduction to Modern Radar for Machine Perception” tutorial at the May 2024 Embedded Vision Summit. In this presentation, Laganière provides an introduction to radar (short for radio detection and ranging) for machine perception. Radar is…

2023 Milestone: More than 760,000 LiDAR Systems in Passenger Cars. Which Technology Leads the Market?

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Hesai, Seyond, RoboSense, and Valeo: Yole Group’s analysts invite you to dive deep into leading LiDAR tech. OUTLINE: LiDAR can achieve an angular resolution of 0.05°, offering more precise object detection and…

The Two Long-wave Infrared Innovations Required for a US$500M Market

Thermal cameras have been an automotive technology for 25 years yet are still not prevalent, so further developments are clearly required before they become a common choice in ADAS sensor suites. Although over 1.2 million on-road vehicles have thermal LWIR camera technology installed, this is only 0.08% of the estimated total of 1.5 billion…

What Is Photobleaching, and How Does It Impact Medical Imaging?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Photobleaching is a major challenge in medical imaging, as it compromises the accuracy of fluorescence-based techniques. See how photobleaching works and find out how you can overcome this challenge to help medical applications enhance the…

“Omnilert Gun Detect: Harnessing Computer Vision to Tackle Gun Violence,” a Presentation from Omnilert

Chad Green, Director of Artificial Intelligence at Omnilert, presents the “Omnilert Gun Detect: Harnessing Computer Vision to Tackle Gun Violence” tutorial at the May 2024 Embedded Vision Summit. In the United States in 2023, there were 658 mass shootings, and 42,996 people lost their lives to gun violence. Detecting and…

Redefining Hybrid Meetings With AI-powered 360° Videoconferencing

This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella. The global pandemic catalyzed a boom in videoconferencing that continues to grow as companies embrace hybrid work models and seek more sustainable approaches to business communication with less travel. Now, with videoconferencing becoming a cornerstone of modern…

“Using AI to Enhance the Well-being of the Elderly,” a Presentation from Kepler Vision Technologies

Harro Stokman, CEO of Kepler Vision Technologies, presents the “Using Artificial Intelligence to Enhance the Well-being of the Elderly” tutorial at the May 2024 Embedded Vision Summit. This presentation provides insights into an innovative application of artificial intelligence and advanced computer vision technologies in the healthcare sector, specifically focused on…

eYs3D Microelectronics Demonstration of Computer Vision Embedded Solutions with an AI SoC

James Wang, President of eYs3D Microelectronics, demonstrates the company’s latest edge AI and vision technologies and products at the September 2024 Edge AI and Vision Alliance Forum. Specifically, Wang demonstrates the company’s stereo camera and AI SoC with edge processing capability, and computer vision object detection models.

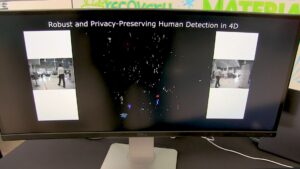

Waveye Demonstration of Ultra-high Resolution LIR Imaging Technology

Levon Budagyan, CEO of Waveye, demonstrates the company’s latest edge AI and vision technologies and products at the September 2024 Edge AI and Vision Alliance Forum. Specifically, Budagyan demonstrates his company’s ultra-high resolution LIR imaging technology, focused on key robotics applications: robust, privacy-preserving human detection for robots; 4D microwave imaging in high resolution for indoor…

How AI and Smart Glasses Give You a New Perspective on Real Life

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. When smart glasses are paired with generative artificial intelligence, they become the ideal way to interact with your digital assistant. They may be shades, but smart glasses are poised to give you a clearer view of everything…