Optical Character Recognition Functions

“Choosing the Optimum Mix of Sensors for Driver Assistance and Autonomous Vehicles,” a Presentation from NXP Semiconductors

Ali Osman Ors, Director of Automotive Microcontrollers and Processors at NXP Semiconductors, presents the "Choosing the Optimum Mix of Sensors for Driver Assistance and Autonomous Vehicles" tutorial at the May 2017 Embedded Vision Summit. A diverse set of sensor technologies is available and emerging to provide vehicle autonomy or driver assistance. These sensor technologies often

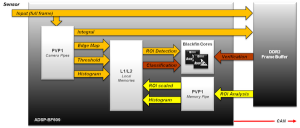

“Implementing an Optimized CNN Traffic Sign Recognition Solution,” a Presentation from NXP Semiconductors and Au-Zone Technologies

Rafal Malewski, Head of the Graphics Technology Engineering Center at NXP Semiconductors, and Sébastien Taylor, Vision Technology Architect at Au-Zone Technologies, present the "Implementing an Optimized CNN Traffic Sign Recognition Solution" tutorial at the May 2017 Embedded Vision Summit. Now that the benefits of using deep neural networks for image classification are well known, the

May 2017 Embedded Vision Summit Slides

The Embedded Vision Summit was held on May 1-3, 2017 in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in… May 2017 Embedded Vision Summit

May 2016 Embedded Vision Summit Proceedings

The Embedded Vision Summit was held on May 2-4, 2016 in Santa Clara, California, as an educational forum for product creators interested in incorporating visual intelligence into electronic systems and software. The presentations delivered at the Summit are listed below. All of the slides from these presentations are included in… May 2016 Embedded Vision Summit

“Techniques for Efficient Implementation of Deep Neural Networks,” a Presentation from Stanford

Song Han, graduate student at Stanford, delivers the presentation "Techniques for Efficient Implementation of Deep Neural Networks" at the March 2016 Embedded Vision Alliance Member Meeting. Song presents recent findings on techniques for the efficient implementation of deep neural networks.

Deep Learning Use Cases for Computer Vision (Download)

Six Deep Learning-Enabled Vision Applications in Digital Media, Healthcare, Agriculture, Retail, Manufacturing, and Other Industries. The enterprise applications of deep learning have only begun to tap their potential. Because it is data agnostic, deep learning is poised to be used in almost every enterprise vertical… Deep Learning Use Cases for

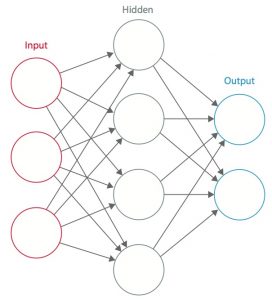

Using Convolutional Neural Networks for Image Recognition

This article was originally published at Cadence's website. It is reprinted here with the permission of Cadence. Convolutional neural networks (CNNs) are widely used in pattern- and image-recognition problems as they have a number of advantages compared to other techniques. This white paper covers the basics of CNNs including a description of the various layers
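
The teaser mentions the various layers of a CNN without detailing them. As a minimal sketch, assuming the common convolution, ReLU, max-pooling pattern (an illustration, not code from the Cadence white paper), the core per-layer operations can be written in plain Python. The function names `conv2d_valid`, `relu` and `max_pool2x2` are hypothetical helpers chosen for this example.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation: slide the kernel over the image with no
    padding and stride 1. This is what CNN 'convolution' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(oh):
        row = []
        for j in range(ow):
            acc = 0.0
            # Weighted sum of the kh x kw window anchored at (i, j)
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise nonlinearity: negative responses are clamped to zero."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


# Usage: a 6x6 image with a dark-to-bright vertical edge, and a hand-set
# vertical-edge kernel (in a trained CNN these weights would be learned).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1] for _ in range(3)]
features = max_pool2x2(relu(conv2d_valid(image, kernel)))
print(features)
```

The convolution responds only where the window straddles the edge, and pooling then shrinks the feature map while keeping the strongest response in each 2x2 block, which is the intuition behind stacking these layers for image recognition.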

“Harman’s Augmented Navigation Platform—The Convergence of ADAS and Navigation,” a Presentation from Harman

Alon Atsmon, Vice President of Technology Strategy at Harman International, presents the "Harman’s Augmented Navigation Platform—The Convergence of ADAS and Navigation" tutorial at the May 2015 Embedded Vision Summit. Until recently, advanced driver assistance systems (ADAS) and in-car navigation systems have evolved as separate standalone systems. Today, however, the combination of available embedded computing power

Vision in Wearable Devices: Enhanced and Expanded Application and Function Choices

A version of this article was originally published at EE Times' Embedded.com Design Line. It is reprinted here with the permission of EE Times. Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide

Accelerate Machine Learning with the cuDNN Deep Neural Network Library

This article was originally published at NVIDIA's developer blog. It is reprinted here with the permission of NVIDIA. By Larry Brown, Solution Architect, NVIDIA. Machine Learning (ML) has its origins in the field of Artificial Intelligence, which started out decades ago with the lofty goals of creating a computer that could do any work a

Improved Vision Processors, Sensors Enable Proliferation of New and Enhanced ADAS Functions

This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications. Thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of embedded

October 2013 Embedded Vision Summit Technical Presentation: “Better Image Understanding Through Better Sensor Understanding,” Michael Tusch, Apical

Michael Tusch, Founder and CEO of Apical Imaging, presents the "Better Image Understanding Through Better Sensor Understanding" tutorial within the "Front-End Image Processing for Vision Applications" technical session at the October 2013 Embedded Vision Summit East. One of the main barriers to widespread use of embedded vision is its reliability. For example, systems which detect

September 2013 Qualcomm UPLINQ Conference Presentation: “Accelerating Computer Vision Applications with the Hexagon DSP,” Eric Gregori, BDTI

Eric Gregori, Senior Software Engineer at BDTI, presents the "Accelerating Computer Vision Applications with the Hexagon DSP" tutorial at the September 2013 Qualcomm UPLINQ Conference. Smartphones, tablets and embedded systems increasingly use sophisticated vision algorithms to deliver capabilities like augmented reality and gesture user interfaces. Since vision algorithms are computationally demanding, a key challenge when

“Machine Learning,” a Presentation from UT Austin

Professor Kristen Grauman of the University of Texas at Austin presents the keynote on machine learning at the December 2012 Embedded Vision Alliance Member Summit. Grauman is a rising star in computer vision research. Among other distinctions, she was recently recognized with a Regents' Outstanding Teaching Award and, along with Devi Parikh, received the prestigious

December 2012 Embedded Vision Alliance Member Summit Technology Trends Presentation

Embedded Vision Alliance Editor-in-Chief (and BDTI Senior Analyst) Brian Dipert and BDTI Senior Software Engineer Eric Gregori co-deliver an embedded vision application technology trends presentation at the December 2012 Embedded Vision Alliance Member Summit. Brian and Eric discuss embedded vision opportunities in mobile electronics devices. They quantify the market sizes and trends for smartphones and

Camera-Based ADAS for Mass Deployments

By Peter Voss and Benno Kusstatscher, Automotive Segment Team, Analog Devices. This article was originally published by Elektronik Magazine. It is reprinted here with the permission of the original publisher. Abstract: Car manufacturers (OEMs) and system suppliers (Tier 1s) agree: Advanced Driver Assistance Systems (ADAS) will see a steep growth in the years to

Auto-Safety Mandate Brings Video Systems into Full View, Along with Design Challenges

By Don Nesbitt, Marketing Manager in the High Speed Signal Conditioning Group, Analog Devices. This article was originally published by Hanser Automotive Magazine. It is reprinted here with the permission of the original publisher. The emerging field of video-based automotive safety was in the headlines during December 2011 when the U.S. Department of Transportation proposed