LLMs and MLLMs

The past decade-plus has seen incredible progress in practical computer vision. Thanks to deep learning, computer vision is dramatically more robust and accessible, and has enabled compelling capabilities in thousands of applications, from automotive safety to healthcare. But today’s widely used deep learning techniques suffer from serious limitations. Often, they struggle when confronted with ambiguity (e.g., are those people fighting or dancing?) or with challenging imaging conditions (e.g., is that shadow in the fog a person or a shrub?). And, for many product developers, computer vision remains out of reach due to the cost and complexity of obtaining the necessary training data, or due to a lack of the necessary technical skills.

Recent advances in large language models (LLMs) and their variants, such as vision language models (VLMs, which comprehend both images and text), hold the key to overcoming these challenges. VLMs are an example of multimodal large language models (MLLMs), which integrate multiple data modalities such as language, images, audio, and video to enable complex cross-modal understanding and generation tasks. MLLMs represent a significant evolution in AI by combining the capabilities of LLMs with multimodal processing to handle diverse inputs and outputs.

The purpose of this portal is to facilitate awareness of, and education regarding, the challenges and opportunities in using LLMs, VLMs, and other types of MLLMs in practical applications, especially applications involving edge AI and machine perception. The content that follows (which is updated regularly) discusses these topics. As a starting point, we encourage you to watch the recording of the symposium “Your Next Computer Vision Model Might be an LLM: Generative AI and the Move From Large Language Models to Vision Language Models”, sponsored by the Edge AI and Vision Alliance. A preview video of the symposium introduction by Jeff Bier, Founder of the Alliance, is also available.

If there are topics related to LLMs, VLMs or other types of MLLMs that you’d like to learn about and don’t find covered below, please email us at [email protected] and we’ll consider adding content on these topics in the future.


Rockets to Retail: Intel Core Ultra Delivers Edge AI for Video Management

At Intel Vision, Network Optix debuts natural language prompt prototype to redefine video management, offering industries faster AI-driven insights and efficiency. On the surface, aerospace manufacturers, shopping malls, universities, police departments and automakers might not have a lot in common. But they each collectively use and manage hundreds to thousands of video cameras across their

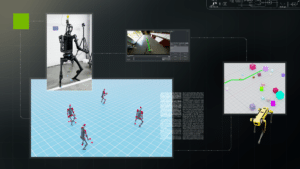

R²D²: Advancing Robot Mobility and Whole-body Control with Novel Workflows and AI Foundation Models from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Welcome to the first edition of the NVIDIA Robotics Research and Development Digest (R²D²). This technical blog series will give developers and researchers deeper insight and access to the latest physical AI and robotics research breakthroughs across

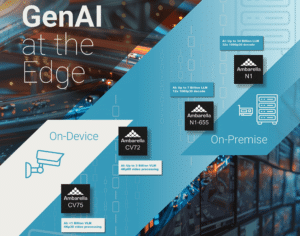

Ambarella Debuts Next-generation Edge GenAI Technology at ISC West, Including Reasoning Models Running on its CVflow Edge AI SoCs

With Over 30 Million Edge AI Systems-on-Chip Shipped, Ambarella is Driving Innovation for a Broad Range of On-Device and On-Premise Generative AI Applications. SANTA CLARA, Calif., March 31, 2025: Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced during the ISC West security expo that it is continuing to push the envelope

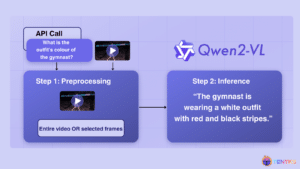

Video Understanding: Qwen2-VL, An Expert Vision-language Model

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Qwen2-VL, an advanced vision language model built on Qwen2 [1], sets new benchmarks in image comprehension across varied resolutions and ratios, while also tackling extended video content. Though Qwen2-VL excels on many fronts, this article explores the model’s

NVIDIA Announces Isaac GR00T N1 — the World’s First Open Humanoid Robot Foundation Model — and Simulation Frameworks to Speed Robot Development

Now Available, Fully Customizable Foundation Model Brings Generalized Skills and Reasoning to Humanoid Robots; NVIDIA, Google DeepMind and Disney Research Collaborate to Develop Next-Generation Open-Source Newton Physics Engine; New Omniverse Blueprint for Synthetic Data Generation and Open-Source Dataset Jumpstart Physical AI Data Flywheel. March 18, 2025 (GTC): NVIDIA today announced a portfolio of technologies to supercharge humanoid

NVIDIA Announces Major Release of Cosmos World Foundation Models and Physical AI Data Tools

New Models Enable Prediction, Controllable World Generation and Reasoning for Physical AI; Two New Blueprints Deliver Massive Physical AI Synthetic Data Generation for Robot and Autonomous Vehicle Post-Training; 1X, Agility Robotics, Figure AI, Skild AI Among Early Adopters. March 18, 2025 (GTC): NVIDIA today announced a major release of new NVIDIA Cosmos™ world foundation models (WFMs), introducing

NVIDIA Unveils Open Physical AI Dataset to Advance Robotics and Autonomous Vehicle Development

Expected to become the world’s largest such dataset, the initial release of standardized synthetic data is now available to robotics developers as open source. Teaching autonomous robots and vehicles how to interact with the physical world requires vast amounts of high-quality data. To give researchers and developers a head start, NVIDIA is releasing a massive,

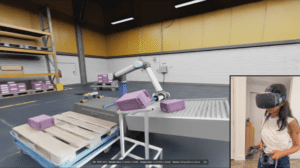

Build Real-time Multimodal XR Apps with NVIDIA AI Blueprint for Video Search and Summarization

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. With the recent advancements in generative AI and vision foundational models, VLMs present a new wave of visual computing wherein the models are capable of highly sophisticated perception and deep contextual understanding. These intelligent solutions offer a promising

Scalable Video Search: Cascading Foundation Models

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Video has become the lingua franca of the digital age, but its ubiquity presents a unique challenge: how do we efficiently extract meaningful information from this ocean of visual data? In Part 1 of this series, we navigate

Building a Simple VLM-based Multimodal Information Retrieval System with NVIDIA NIM

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. In today’s data-driven world, the ability to retrieve accurate information from even modest amounts of data is vital for developers seeking streamlined, effective solutions for quick deployments, prototyping, or experimentation. One of the key challenges in information retrieval

Vision Language Model Prompt Engineering Guide for Image and Video Understanding

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Vision language models (VLMs) are evolving at a breakneck speed. In 2020, the first VLMs revolutionized the generative AI landscape by bringing visual understanding to large language models (LLMs) through the use of a vision encoder. These

SAM 2 + GPT-4o: Cascading Foundation Models via Visual Prompting (Part 2)

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2 of our Segment Anything Model 2 (SAM 2) Series, we show how foundation models (e.g., GPT-4o, Claude Sonnet 3.5 and YOLO-World) can be used to generate visual inputs (e.g., bounding boxes) for SAM 2. Learn

SAM 2 + GPT-4o: Cascading Foundation Models via Visual Prompting (Part 1)

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 1 of this article we introduce Segment Anything Model 2 (SAM 2). Then, we walk you through how you can set it up and run inference on your own video clips. Learn more about visual prompting

AI Disruption is Driving Innovation in On-device Inference

This article was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. How the proliferation and evolution of generative models will transform the AI landscape and unlock value. The introduction of DeepSeek R1, a cutting-edge reasoning AI model, has caused ripples throughout the tech industry. That’s because its performance is on

From Seeing to Understanding: LLMs Leveraging Computer Vision

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. From Face ID unlocking our phones to counting customers in stores, Computer Vision has already transformed how businesses operate. As Generative AI (GenAI) becomes more compelling and accessible, this tried-and-tested technology is entering a new era of

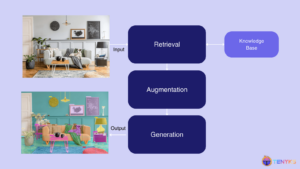

RAG for Vision: Building Multimodal Computer Vision Systems

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. This article explores the exciting world of Visual RAG, its significance, and how it’s revolutionizing traditional computer vision pipelines. From understanding the basics of RAG to its specific applications in visual tasks and surveillance, we’ll examine