Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, adding specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, requirements depend on the application space; the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used in other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs based on the same instruction set (ARM, x86, etc.), which allows a common set of software development tools. However, even when the base instruction set is the same, each CPU vendor integrates a different set of peripherals with unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended programming model requires customized versions of standard development tools. Most CPU vendors therefore develop their own optimized software tool chain, while also working with third-party tool suppliers to ensure that their CPU components are broadly supported.
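Because parts from different vendors often share a base instruction set, a build script can select the same family of GNU tools for all of them. The minimal Python sketch below assumes a Linux target and the Debian/Ubuntu cross-toolchain naming (for example `aarch64-linux-gnu-gcc` and `arm-linux-gnueabihf-gcc`); `GNU_TRIPLETS` and `compiler_for` are hypothetical helpers. Vendor SDKs often ship their own prefixes, along with peripheral drivers and accelerator compilers layered on top, which this sketch does not cover.

```python
import platform

# Hypothetical mapping from an instruction-set name (as reported by
# platform.machine() or chosen by the developer) to a GNU target triplet.
# The triplets follow common Debian/Ubuntu cross-toolchain naming; a vendor
# SDK may use a different prefix for the same instruction set.
GNU_TRIPLETS = {
    "x86_64": "x86_64-linux-gnu",
    "amd64": "x86_64-linux-gnu",     # name reported on Windows hosts
    "aarch64": "aarch64-linux-gnu",
    "arm64": "aarch64-linux-gnu",    # name reported on macOS hosts
    "armv7l": "arm-linux-gnueabihf", # 32-bit ARM, hard-float ABI
}


def compiler_for(machine: str, tool: str = "gcc") -> str:
    """Return the tool name (gcc, g++, gdb, ...) for an instruction set."""
    triplet = GNU_TRIPLETS.get(machine.lower())
    if triplet is None:
        raise ValueError(f"no tool chain configured for {machine!r}")
    return f"{triplet}-{tool}"


if __name__ == "__main__":
    # One source tree, a different tool chain per target instruction set.
    for target in ("x86_64", "aarch64", platform.machine()):
        try:
            print(target, "->", compiler_for(target))
        except ValueError as err:
            print(err)
```

Keeping the mapping in one table means that adding another ARM-based part from a new vendor usually leaves the compiler selection unchanged. Only the vendor-specific libraries and accelerator tools need new entries.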

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated: they must handle multiple instruction sets and the added challenge of debugging the system as a whole. Most vendors provide a suite of tools that integrates these development tasks into a single interface, simplifying software development and testing.
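In a heterogeneous system, a single vision operation may run on an accelerator when one is present and on the CPU otherwise. The Python sketch below illustrates one common pattern under stated assumptions. `KernelDispatcher`, `gray_accel` and `accel_available` are hypothetical names, not any vendor's API, and the accelerator path is only a stand-in for a call into a GPU, DSP or FPGA runtime. The portable CPU kernel uses the usual fixed-point approximation of BT.601 luma (weights 77, 150 and 29, which sum to 256).

```python
from typing import Callable, Dict, List, Tuple

RGB = Tuple[int, int, int]  # one 8-bit pixel
Kernel = Callable[..., List[int]]
Backend = Tuple[str, Kernel, Callable[[], bool]]


def gray_cpu(pixels: List[RGB]) -> List[int]:
    """Portable CPU path: fixed-point BT.601 luma (weights sum to 256)."""
    return [(77 * r + 150 * g + 29 * b) >> 8 for r, g, b in pixels]


def gray_accel(pixels: List[RGB]) -> List[int]:
    """Hypothetical accelerator path. A real one would hand the buffer to a
    vendor GPU/DSP/FPGA runtime; here it only stands in for that call."""
    raise RuntimeError("accelerator runtime not present")


def accel_available() -> bool:
    """Hypothetical probe for the vendor runtime; always False in this sketch."""
    return False


class KernelDispatcher:
    """Runs each kernel on the first registered backend whose probe succeeds."""

    def __init__(self) -> None:
        self._backends: Dict[str, List[Backend]] = {}

    def register(self, kernel: str, name: str, impl: Kernel,
                 available: Callable[[], bool] = lambda: True) -> None:
        # Registration order is priority order: accelerators first, CPU last.
        self._backends.setdefault(kernel, []).append((name, impl, available))

    def run(self, kernel: str, *args) -> Tuple[str, List[int]]:
        for name, impl, available in self._backends.get(kernel, []):
            if available():
                return name, impl(*args)
        raise LookupError(f"no usable backend for kernel {kernel!r}")


dispatcher = KernelDispatcher()
dispatcher.register("rgb_to_gray", "accel", gray_accel, accel_available)
dispatcher.register("rgb_to_gray", "cpu", gray_cpu)

if __name__ == "__main__":
    backend, out = dispatcher.run("rgb_to_gray", [(255, 0, 0), (255, 255, 255)])
    print(backend, out)  # cpu [76, 255]
```

Keeping a portable CPU reference alongside each accelerated kernel also helps with system-level debugging, because accelerator output can be compared against it on the same input.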

Qualcomm Dragonwing Intelligent Video Suite Modernizes Video Management with Generative AI at Its Core

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Video cameras generate a lot of data. Companies that use a video management system (VMS) are left wanting to get more value out of all the video data they generate, enabling them to take the actions that

Rockets to Retail: Intel Core Ultra Delivers Edge AI for Video Management

At Intel Vision, Network Optix debuts natural language prompt prototype to redefine video management, offering industries faster AI-driven insights and efficiency. On the surface, aerospace manufacturers, shopping malls, universities, police departments and automakers might not have a lot in common. But they each collectively use and manage hundreds to thousands of video cameras across their

Attend Jeff Bier’s Presentation and Discussion at Andes Technology’s Upcoming RISC-V CON Silicon Valley Event

On April 29, 2025 from 9:00 AM to 6:00 PM PT, Alliance Member company Andes Technology will deliver the RISC-V CON Silicon Valley event at the DoubleTree Hotel by Hilton in San Jose, CA. Jeff Bier, Founder of the Edge AI and Vision Alliance, will be one of the invited guest speakers. From the event

LLM Benchmarking: Fundamental Concepts

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The past few years have witnessed the rise in popularity of generative AI and large language models (LLMs), as part of a broad AI revolution. As LLM-based applications are rolled out across enterprises, there is a need to

STMicroelectronics Releases STM32MP23 Microprocessors Featured for Performance and Economy and Extends Support for OpenSTLinux Releases

Focused feature set with AI acceleration and cyber protection for industrial and IoT applications Geneva, Switzerland, April 7, 2025 — STMicroelectronics has announced mass-market availability of new STM32MP23 general-purpose microprocessors (MPUs), combining high-temperature operation up to 125°C with the speed and efficiency of dual Arm® Cortex®-A35 cores, for superior performance and ruggedness in industrial and

Enterprise AI: Insights from Industry Leaders

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. The potential of AI continues to captivate businesses, yet the reality of implementation often proves challenging. In our latest event, we explored the complex landscape of enterprise AI adoption. Our expert panel cut through the hype, offering

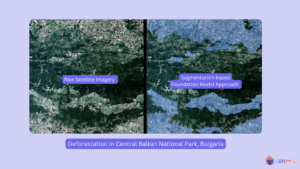

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how Foundation Models can be leveraged to track deforestation patterns. Building upon the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have

High-performance AI In House: Qualcomm Dragonwing AI On-prem Appliance Solution and Qualcomm AI Inference Suite

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The Qualcomm Dragonwing AI On-Prem Appliance Solution pairs with the software and services in the Qualcomm AI Inference Suite for AI inference that spans from near-edge to cloud. Together, they allow your small-to-medium business, enterprise or industrial