Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, with the addition of specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, the requirements depend on the application space, since the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used for other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs that are based on the same instruction set (ARM, x86, etc.), allowing a common set of development tools for software development. However, even though the base instruction set is the same, each CPU vendor integrates a different set of peripherals that have unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires a customized version of standard development tools. Most CPU vendors develop their own optimized software tool chain, while also working with third-party software tool suppliers to make sure that the CPU components are broadly supported.
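As a rough illustration of why a shared instruction set does not remove vendor-specific work, the following Python sketch shows application code written against one common interface while each vendor supplies its own peripheral implementation. Every name here (`CameraPeripheral`, `VendorACamera`, `VendorBCamera`, the register names) is hypothetical and does not correspond to any real vendor SDK.

```python
from abc import ABC, abstractmethod


class CameraPeripheral(ABC):
    """Hypothetical common interface that application code targets."""

    @abstractmethod
    def configure(self, width: int, height: int) -> dict:
        """Return the vendor-specific register settings for a resolution."""


class VendorACamera(CameraPeripheral):
    # Assumption: vendor A packs width and height into one 32-bit register.
    def configure(self, width: int, height: int) -> dict:
        return {"RES": (width << 16) | height}


class VendorBCamera(CameraPeripheral):
    # Assumption: vendor B exposes two separate registers instead.
    def configure(self, width: int, height: int) -> dict:
        return {"WIDTH": width, "HEIGHT": height}


def start_capture(camera: CameraPeripheral) -> dict:
    # The application code is identical for both vendors; only the
    # object behind the interface changes.
    return camera.configure(640, 480)
```

Calling `start_capture(VendorACamera())` and `start_capture(VendorBCamera())` runs the same application logic but produces different register layouts, which is the kind of difference a vendor's customized tool chain and libraries typically hide.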

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated and must handle multiple instruction sets and additional system debugging challenges. Most vendors provide a suite of tools that integrate development tasks into a single interface for the developer, simplifying software development and testing.
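One way to picture the heterogeneous case is a pipeline stage that has an implementation per processing architecture, with a dispatcher choosing among whichever backends are present. The sketch below is a simplified assumption, not how any particular vendor's tools work: the backend names, the `KERNELS` table, and the functions are all hypothetical, and the "DSP" kernel is an ordinary Python stand-in for offloaded code.

```python
from typing import Callable, Dict, List, Tuple


def threshold_cpu(pixels: List[int], level: int) -> List[int]:
    # Reference implementation running on the host CPU.
    return [255 if p >= level else 0 for p in pixels]


def threshold_dsp(pixels: List[int], level: int) -> List[int]:
    # Stand-in for a DSP-offloaded kernel; here it simply mirrors the CPU
    # result so the sketch stays runnable without special hardware.
    return [255 if p >= level else 0 for p in pixels]


# Hypothetical table of the backends that implement this stage.
KERNELS: Dict[str, Callable[[List[int], int], List[int]]] = {
    "cpu": threshold_cpu,
    "dsp": threshold_dsp,
}


def pick_backend(available: List[str], preferred: List[str]) -> str:
    # Take the first preferred backend that is both present on the device
    # and has a kernel; otherwise fall back to the CPU.
    for name in preferred:
        if name in available and name in KERNELS:
            return name
    return "cpu"


def run_threshold(
    pixels: List[int], level: int, available: List[str]
) -> Tuple[str, List[int]]:
    backend = pick_backend(available, preferred=["dsp", "gpu", "cpu"])
    return backend, KERNELS[backend](pixels, level)
```

In a real system each entry would be compiled by a different tool chain and debugged on a different core, which is the complexity that an integrated development environment is meant to gather behind a single interface.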

Qualcomm Expands Generative AI Capabilities With Acquisition of VinAI Division

Highlights: Acquisition will strengthen Qualcomm’s generative AI research and development capabilities and expedite the creation of advanced AI solutions for products like smartphones, PCs, software-defined vehicles, and more. Dr. Hung Bui, VinAI’s founder and CEO, will join Qualcomm. Apr 1, 2025 – SAN DIEGO & HANOI, VIETNAM – Qualcomm today announced the acquisition of MovianAI Artificial

DeepSeek: Stop the Panic

DeepSeek is a precursor of many more disruptors coming in the AI world. Its importance may be overblown compared with what comes next. What’s at stake: DeepSeek nearly sank Nvidia and other AI model players. Except DeepSeek itself is barely known by anyone, its true story still a mystery, and the likely impact on the

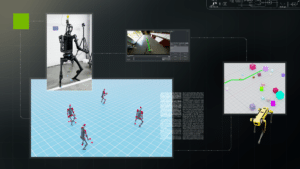

R²D²: Advancing Robot Mobility and Whole-body Control with Novel Workflows and AI Foundation Models from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Welcome to the first edition of the NVIDIA Robotics Research and Development Digest (R2D2). This technical blog series will give developers and researchers deeper insight and access to the latest physical AI and robotics research breakthroughs across

Sony Semiconductor Demonstration of AITRIOS Vision AI at the Extreme Edge Made Simple

Armaghan Ebrahimi, Senior Technical Product Manager, and Zachary Li, Product and Business Development Manager, both of Sony Semiconductor, demonstrate the company’s latest edge AI and vision technologies and products at the March 2025 Edge AI and Vision Alliance Forum. Specifically, Ebrahimi and Li demonstrate the Raspberry Pi AI Camera, powered by Sony’s IMX500 smart sensor

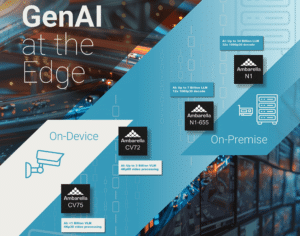

Ambarella Debuts Next-generation Edge GenAI Technology at ISC West, Including Reasoning Models Running on its CVflow Edge AI SoCs

With Over 30 Million Edge AI Systems-on-Chip Shipped, Ambarella is Driving Innovation for a Broad Range of On-Device and On-Premise Generative AI Applications. SANTA CLARA, Calif., March 31, 2025 – Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced during the ISC West security expo that it is continuing to push the envelope

LLMOps Unpacked: The Operational Complexities of LLMs

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. Incorporating a Large Language Model (LLM) into a commercial product is a complex endeavor, far beyond the simplicity of prototyping. As Machine Learning and Generative AI (GenAI) evolve, so does the need for specialized operational practices, leading

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 1)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Satellite imagery has revolutionized how we monitor Earth’s forests, offering unprecedented insights into deforestation patterns. In this two-part series, we explore both traditional and cutting-edge approaches to forest monitoring, using Bulgaria’s Central Balkan National Park as our

MemryX Raises $44 Million in Series B Funding for Advanced Edge AI Computing

ANN ARBOR, Mich., March 27, 2025 /PRNewswire/ – MemryX, a provider of industry-leading Edge AI semiconductor solutions, announced today it has raised $44 million in Series B funding. The funding round received broad support from new and existing investors. This announcement follows MemryX reaching production quality of the industry-leading MX3 Accelerator chip, the